Regression Models

Linear Regression

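Training Dataset: $N$ input-output pairs $(x_1, y_1), \ldots, (x_N, y_N)$, where each $x_j$ and $y_j$ is a real number.

Hypothesis Space: All possible linear functions of continuous-valued inputs and outputs.

Hypothesis: $h_{\mathbf{w}}(x) = w_1 x + w_0$, with weight vector $\mathbf{w} = (w_0, w_1)$.

Loss Function (squared error): $L_2(y, h_{\mathbf{w}}(x)) = (y - h_{\mathbf{w}}(x))^2$

Cost Function: $\text{Loss}(h_{\mathbf{w}}) = \sum_{j=1}^{N} L_2(y_j, h_{\mathbf{w}}(x_j)) = \sum_{j=1}^{N} \left(y_j - (w_1 x_j + w_0)\right)^2$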

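Analytical Solution: setting the partial derivatives of the cost with respect to $w_0$ and $w_1$ to zero gives

$$w_1 = \frac{N \sum_j x_j y_j - \left(\sum_j x_j\right)\left(\sum_j y_j\right)}{N \sum_j x_j^2 - \left(\sum_j x_j\right)^2}, \qquad w_0 = \frac{\sum_j y_j - w_1 \sum_j x_j}{N}$$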

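Gradient Descent Algorithm:

```
Initialize w randomly
repeat
    Compute gradient: g = ∇Loss(w)      (g[i] = ∂Loss(w)/∂w[i], evaluated at the current w)
    for each w[i] in w
        Update weight: w[i] = w[i] - α * g[i]
until convergence
```

Where α is the learning rate (step size).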

Hyperparameters: Learning rate, number of epochs, and batch size.
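To compare the two routes, here is a minimal NumPy sketch (assuming NumPy is available) that fits $h_{\mathbf{w}}(x) = w_1 x + w_0$ both with the analytical formulas and with batch gradient descent. The toy data, the zero initialisation, `alpha=0.01`, and `epochs=5000` are illustrative choices. The gradient is taken on the mean (rather than the sum) of squared errors so that the learning rate does not depend on $N$.

```python
import numpy as np

def closed_form(x, y):
    """Analytical least-squares solution for h(x) = w1 * x + w0."""
    n = len(x)
    w1 = (n * np.sum(x * y) - np.sum(x) * np.sum(y)) / (n * np.sum(x ** 2) - np.sum(x) ** 2)
    w0 = (np.sum(y) - w1 * np.sum(x)) / n
    return w0, w1

def gradient_descent(x, y, alpha=0.01, epochs=5000):
    """Batch gradient descent on the mean squared error, starting from w = 0."""
    w0, w1 = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        residual = y - (w1 * x + w0)
        # Both partial derivatives are computed from the current weights before updating
        grad_w0 = -2.0 * np.sum(residual) / n
        grad_w1 = -2.0 * np.sum(residual * x) / n
        w0 -= alpha * grad_w0
        w1 -= alpha * grad_w1
    return w0, w1

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0   # noise-free data, so both methods should recover w1 = 2, w0 = 1
print(closed_form(x, y))
print(gradient_descent(x, y))
```

The learning rate matters: for this toy data, values above roughly 0.15 make the updates diverge instead of converge.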

Multivariate Linear Regression

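In these problems, each example $\mathbf{x}_j$ is an $n$-element vector. The hypothesis space $\mathcal{H}$ now includes linear functions of multiple continuous-valued inputs and a single continuous output:

$$h_{\mathbf{w}}(\mathbf{x}_j) = w_0 + w_1 x_{j,1} + \cdots + w_n x_{j,n} = w_0 + \sum_{i=1}^{n} w_i x_{j,i}$$

We want to find the weight vector $\mathbf{w}^*$ that minimises the squared-error loss over the training examples.

In vector notation:

$$h_{\mathbf{w}}(\mathbf{x}_j) = \mathbf{w}^\top \mathbf{x}_j, \qquad \mathbf{w}^* = \arg\min_{\mathbf{w}} \sum_{j} L_2(y_j, \mathbf{w}^\top \mathbf{x}_j)$$

Where $x_{j,0} = 1$ is a dummy input attribute, so that the intercept $w_0$ becomes an ordinary weight.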

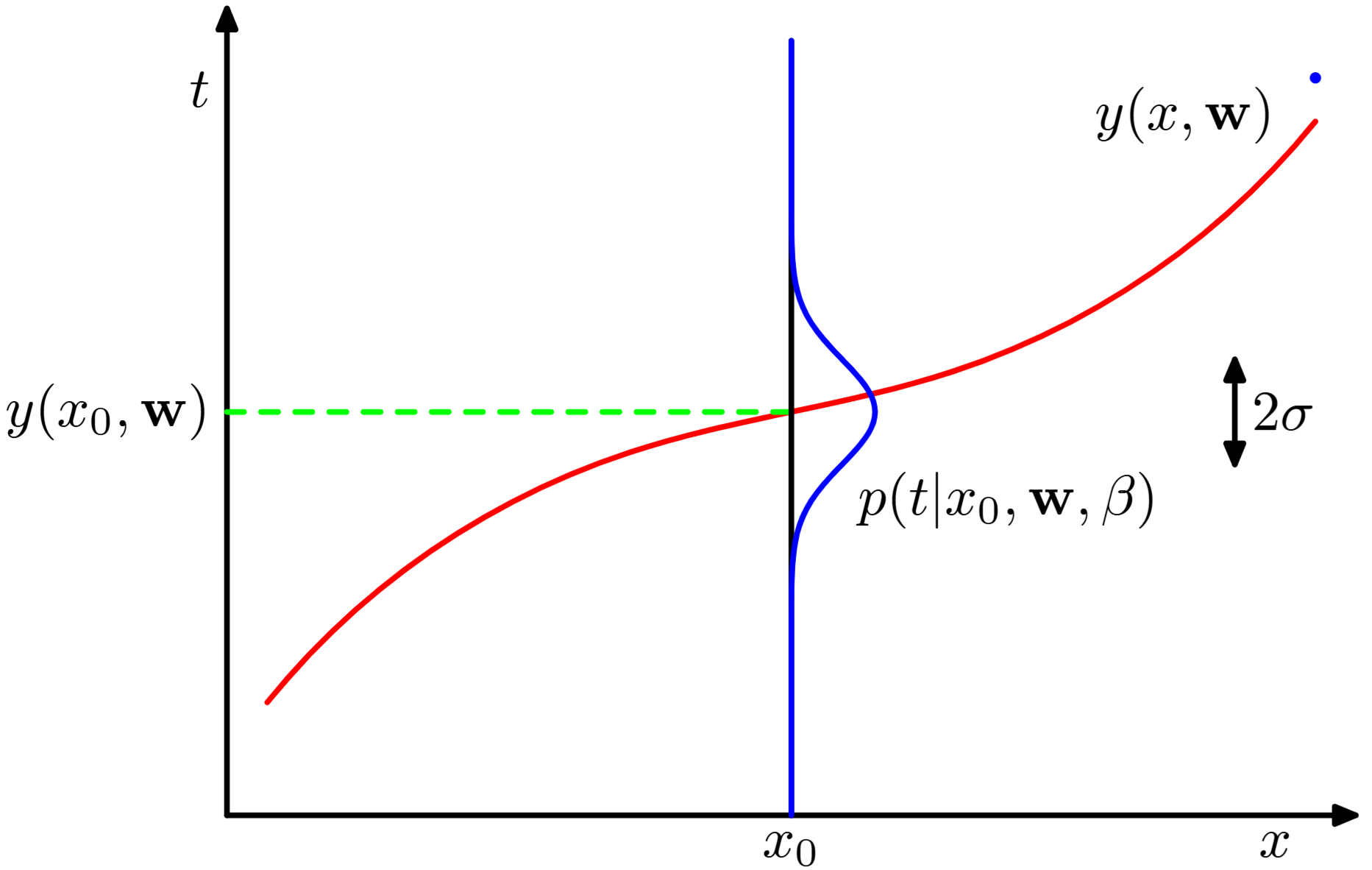

Probabilistic Interpretation of Linear Regression

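The regression problem is considered as:

$$y = \mathbf{w}^\top \mathbf{x} + \epsilon, \qquad \epsilon \sim \mathcal{N}(0, \sigma^2)$$

We want to maximise the likelihood function of the training data given the model parameters. Because the noise is Gaussian, maximising the log-likelihood with respect to $\mathbf{w}$ is equivalent to minimising the sum of squared errors.

Closed-form solution for linear regression (the normal equation):

$$\mathbf{w}^* = (\mathbf{X}^\top \mathbf{X})^{-1} \mathbf{X}^\top \mathbf{y}$$

Where $\mathbf{X}$ is the data matrix (one row per example, including the dummy input $x_{j,0} = 1$) and $\mathbf{y}$ is the vector of outputs.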
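A minimal sketch of the closed-form solution, assuming NumPy and synthetic data generated with Gaussian noise (`true_w`, the noise level, and the sample size are illustrative). Solving $(\mathbf{X}^\top\mathbf{X})\mathbf{w} = \mathbf{X}^\top\mathbf{y}$ with `np.linalg.solve` avoids forming the inverse explicitly; `np.linalg.lstsq` is used only as a cross-check. The last line uses the standard maximum-likelihood estimate of the noise variance (the mean squared residual).

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 200
features = rng.normal(size=(n_samples, 2))
true_w = np.array([0.5, 2.0, -1.0])                      # [w0, w1, w2], illustrative
X = np.column_stack([np.ones(n_samples), features])      # dummy column x_0 = 1
y = X @ true_w + rng.normal(scale=0.1, size=n_samples)   # Gaussian noise, sigma = 0.1

# Normal equation: solve (X^T X) w = X^T y instead of computing the inverse
w_star = np.linalg.solve(X.T @ X, X.T @ y)

# Cross-check with NumPy's least-squares solver
w_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# Maximum-likelihood estimate of the noise variance sigma^2
sigma2_ml = np.mean((y - X @ w_star) ** 2)
print(w_star, sigma2_ml)
```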

Linear Basis Function Models

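The model becomes:

$$h_{\mathbf{w}}(\mathbf{x}) = \sum_{j=0}^{M-1} w_j \, \phi_j(\mathbf{x}) = \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x})$$

Where $\phi_j(\mathbf{x})$ are fixed (possibly non-linear) basis functions, $\phi_0(\mathbf{x}) = 1$ plays the role of the bias, and $\boldsymbol{\phi} = (\phi_0, \ldots, \phi_{M-1})^\top$.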

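If we apply a probabilistic interpretation with Gaussian noise of variance $\sigma^2$, we need to maximise the likelihood of:

$$p(\mathbf{y} \mid \mathbf{X}, \mathbf{w}, \sigma^2) = \prod_{n=1}^{N} \mathcal{N}\left(y_n \mid \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x}_n), \sigma^2\right)$$

After a similar process (see Chapter 3 in Bishop, 2006), the loss function becomes:

$$E_D(\mathbf{w}) = \frac{1}{2} \sum_{n=1}^{N} \left(y_n - \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x}_n)\right)^2$$

And the normal equation solution:

$$\mathbf{w}_{\text{ML}} = (\boldsymbol{\Phi}^\top \boldsymbol{\Phi})^{-1} \boldsymbol{\Phi}^\top \mathbf{y}$$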

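The design matrix $\boldsymbol{\Phi}$ is the $N \times M$ matrix with elements $\Phi_{nj} = \phi_j(\mathbf{x}_n)$, so row $n$ is $\boldsymbol{\phi}(\mathbf{x}_n)^\top$.

By using non-linear basis functions (e.g., polynomials $\phi_j(x) = x^j$ or Gaussians), the model can fit non-linear relationships between $\mathbf{x}$ and $y$ while remaining linear in the parameters $\mathbf{w}$, so the same closed-form solution still applies.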
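As a minimal sketch, assuming NumPy and a polynomial basis $\phi_j(x) = x^j$ (one possible choice), the design matrix can be built with `np.vander` and the maximum-likelihood weights obtained by least squares. The quadratic target below is illustrative and noise-free, so the fitted weights should match its coefficients.

```python
import numpy as np

def polynomial_design_matrix(x, degree):
    """Design matrix with Phi[n, j] = x_n ** j for j = 0..degree (column 0 is phi_0 = 1)."""
    return np.vander(x, N=degree + 1, increasing=True)

def fit_basis_model(Phi, y):
    """Maximum-likelihood weights: the least-squares solution of Phi w = y."""
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return w

x = np.linspace(-1.0, 1.0, 20)
y = 1.0 + 2.0 * x - 3.0 * x ** 2   # non-linear in x, but linear in the weights
Phi = polynomial_design_matrix(x, degree=2)
w_ml = fit_basis_model(Phi, y)
print(Phi.shape, w_ml)             # shape (20, 3); weights close to [1, 2, -3]
```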

Linear Classifiers

A decision boundary is a line (or a surface in higher dimensions) that separates data into classes.

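The hypothesis is the result of passing a linear function through a threshold function:

$$h_{\mathbf{w}}(\mathbf{x}) = \text{Threshold}(\mathbf{w}^\top \mathbf{x}), \qquad \text{Threshold}(z) = \begin{cases} 1 & \text{if } z \geq 0 \\ 0 & \text{otherwise} \end{cases}$$

The decision boundary is the set of points where $\mathbf{w}^\top \mathbf{x} = 0$.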

The Perceptron

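The perceptron is a linear classifier model (i.e., a linear discriminant), with hypothesis space defined by all the functions of the form:

$$h_{\mathbf{w}}(\mathbf{x}) = f\left(\mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x})\right)$$

The function $f$ is a step activation: $f(a) = +1$ if $a \geq 0$ and $f(a) = -1$ otherwise.

We want to find a weight vector $\mathbf{w}$ that classifies every training example correctly, i.e., $y_n \, \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x}_n) > 0$ for all $n$, where the targets are $y_n \in \{+1, -1\}$.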
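One standard way to search for such a $\mathbf{w}$ is the perceptron learning rule: whenever an example is misclassified, add $\eta \, y_n \boldsymbol{\phi}(\mathbf{x}_n)$ to the weights. The sketch below assumes NumPy, a bias feature $\phi_0 = 1$, and uses logical AND as an illustrative linearly separable dataset; for non-separable data the loop simply stops after `max_epochs`.

```python
import numpy as np

def perceptron_train(Phi, y, eta=1.0, max_epochs=100):
    """Perceptron learning rule.
    Phi: (N, M) design matrix whose first column is the bias feature 1.
    y: targets in {+1, -1}."""
    w = np.zeros(Phi.shape[1])
    for _ in range(max_epochs):
        errors = 0
        for phi_n, y_n in zip(Phi, y):
            if y_n * (w @ phi_n) <= 0:      # misclassified (or on the boundary)
                w += eta * y_n * phi_n      # move the boundary towards correcting it
                errors += 1
        if errors == 0:                     # every example strictly correct: converged
            break
    return w

def perceptron_predict(Phi, w):
    # Step activation f(a) = +1 if a >= 0 else -1
    return np.where(Phi @ w >= 0, 1, -1)

# Logical AND, which is linearly separable
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])
Phi = np.column_stack([np.ones(len(X)), X])   # phi_0 = 1
w = perceptron_train(Phi, y)
print(w, perceptron_predict(Phi, w))
```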

Neural Networks

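Feedforward Neural Network: each layer forms weighted sums of its inputs and passes them through an activation function. For a two-layer network with $D$ inputs, $M$ hidden units, and $K$ outputs:

$$a_j = \sum_{i=1}^{D} w_{ji}^{(1)} x_i + w_{j0}^{(1)}, \qquad z_j = h(a_j), \qquad a_k = \sum_{j=1}^{M} w_{kj}^{(2)} z_j + w_{k0}^{(2)}$$

The overall network function combines these stages. For sigmoidal output unit activation functions, it takes the form:

$$y_k(\mathbf{x}, \mathbf{w}) = \sigma\left(\sum_{j=1}^{M} w_{kj}^{(2)} \, h\left(\sum_{i=1}^{D} w_{ji}^{(1)} x_i + w_{j0}^{(1)}\right) + w_{k0}^{(2)}\right)$$

The bias parameters can be absorbed by adding an extra input $x_0 = 1$ and an extra hidden unit $z_0 = 1$:

$$y_k(\mathbf{x}, \mathbf{w}) = \sigma\left(\sum_{j=0}^{M} w_{kj}^{(2)} \, h\left(\sum_{i=0}^{D} w_{ji}^{(1)} x_i\right)\right)$$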

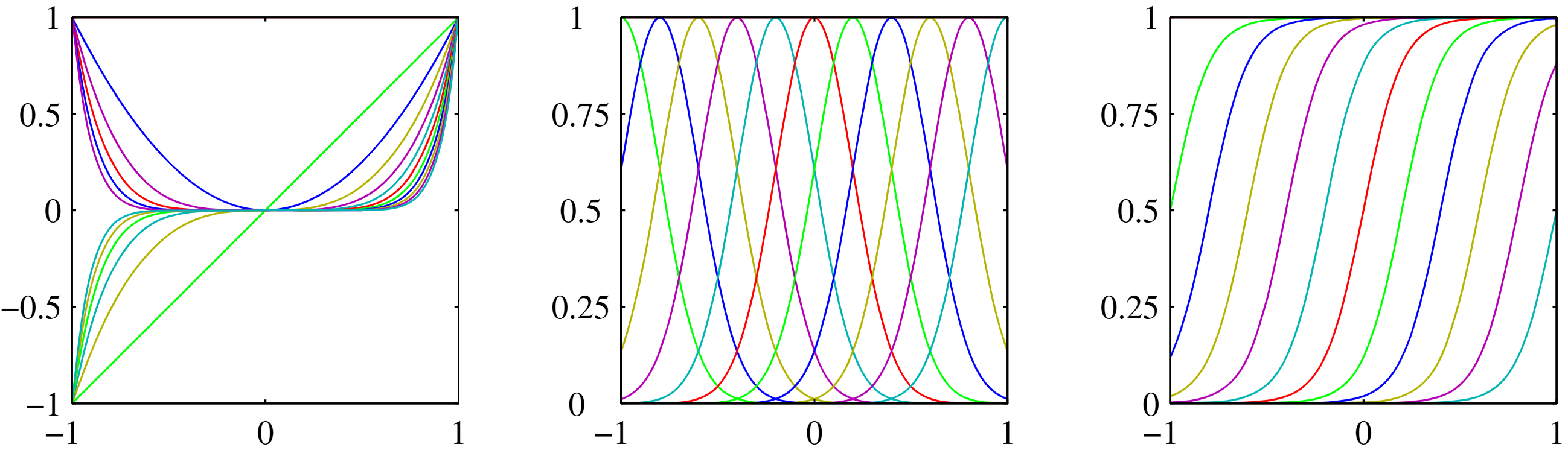

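Sigmoid Function: $\sigma(a) = \dfrac{1}{1 + e^{-a}}$

Range: $(0, 1)$

Derivative: $\sigma'(a) = \sigma(a)\,(1 - \sigma(a))$

Hyperbolic Tangent: $\tanh(a) = \dfrac{e^{a} - e^{-a}}{e^{a} + e^{-a}}$

Range: $(-1, 1)$

Derivative: $\tanh'(a) = 1 - \tanh^2(a)$

ReLU (Rectified Linear Unit): $\text{ReLU}(a) = \max(0, a)$

Range: $[0, \infty)$

Derivative: $1$ if $a > 0$ and $0$ if $a < 0$ (undefined at $a = 0$, where it is usually set to $0$ in practice)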
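The derivative formulas can be sanity-checked numerically. This sketch assumes NumPy and compares each analytical derivative with a central finite difference at a few points away from $a = 0$ (where ReLU is not differentiable); treating ReLU's derivative as 0 at $a = 0$ is a convention, not a requirement.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def sigmoid_prime(a):
    s = sigmoid(a)
    return s * (1.0 - s)

def tanh_prime(a):
    return 1.0 - np.tanh(a) ** 2

def relu(a):
    return np.maximum(0.0, a)

def relu_prime(a):
    # Convention: derivative taken as 0 at a == 0
    return (a > 0).astype(float)

def numerical_derivative(f, a, eps=1e-6):
    """Central finite difference approximation of f'(a)."""
    return (f(a + eps) - f(a - eps)) / (2.0 * eps)

a = np.array([-3.0, -0.5, 0.5, 3.0])   # avoid a = 0, where ReLU has a kink
for name, f, df in [("sigmoid", sigmoid, sigmoid_prime),
                    ("tanh", np.tanh, tanh_prime),
                    ("relu", relu, relu_prime)]:
    max_gap = np.max(np.abs(df(a) - numerical_derivative(f, a)))
    print(name, max_gap)
```

Note that $\sigma'(a)$ peaks at $0.25$ (at $a = 0$), which is relevant to the vanishing-gradient discussion in deep networks.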

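Training Process:

Given a training set of $N$ example input-output pairs $(\mathbf{x}_1, \mathbf{t}_1), (\mathbf{x}_2, \mathbf{t}_2), \ldots, (\mathbf{x}_N, \mathbf{t}_N)$, where each pair was generated by an unknown function $\mathbf{t} = f(\mathbf{x})$, we want to find a hypothesis $h$ that approximates the true function $f$. For a neural network, this means choosing the weights $\mathbf{w}$ that minimise an error function $E(\mathbf{w})$ over the training set.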

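Error Backpropagation Algorithm:

1. Forward Pass: Compute all activations $a_j$ and outputs $z_j = h(a_j)$ for an input vector $\mathbf{x}_n$.

2. Error Evaluation: Evaluate the error $\delta_k$ for all the output units using:

$$\delta_k = y_k - t_k$$

(this form holds for a sum-of-squares error with linear outputs and for cross-entropy with sigmoid or softmax outputs).

3. Backward Pass: Backpropagate the errors to obtain $\delta_j$ for each hidden unit in the network using:

$$\delta_j = h'(a_j) \sum_{k} w_{kj} \, \delta_k$$

4. Derivatives Evaluation: Evaluate the derivatives with respect to each parameter using:

$$\frac{\partial E_n}{\partial w_{ji}} = \delta_j \, z_i$$

where $z_i$ is the input carried by weight $w_{ji}$ (for the first layer, $z_i = x_i$).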
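The four steps above can be sketched for a two-layer network with absorbed biases, assuming NumPy, tanh hidden units, sigmoid outputs, and a cross-entropy error (so that $\delta_k = y_k - t_k$). The layer sizes and random weights are illustrative. A finite-difference check on one weight is a common way to confirm that a backpropagation implementation is correct.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def forward(x, W1, W2):
    """Two-layer network with absorbed biases (x_0 = 1 and z_0 = 1)."""
    x_aug = np.concatenate(([1.0], x))
    a_hidden = W1 @ x_aug                              # a_j
    z = np.concatenate(([1.0], np.tanh(a_hidden)))     # z_j = h(a_j), plus bias unit
    y = sigmoid(W2 @ z)                                # y_k
    return x_aug, a_hidden, z, y

def error(x, t, W1, W2):
    """Cross-entropy error E_n for independent sigmoid outputs."""
    y = forward(x, W1, W2)[3]
    return -np.sum(t * np.log(y) + (1 - t) * np.log(1 - y))

def backprop(x, t, W1, W2):
    x_aug, a_hidden, z, y = forward(x, W1, W2)         # 1. forward pass
    delta_k = y - t                                    # 2. output errors
    # 3. hidden errors: h'(a_j) * sum_k w_kj * delta_k (bias column of W2 skipped)
    delta_j = (1.0 - np.tanh(a_hidden) ** 2) * (W2[:, 1:].T @ delta_k)
    # 4. derivatives dE_n/dw_ji = delta_j * z_i, as outer products
    return np.outer(delta_j, x_aug), np.outer(delta_k, z)

rng = np.random.default_rng(0)
D, M, K = 3, 4, 2
W1 = rng.normal(scale=0.5, size=(M, D + 1))
W2 = rng.normal(scale=0.5, size=(K, M + 1))
x = rng.normal(size=D)
t = np.array([1.0, 0.0])
grad_W1, grad_W2 = backprop(x, t, W1, W2)

# Finite-difference check of one first-layer weight
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[1, 2] += eps
W1_minus[1, 2] -= eps
numeric = (error(x, t, W1_plus, W2) - error(x, t, W1_minus, W2)) / (2 * eps)
print(grad_W1[1, 2], numeric)
```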

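Gradient Descent Update Rule:

$$\mathbf{w}^{(\tau+1)} = \mathbf{w}^{(\tau)} - \eta \, \nabla E\left(\mathbf{w}^{(\tau)}\right)$$

Where $\tau$ is the iteration step, $\eta > 0$ is the learning rate, and the gradient $\nabla E$ is computed with backpropagation.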

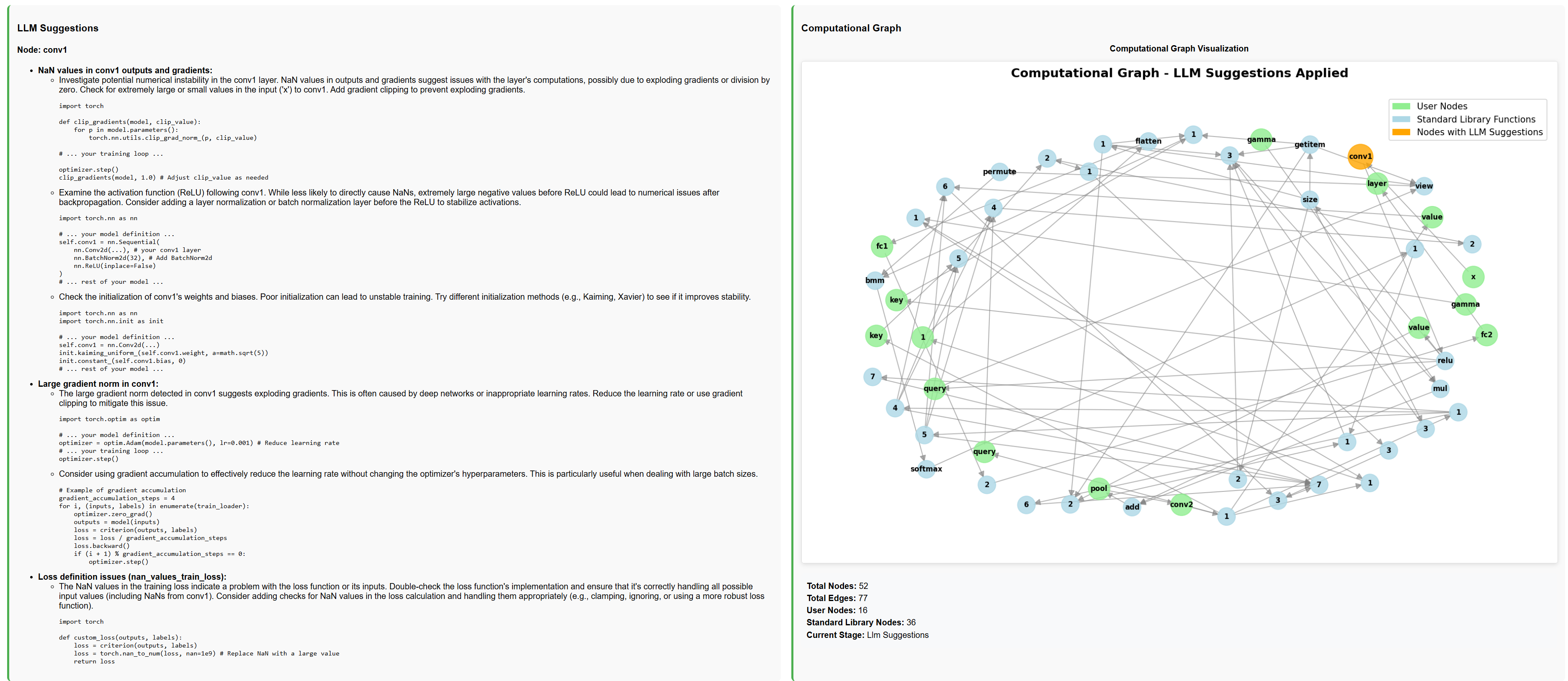

Deep Learning

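Vanishing and Exploding Gradients: In deep networks, the gradient reaching an early layer is a product of many per-layer factors, so it can become very small (vanishing) or very large (exploding) during backpropagation. Common remedies and related training techniques include:

- Proper Weight Initialization: Xavier/Glorot initialization, which scales the initial weights according to the number of inputs and outputs of each layer

- Batch Normalization: Normalize each layer's inputs over the current mini-batch

- Modern Optimizers: Adam, RMSprop with adaptive learning rates

- Regularisation: Dropout, L2, early stopping, and data augmentation techniques

- Network Architectures: Different architectures for different problems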
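A small numerical illustration of the vanishing case, assuming a hypothetical chain of scalar sigmoid units $z_l = \sigma(w \, z_{l-1})$ with $w = 1$. By the chain rule, $\partial z_L / \partial z_0$ is the product of $w \, \sigma'(a_l)$ over the layers, and since $\sigma'(a) \leq 0.25$ it shrinks at least as fast as $0.25^L$. Per-layer factors larger than 1 would instead make the product grow, which is the exploding case.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def chain_gradient(num_layers, weight=1.0, x=0.0):
    """dz_L/dz_0 for a chain of scalar units z_l = sigmoid(weight * z_{l-1}).
    By the chain rule this is the product of weight * sigmoid'(a_l) over all layers."""
    z, grad = x, 1.0
    for _ in range(num_layers):
        a = weight * z
        s = sigmoid(a)
        grad *= weight * s * (1.0 - s)   # sigmoid'(a) = s * (1 - s) <= 0.25
        z = s
    return grad

for L in (1, 5, 10, 20):
    print(L, chain_gradient(L))
```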

Reinforcement Learning

The RL framework is composed of:

- Agent: The learner and decision maker

- Environment: The world in which the agent operates

- State: Current situation of the environment

- Action: What the agent can do

- Reward: Feedback from the environment

The environment is stochastic, meaning that the outcomes of actions taken by the agent in each state are not deterministic.

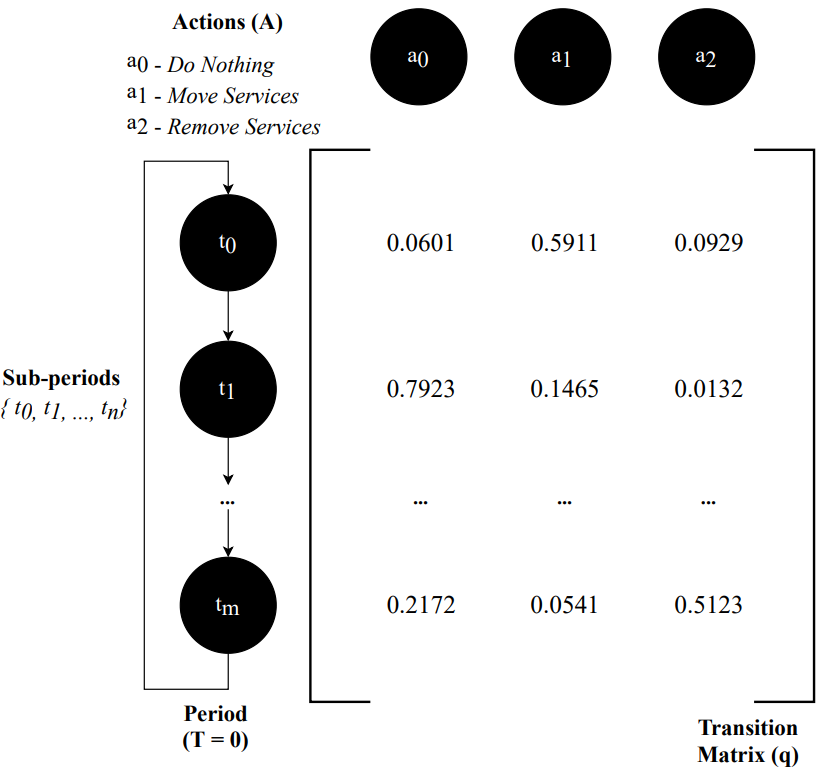

Markov Decision Process (MDP):

A mathematical framework for modelling sequential decision problems in fully observable, stochastic environments. The outcomes are partly random and partly under the control of a decision maker.

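An MDP is a 4-tuple:

$$(S, A, P, R)$$

Where:

- $S$ is the set of states

- $A$ is the set of actions

- $P(s' \mid s, a)$ is the transition model, the probability of reaching state $s'$ after taking action $a$ in state $s$

- $R(s, a, s')$ is the reward function, the reward received for that transition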
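Given the full 4-tuple, a model-based agent can compute optimal values directly. The sketch below runs value iteration on a hypothetical 2-state, 2-action MDP stored as NumPy arrays `P[s, a, s']` and `R[s, a, s']`. The discount factor `gamma = 0.9` is an added assumption, since the 4-tuple above has none; the greedy policy is read off the final Q-values.

```python
import numpy as np

# Hypothetical MDP: action 0 = stay, action 1 = try to switch state (succeeds with prob. 0.8)
# P[s, a, s'] = probability of reaching s' after taking action a in state s
P = np.array([
    [[1.0, 0.0], [0.2, 0.8]],   # from state 0
    [[0.0, 1.0], [0.8, 0.2]],   # from state 1
])
# R[s, a, s'] = reward for the transition: +1 whenever the agent lands in state 1
R = np.zeros((2, 2, 2))
R[:, :, 1] = 1.0
gamma = 0.9   # discount factor (assumption, not part of the 4-tuple)

def value_iteration(P, R, gamma, tol=1e-10):
    V = np.zeros(P.shape[0])
    while True:
        # Q[s, a] = sum over s' of P(s'|s,a) * (R(s,a,s') + gamma * V(s'))
        Q = np.sum(P * (R + gamma * V), axis=2)
        V_new = Q.max(axis=1)                 # Bellman optimality update
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, Q.argmax(axis=1)    # values and greedy policy
        V = V_new

V, policy = value_iteration(P, R, gamma)
print(V, policy)   # policy: switch in state 0, stay in state 1
```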

Model-Based RL Agent:

- Knows the transition model and reward function

- Can simulate outcomes before taking actions

- Examples: Value Iteration, Policy Iteration

Model-Free RL Agent:

- Does not know the transition model or reward function

- Cannot simulate outcomes, so it learns from experience gathered by acting in the environment

- Examples: Q-Learning, DQN
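A model-free agent only sees sampled transitions. The sketch below runs tabular Q-learning with an epsilon-greedy policy against a hypothetical 2-state environment (stay or try to switch, +1 for landing in state 1) that is exposed only through a `step()` simulator; the agent never reads `P`. The learning rate, discount factor, exploration rate, and number of steps are illustrative assumptions.

```python
import numpy as np

# Hidden environment dynamics: P[s, a, s'] (the agent never reads this directly)
P = np.array([[[1.0, 0.0], [0.2, 0.8]],
              [[0.0, 1.0], [0.8, 0.2]]])

def step(state, action, rng):
    """Environment simulator: returns only (next_state, reward)."""
    next_state = int(rng.choice(2, p=P[state, action]))
    reward = 1.0 if next_state == 1 else 0.0
    return next_state, reward

def q_learning(steps=20_000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((2, 2))
    state = 0
    for _ in range(steps):
        # Epsilon-greedy action selection
        if rng.random() < epsilon:
            action = int(rng.integers(2))
        else:
            action = int(np.argmax(Q[state]))
        next_state, reward = step(state, action, rng)
        # Q-learning update: move Q(s, a) towards r + gamma * max_a' Q(s', a')
        td_target = reward + gamma * np.max(Q[next_state])
        Q[state, action] += alpha * (td_target - Q[state, action])
        state = next_state
    return Q

Q = q_learning()
policy = Q.argmax(axis=1)
print(Q, policy)
```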

- Policy Iteration (value function): model-based, with guaranteed convergence for finite, discrete problems.

- Q-Value (Q-function): model-free and simple for small, discrete problems.

- DQN (neural network approximating the Q-function): model-free, suited to complex problems with large or continuous state spaces.

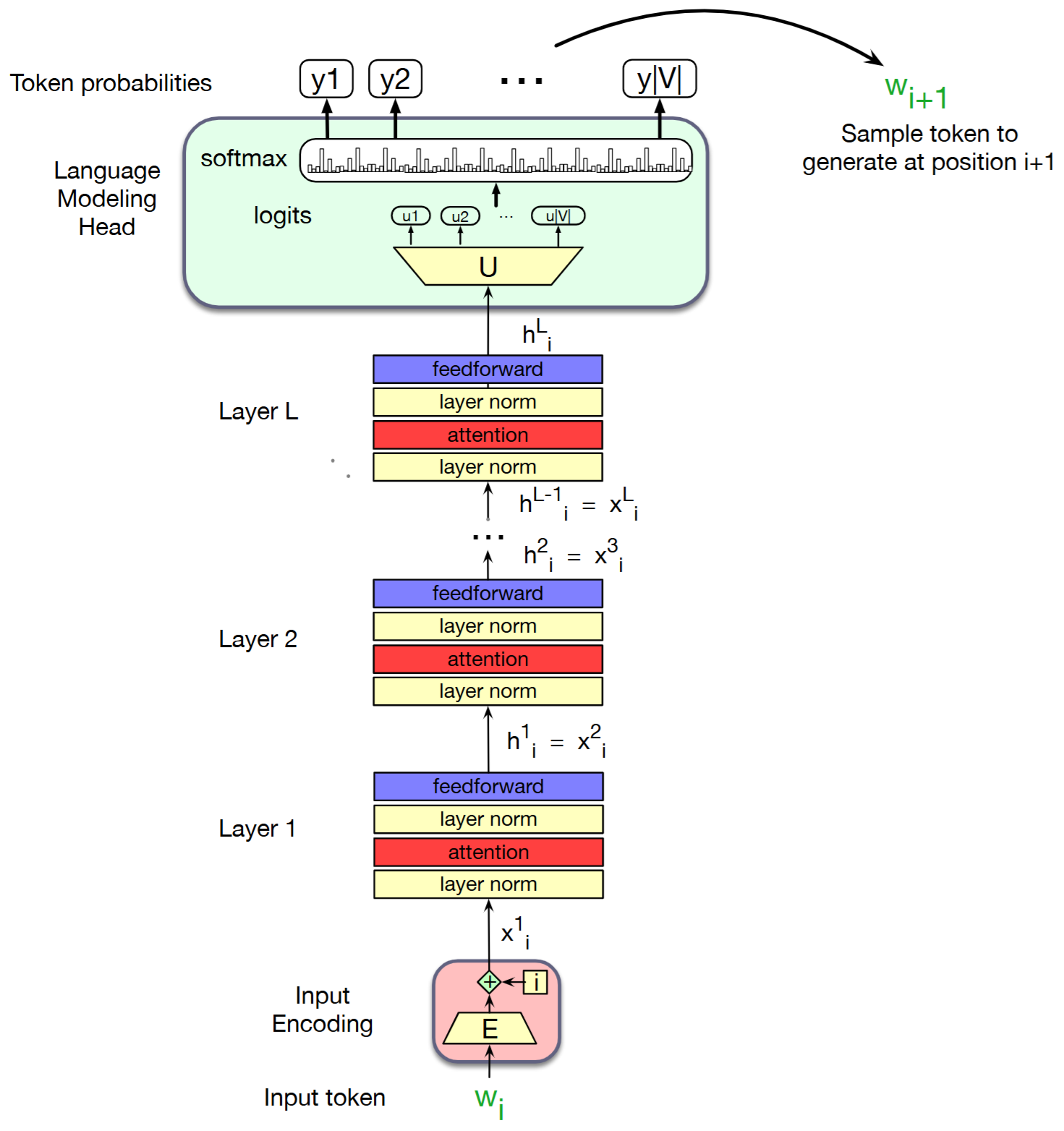

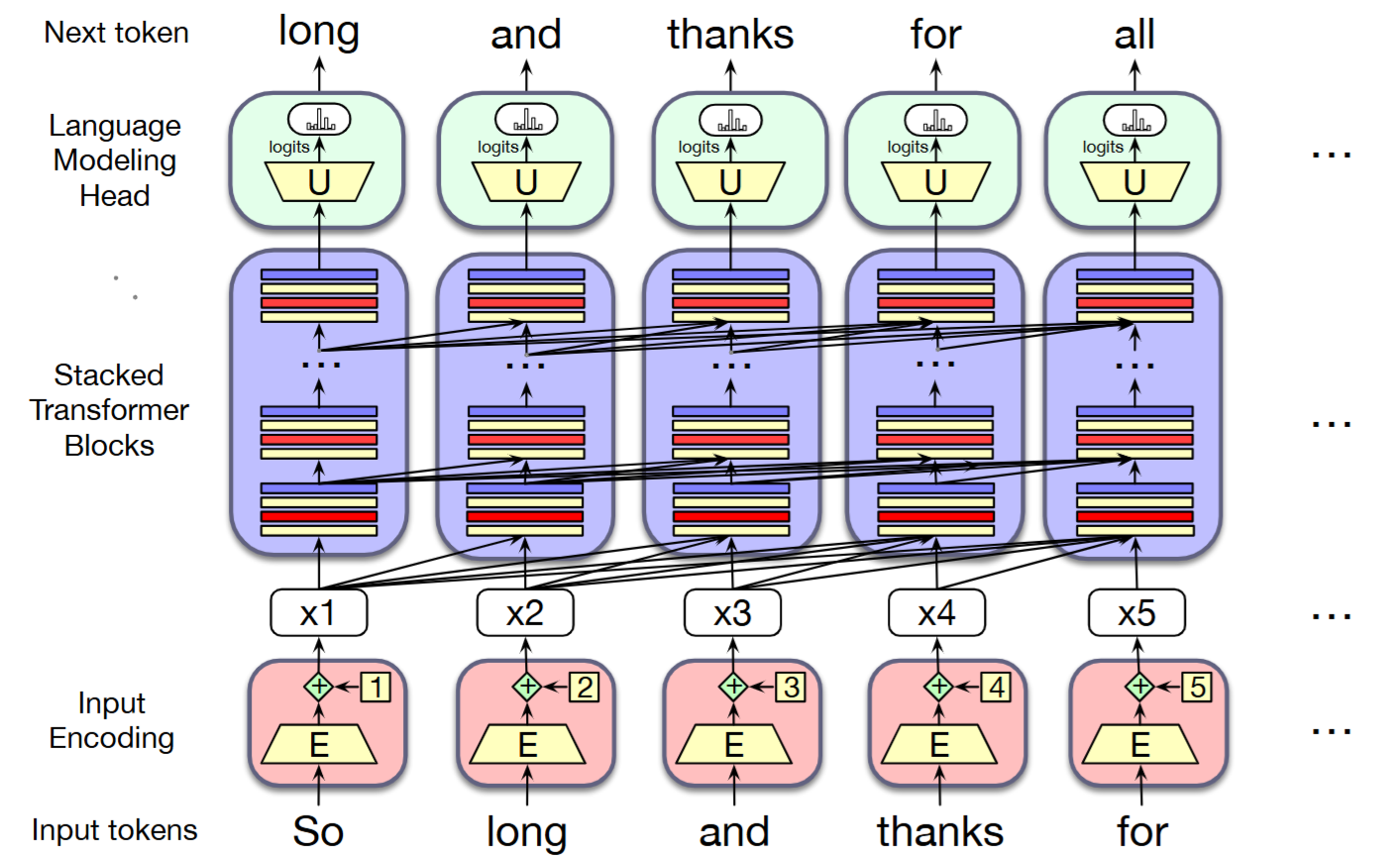

The Transformer Architecture

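The main idea is to pay attention to the context of each word in a sentence when modelling language. For example, if the context is "Thanks for all the" and we want to know how likely the next word is "fish":

$$P(\text{fish} \mid \text{Thanks for all the})$$

We want to discover the probability distribution over a vocabulary $V$:

$$P(w_t \mid w_1, \ldots, w_{t-1}) \quad \text{for every } w_t \in V$$

where $w_1, \ldots, w_{t-1}$ are the preceding words (the context).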

The transformer architecture solves this problem by:

- Tokenisation: Convert sentence into tokens.

- Input and Positional Embedding: Map each token to an embedding vector and add positional information so that the model can use word order.

- Self-Attention: Determine the relevance of each word to others in the sequence.

- Feed-Forward Neural Network: Pass the attention outputs through a feed-forward neural network to consolidate learnt patterns.

- Residual Connections and Layer Normalisation: Apply residual connections and layer normalisation to stabilise and improve training.

- Output Layer: Use a linear layer followed by a softmax function to generate the final output probabilities.
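The self-attention step can be sketched as single-head scaled dot-product attention, $\text{softmax}(QK^\top / \sqrt{d_k})\,V$. This assumes NumPy, random (untrained) projection matrices `W_q`, `W_k`, `W_v`, and treats "Thanks for all the" as four tokens for illustration. The optional causal mask, which stops each position from attending to later ones, is what next-word prediction requires.

```python
import numpy as np

def softmax(scores, axis=-1):
    # Subtract the row maximum for numerical stability
    scores = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(scores)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v, causal=False):
    """Single-head scaled dot-product self-attention.
    X: (seq_len, d_model) token embeddings with positional information already added."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])      # relevance of each token to every other
    if causal:
        # Each position may attend only to itself and earlier positions
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)           # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8   # e.g. the tokens "Thanks", "for", "all", "the"
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.sum(axis=1))
```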

Large Language Models (LLMs)

- GPT-3: Known for generating human-like text, it can perform tasks such as translation, question answering, and text completion.

- BERT: Excels in understanding the context of words in a sentence, making it ideal for tasks like sentiment analysis and named entity recognition.

- T5 (Text-to-Text Transfer Transformer): Converts all NLP tasks into a text-to-text format, enabling it to handle tasks like summarisation and translation.

- RoBERTa: An optimised version of BERT, it improves performance on tasks like text classification and language inference.

- ...

Fine-tuning a pre-trained LLM is similar to neural network training:

- Data Collection: Gather a large and relevant dataset for the specific domain or task.

- Pre-processing: Clean and pre-process the data to ensure it is in a suitable format for training.

- Model Selection: Choose a pre-trained LLM that is most suitable for the task at hand.

- Supervised Learning: Prompt engineering, error calculation, and adjusting weights using gradient descent and backpropagation.

- Evaluation: Assess the performance of the fine-tuned model using appropriate metrics and validation datasets.

- Deployment: Deploy the fine-tuned model for use in real-world applications.

Retrieval-augmented generation (RAG) combines an LLM with approaches from symbolic AI and databases:

- Data Collection: Gather a knowledge base that the RAG system can query.

- Pre-processing: Organise the knowledge base to ensure efficient retrieval and LLM integration.

- Model Selection: Choose a pre-trained LLM that can integrate with the retrieval system.

- Retrieval Integration: For each query, retrieve relevant entries from the knowledge base and give them to the LLM as context for its response.

- Evaluation: Assess the performance of the RAG system by using appropriate metrics.

- Deployment: Deploy the RAG system for real-time applications, ensuring it can access and retrieve information efficiently.
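The retrieval-integration step can be sketched without any external services. This minimal example assumes a tiny hypothetical in-memory knowledge base and ranks entries by bag-of-words cosine similarity, a simple stand-in for the embedding search a production RAG system would typically use. It only builds the augmented prompt; the call to the chosen LLM is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice a document store or vector database
KNOWLEDGE_BASE = [
    "Edge servers are located close to end users and have limited resources.",
    "Ant colony optimisation searches for good service placements using pheromone trails.",
    "MLOps automates training, deployment, and monitoring of ML models.",
]

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine_similarity(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Augment the user query with the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"

prompt = build_prompt("Where are edge servers located?", KNOWLEDGE_BASE)
print(prompt)   # this prompt would then be sent to the chosen LLM
```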

Typical prompt-engineering workflow:

- Task definition: Specify what output format and style you need.

- Baseline prompt: Write a clear instruction (zero-shot) or add 1-5 examples (few-shot).

- Iterate and test: Evaluate outputs, add system messages, or reorder examples to reduce errors and bias.

- Guardrails: Include refusals, safety clauses, or value alignment statements.

- Automation: Use prompt templates or tools like LangChain/LlamaIndex to inject dynamic context.

- Deployment: Store the prompt with version control and monitor performance over time.
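The automation step can be illustrated with a plain-Python prompt template, used here as a stand-in for tools such as LangChain or LlamaIndex (their APIs are not shown). The sketch assumes a hypothetical sentiment task, a hypothetical version tag `PROMPT_VERSION` that would be committed to version control, and few-shot examples injected at run time with `string.Template`.

```python
from string import Template

# Hypothetical versioned prompt template (store alongside the code under version control)
PROMPT_VERSION = "sentiment-v1"
FEW_SHOT_TEMPLATE = Template(
    "You are a sentiment classifier. Reply with exactly one word: "
    "Positive, Negative, or Neutral.\n"
    "$examples\n"
    "Text: $text\n"
    "Sentiment:"
)

def render_prompt(text, examples):
    """Inject dynamic context (few-shot examples and the user text) into the template."""
    shots = "\n".join(f"Text: {t}\nSentiment: {label}" for t, label in examples)
    return FEW_SHOT_TEMPLATE.substitute(examples=shots, text=text)

examples = [("I love this phone.", "Positive"), ("The battery died in an hour.", "Negative")]
print(render_prompt("It arrived on Tuesday.", examples))
```

Keeping the template separate from the code that fills it makes it easier to iterate on wording, reorder examples, and compare versions over time.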

The Data Science Process

Machine Learning Pipeline

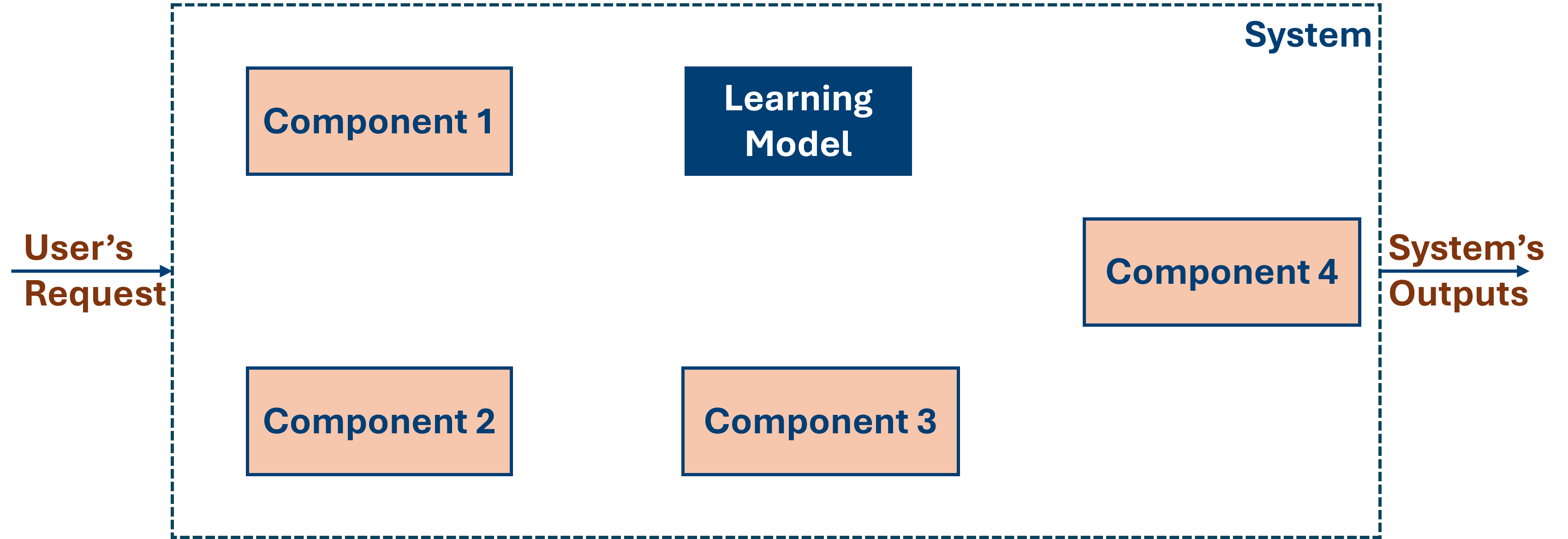

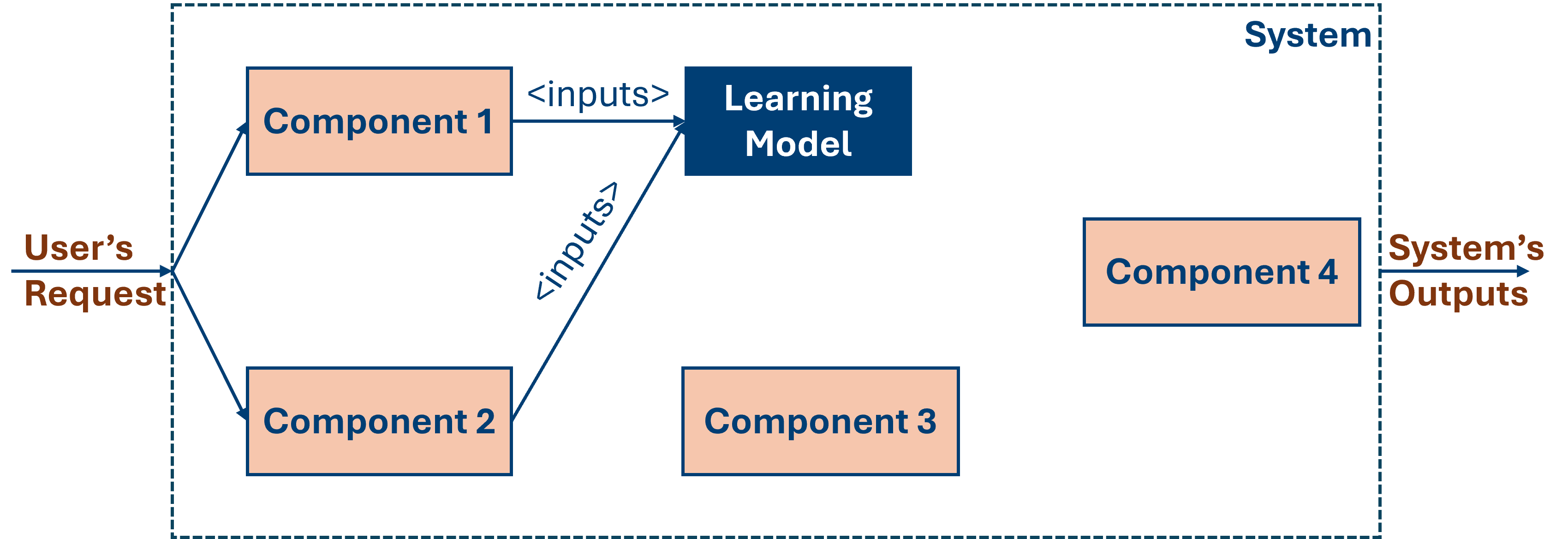

AI Systems

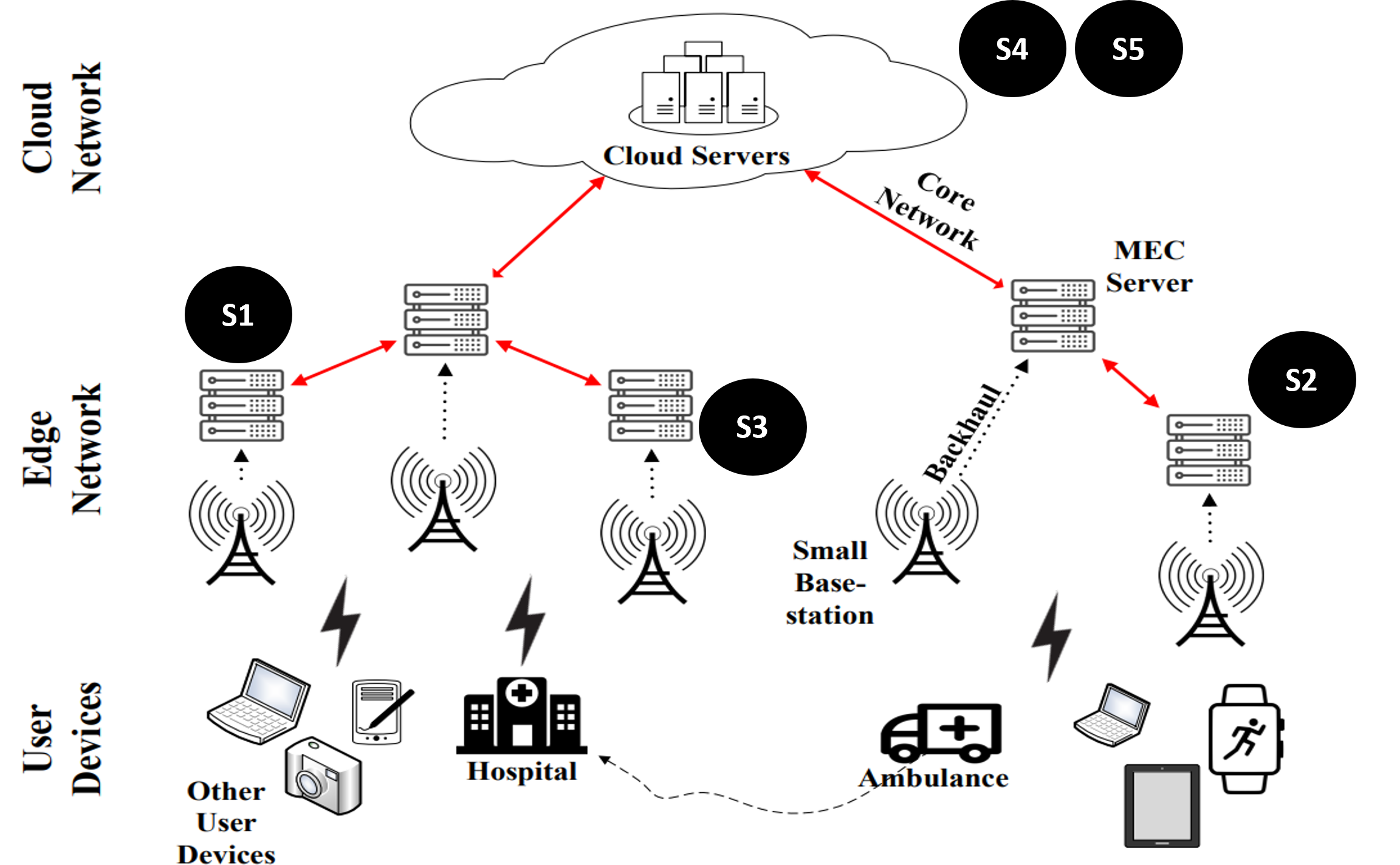

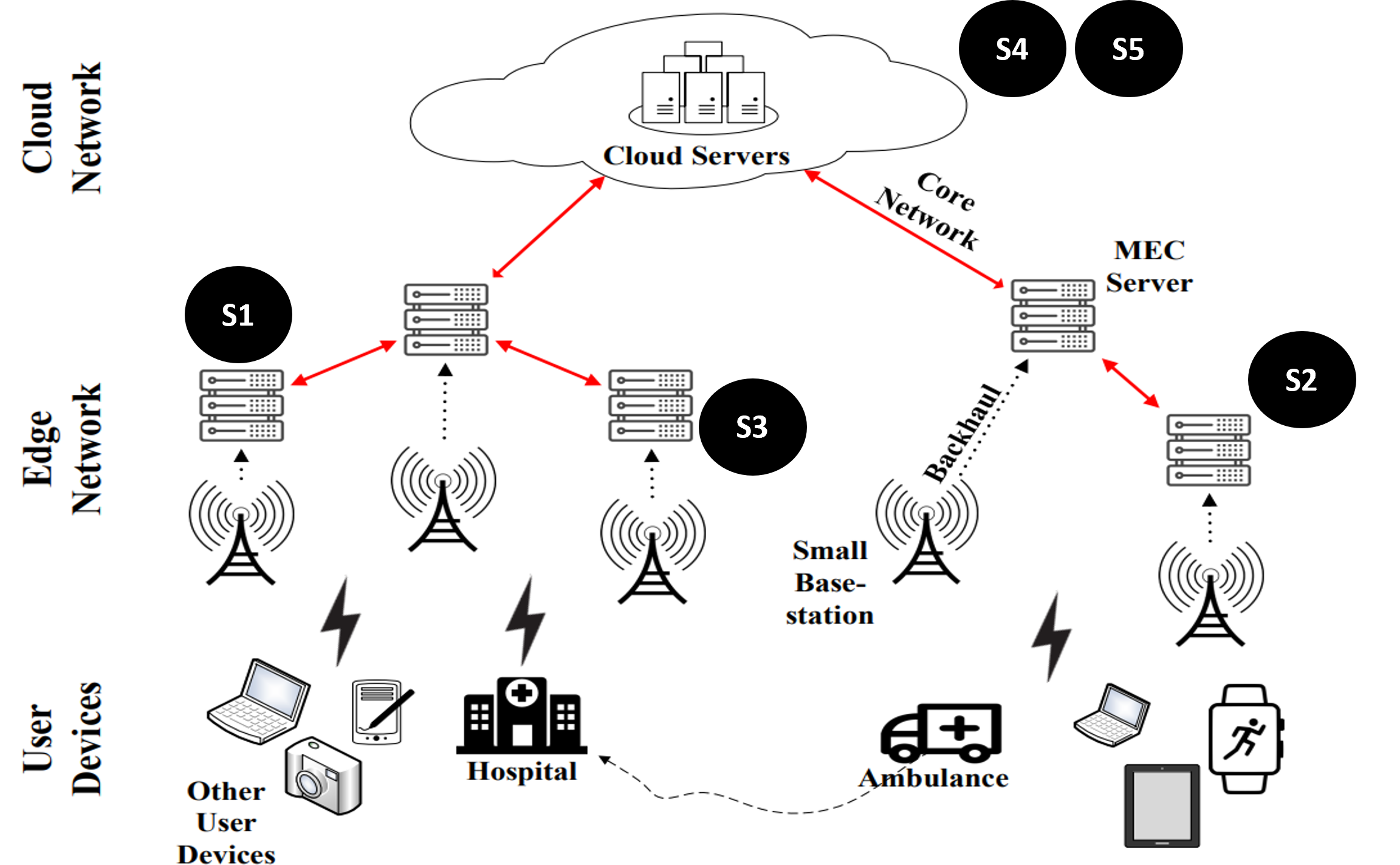

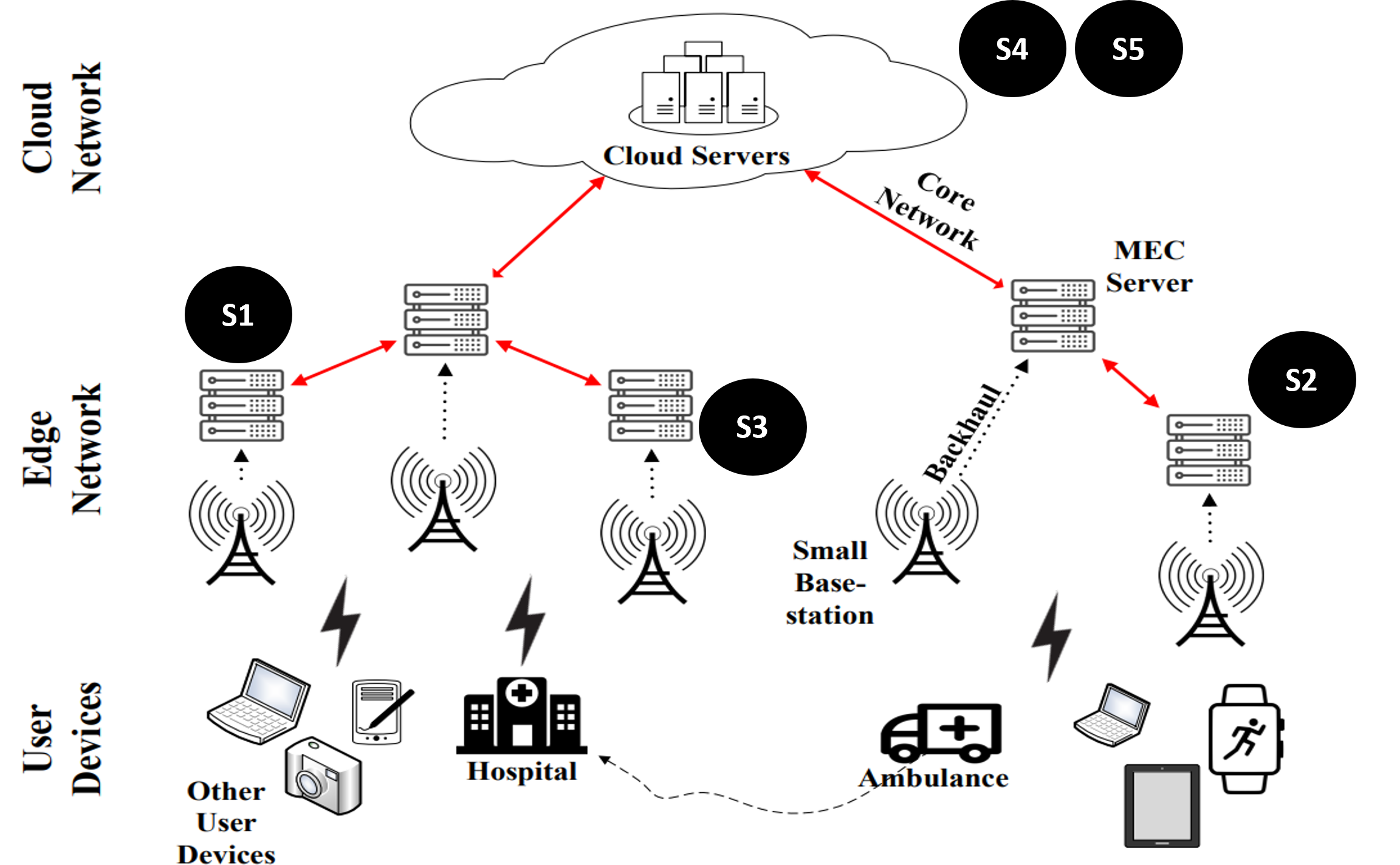

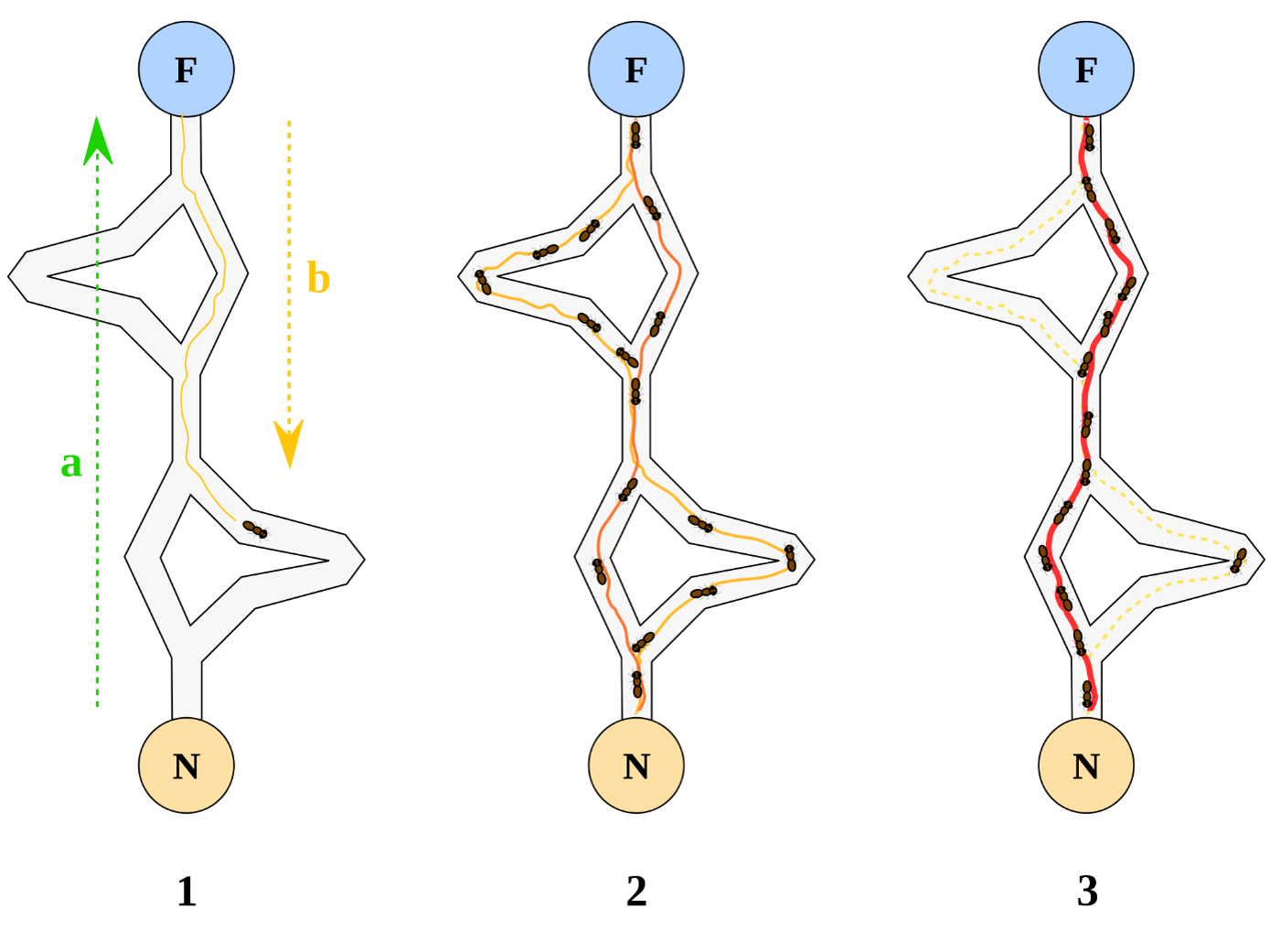

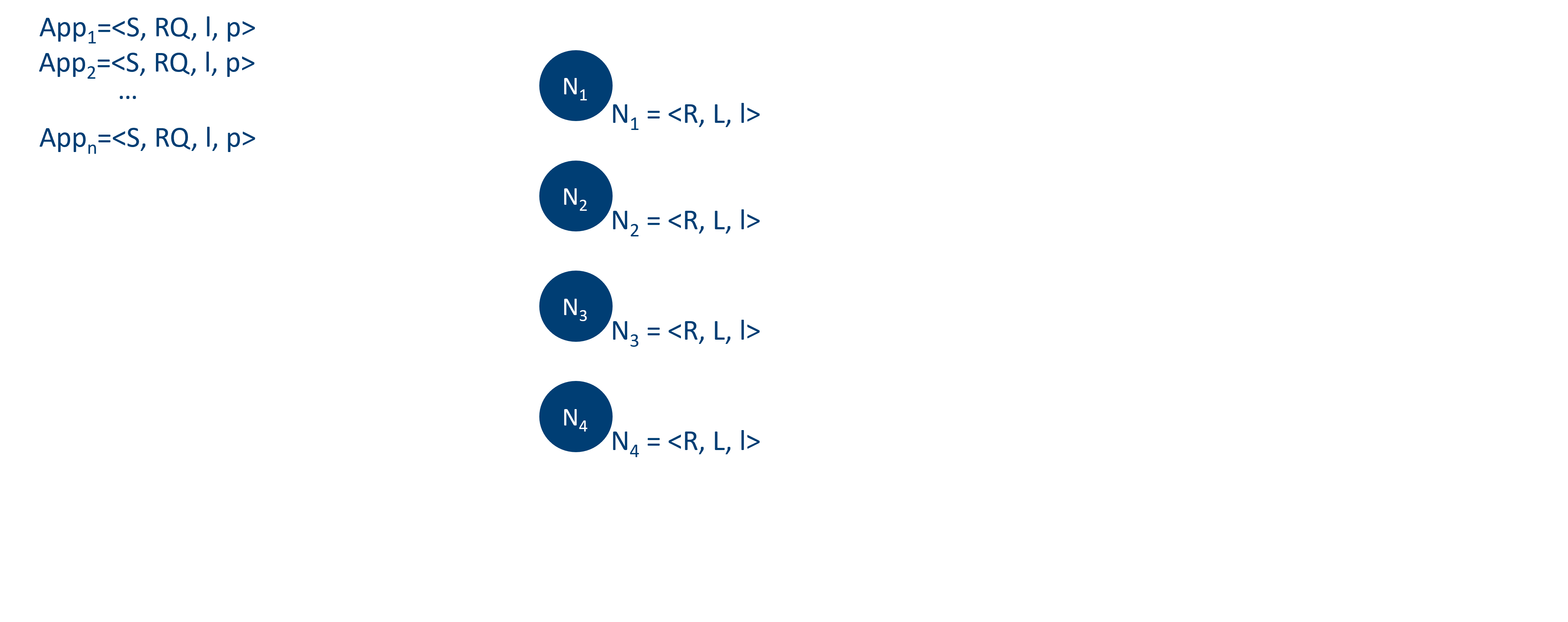

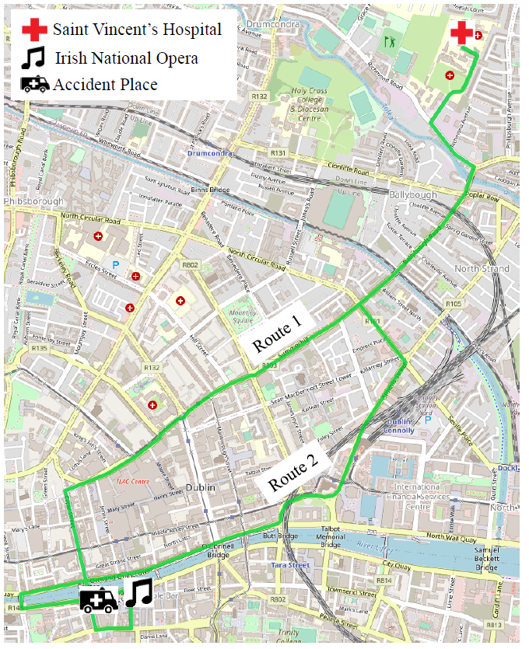

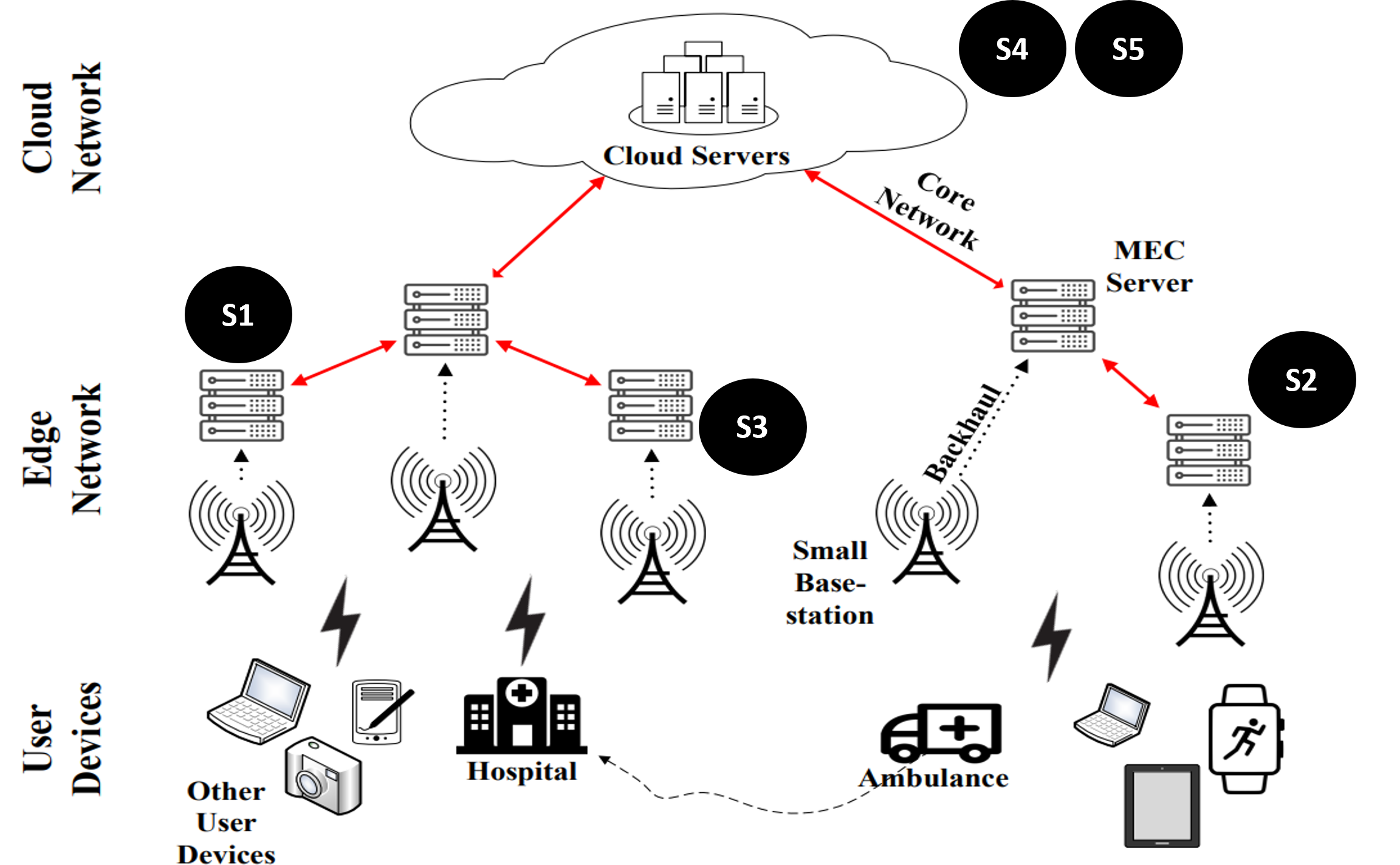

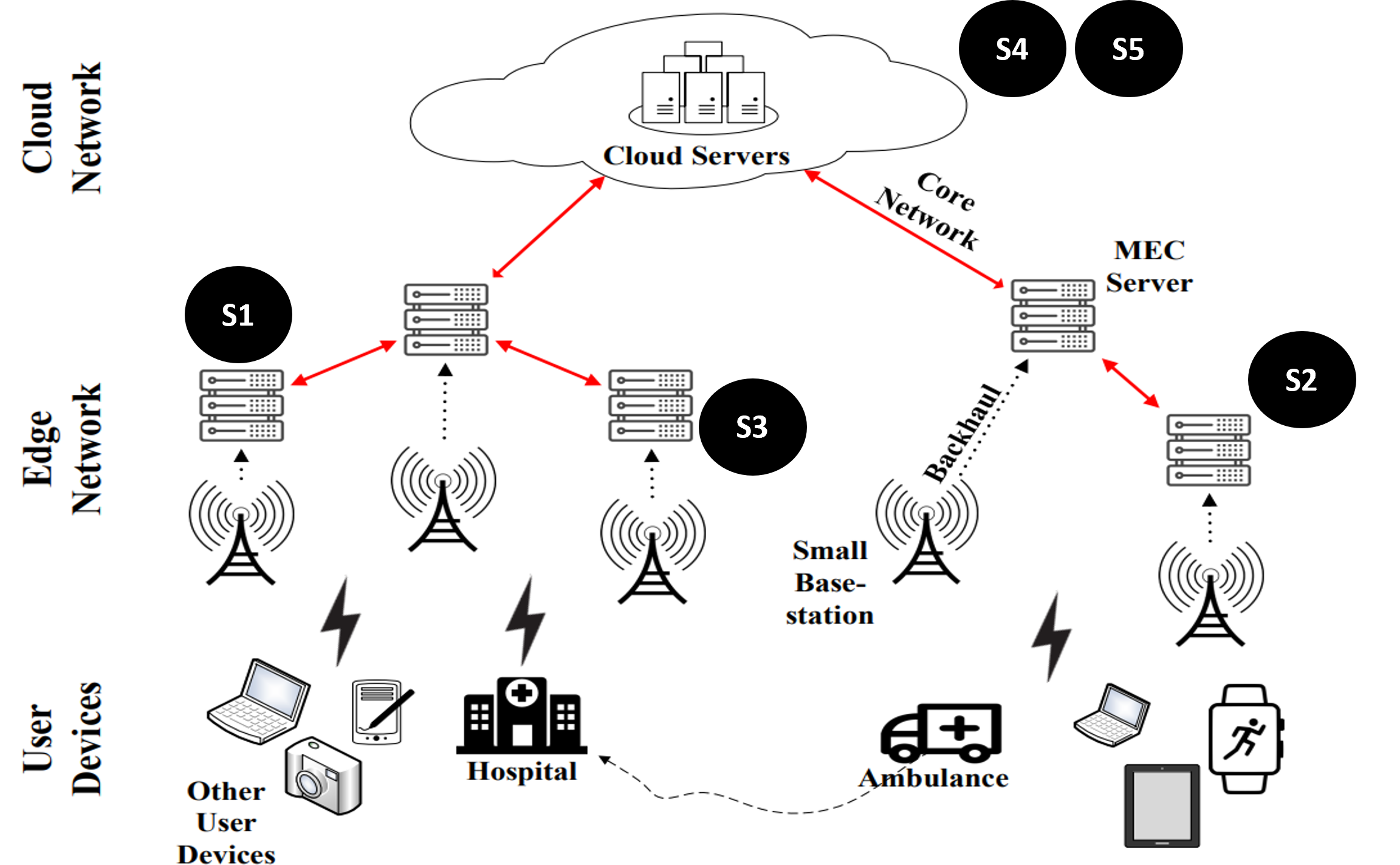

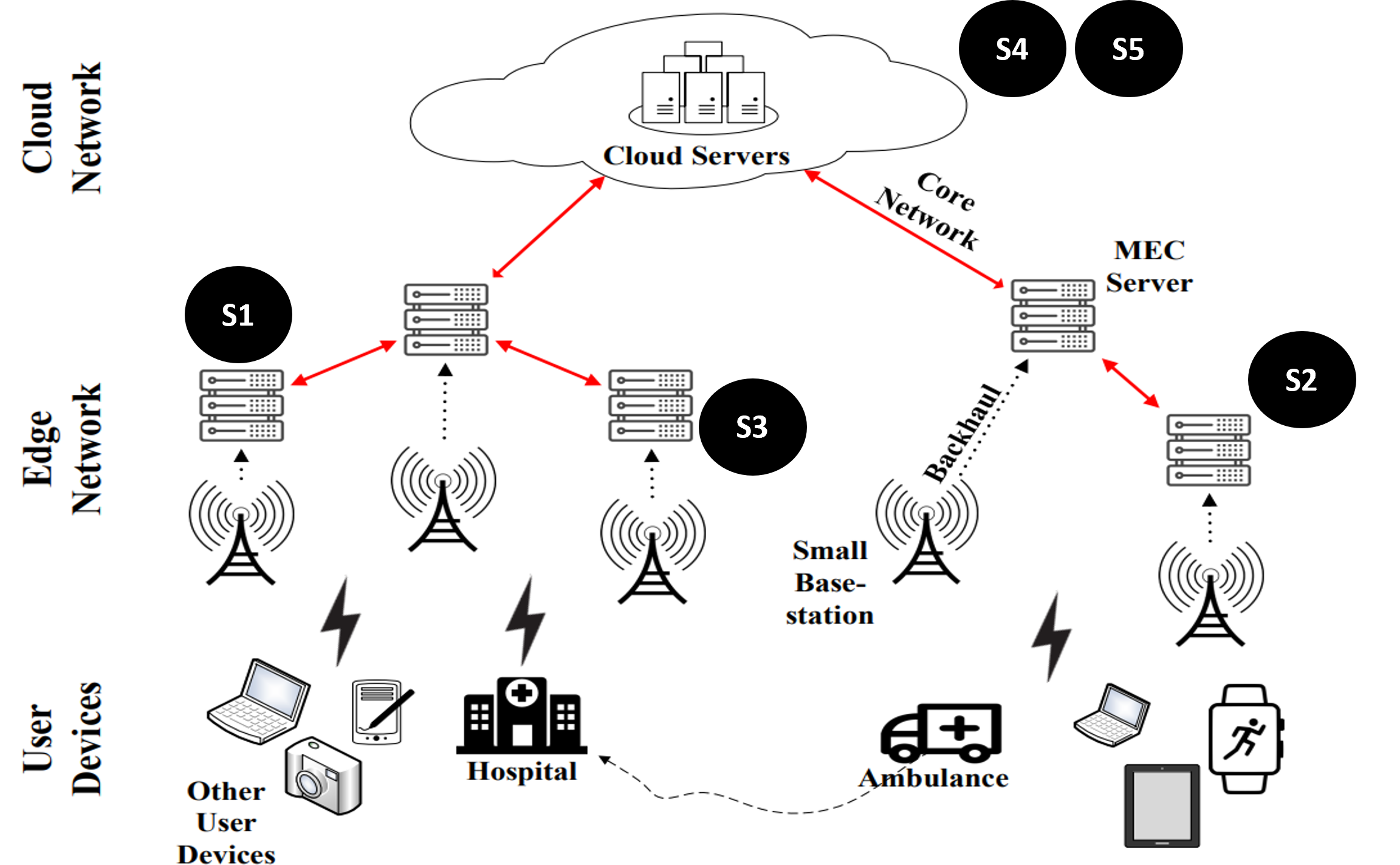

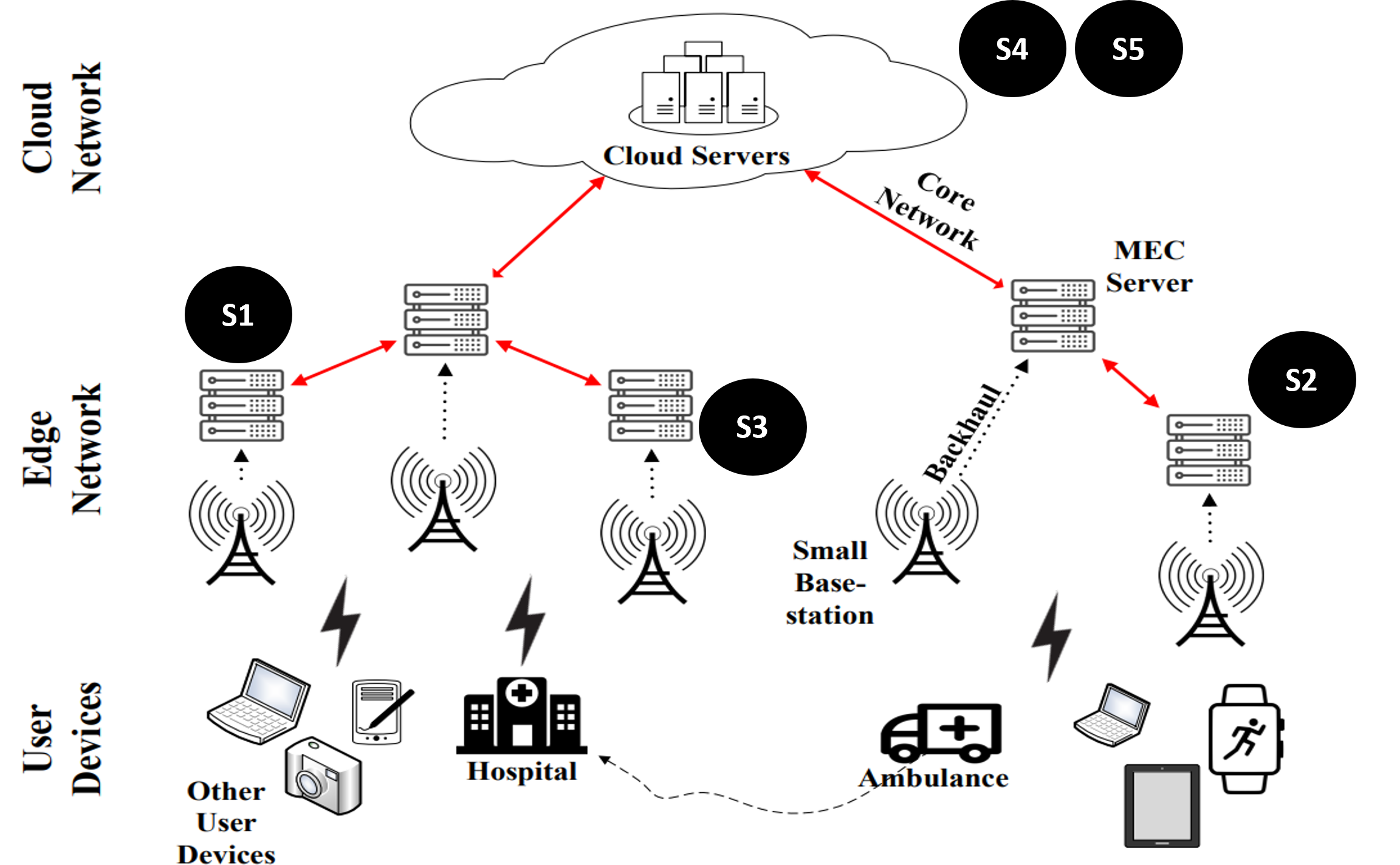

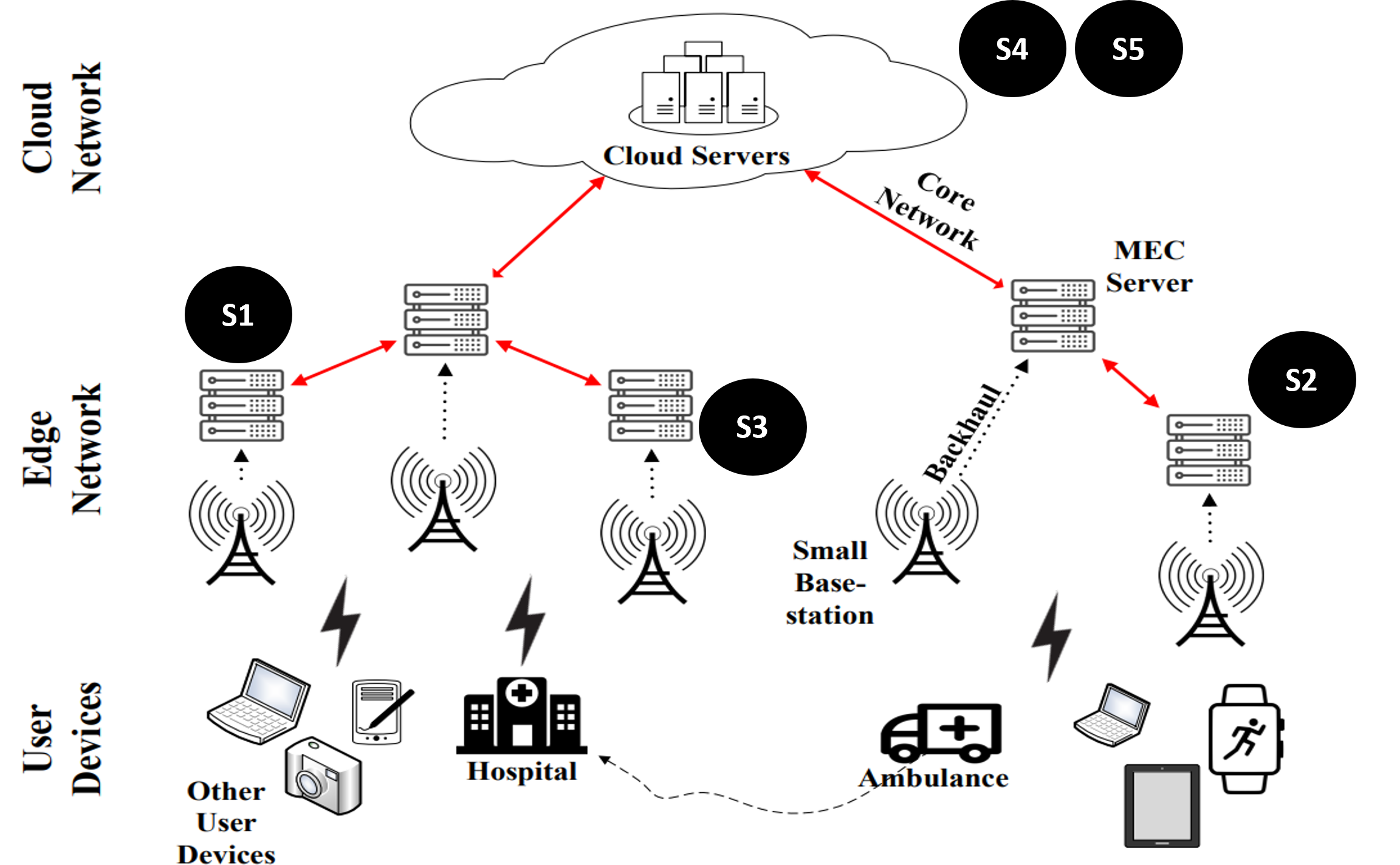

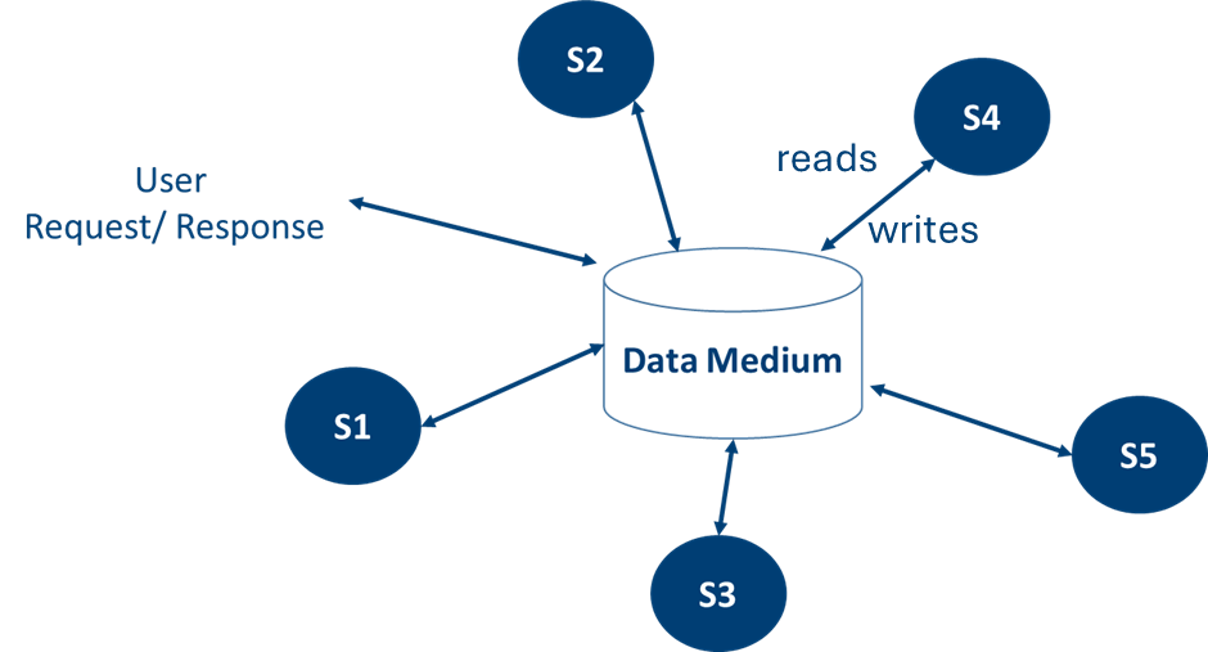

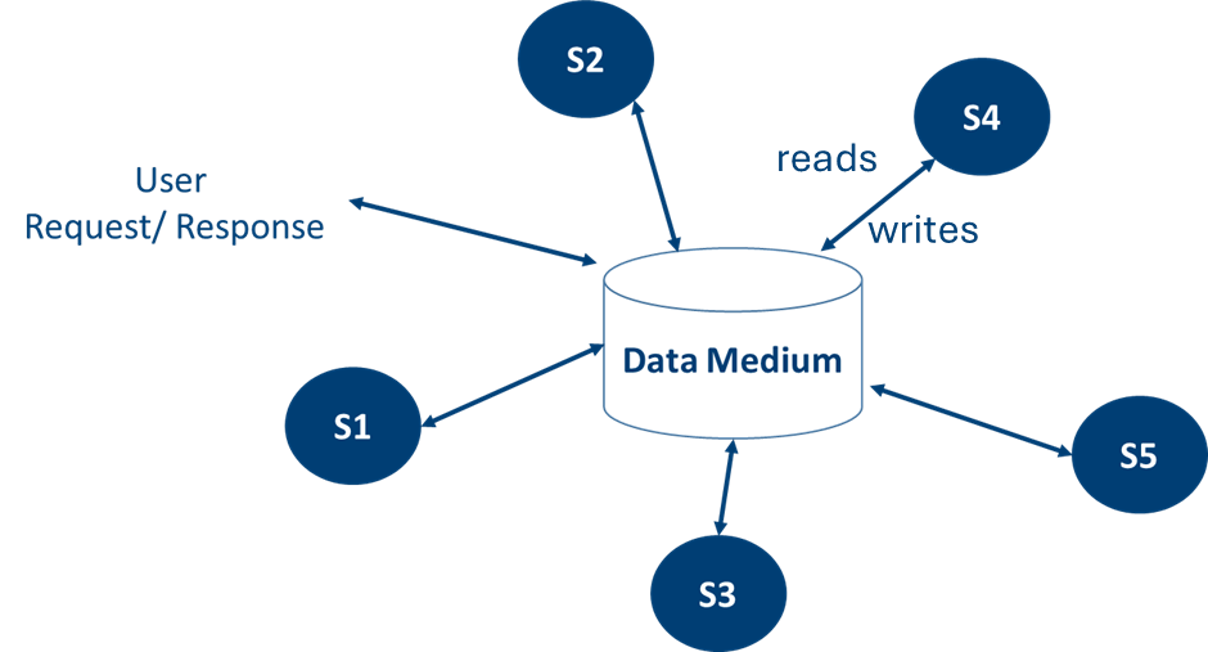

Dynamic Service Placement in Edge Computing

- Edge servers are located close to end users, allowing for local data processing.

- Services run on edge servers, which have limited resources.

- The challenge is to determine the optimal allocation of services to edge servers that minimizes latency while respecting resource constraints.

- This challenge is referred to as the Service Placement Problem.

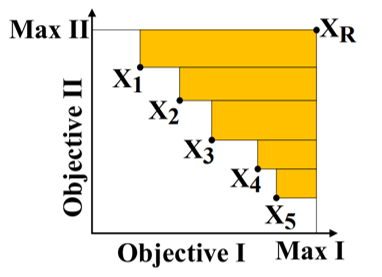

Objective Functions:

Subject to:

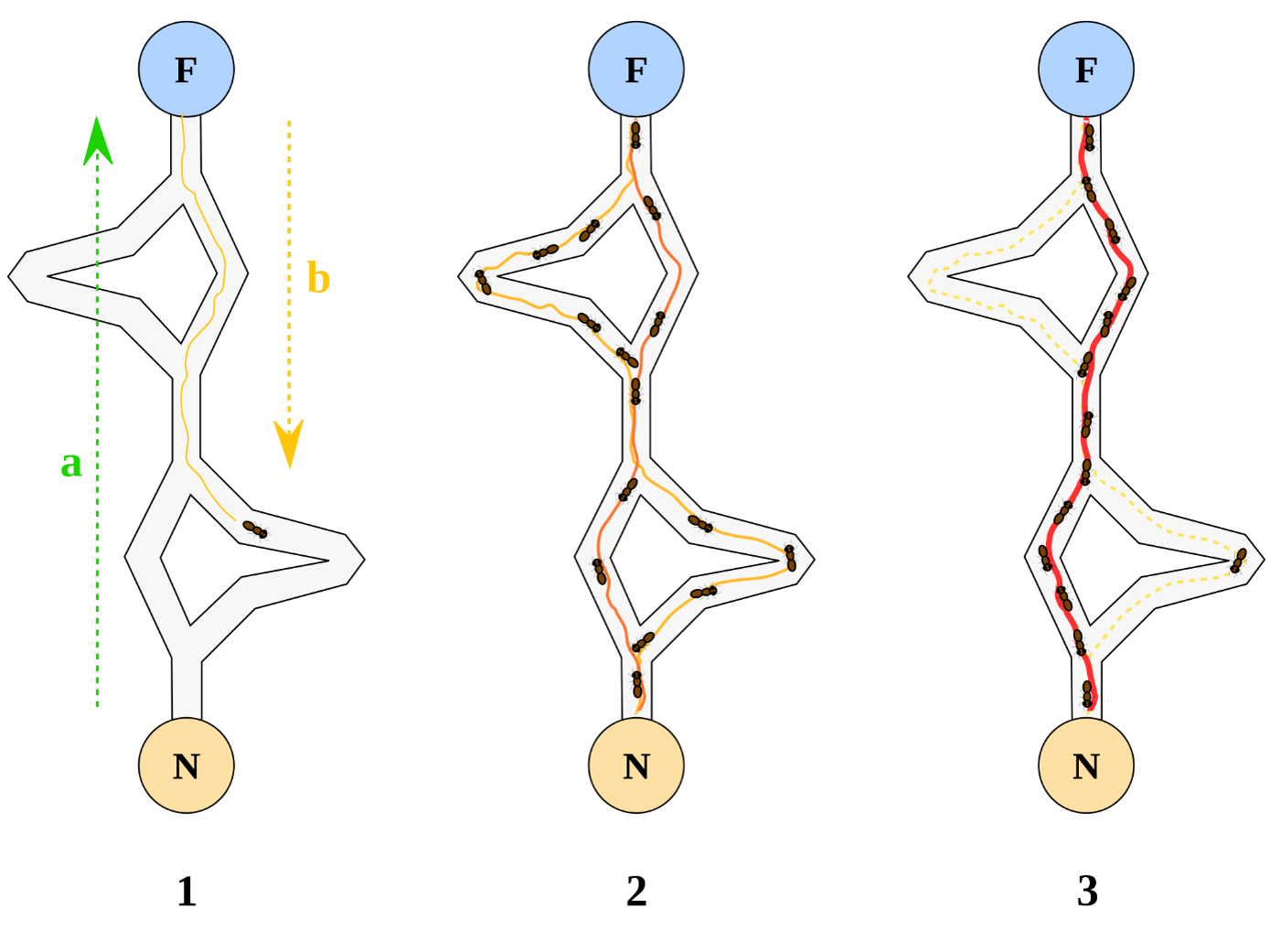

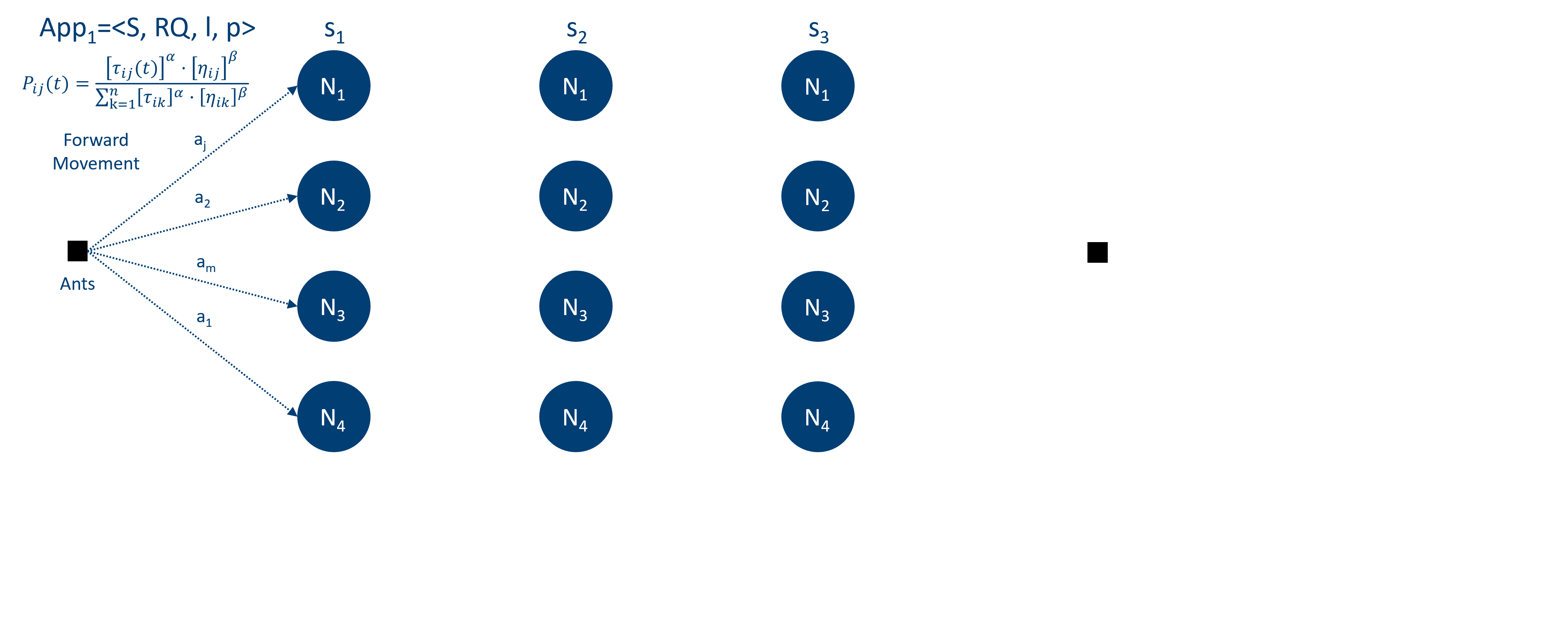

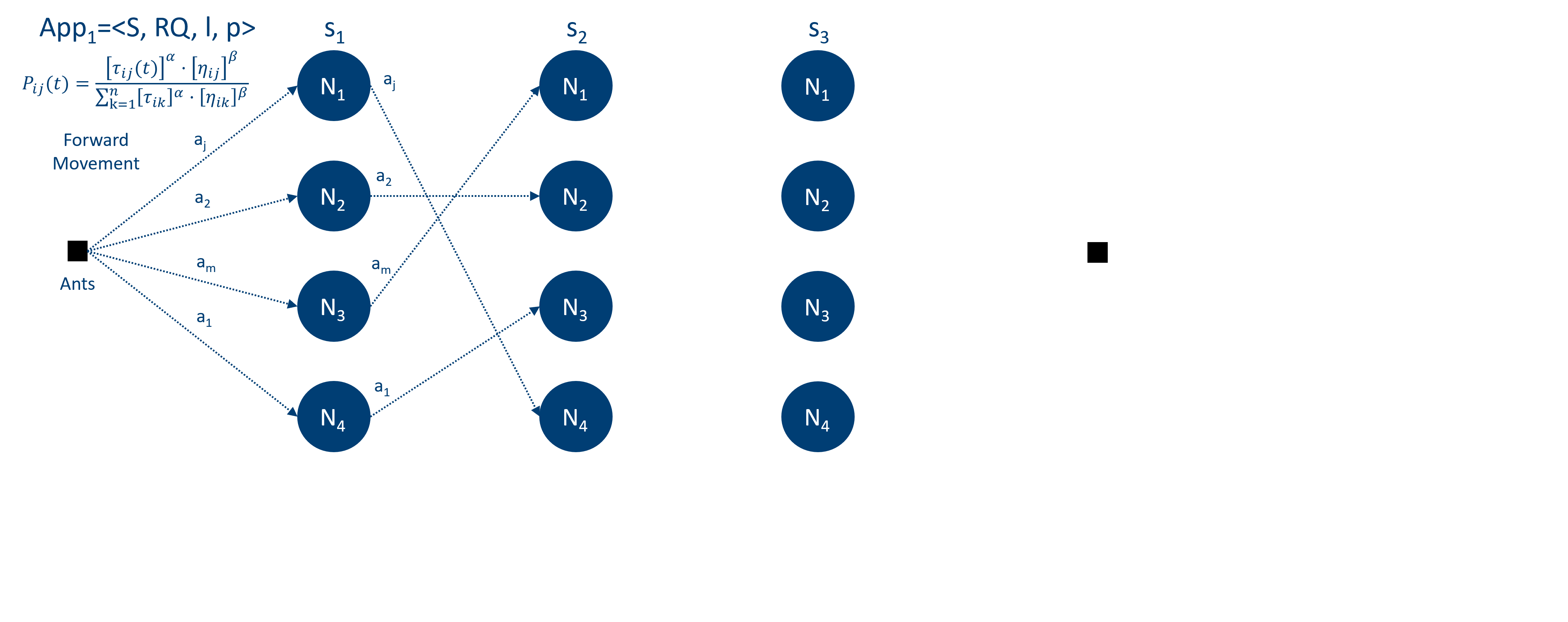

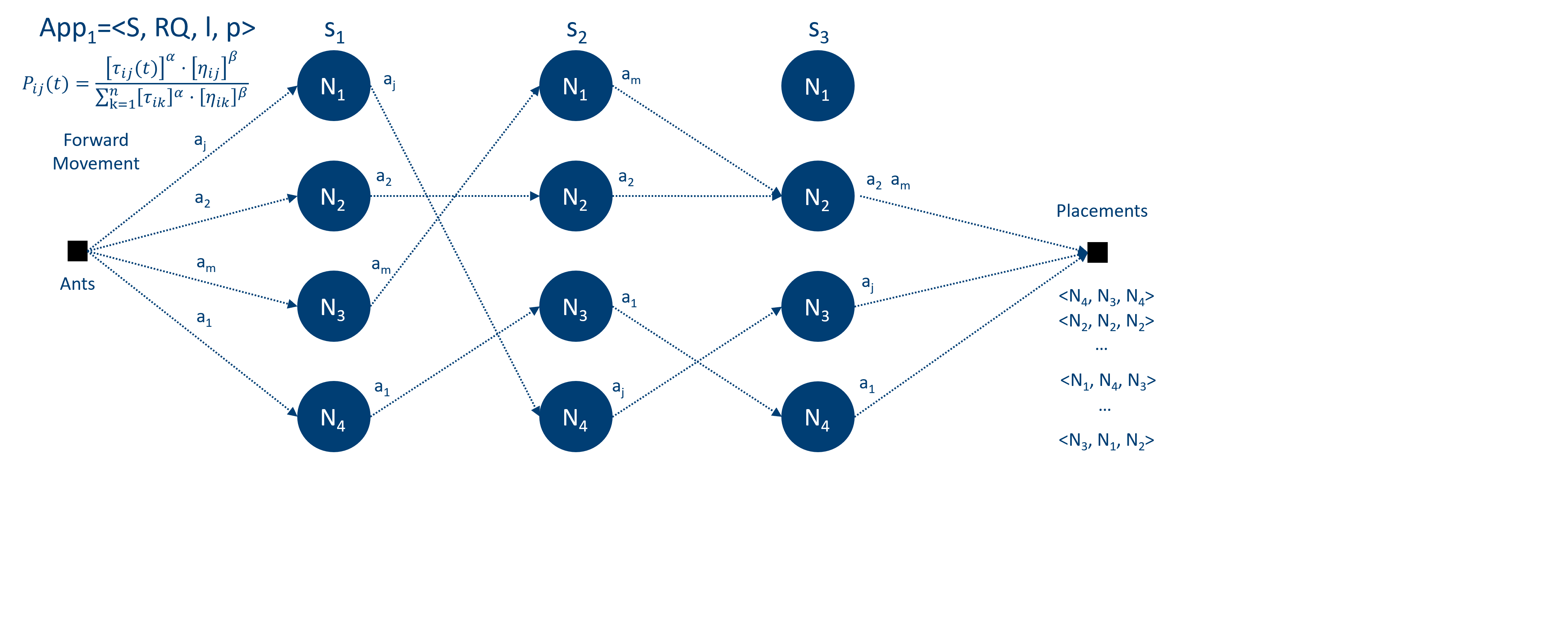

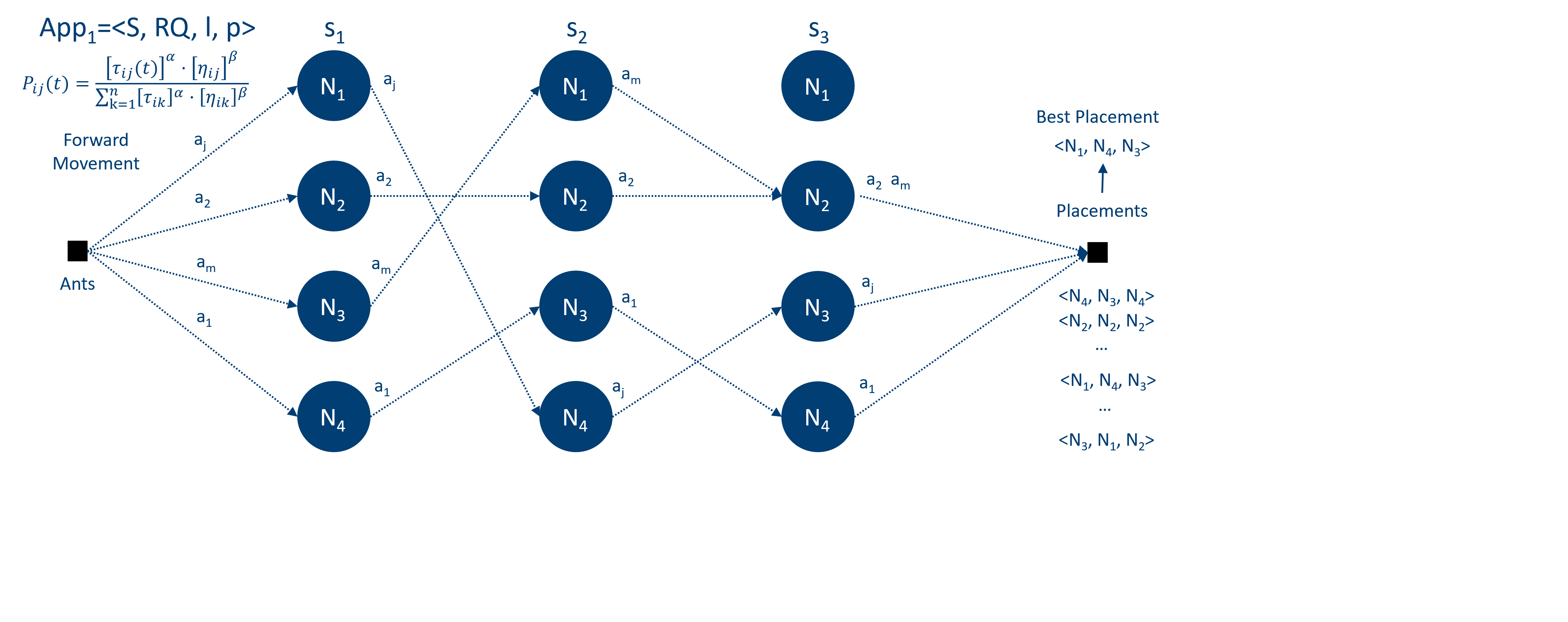

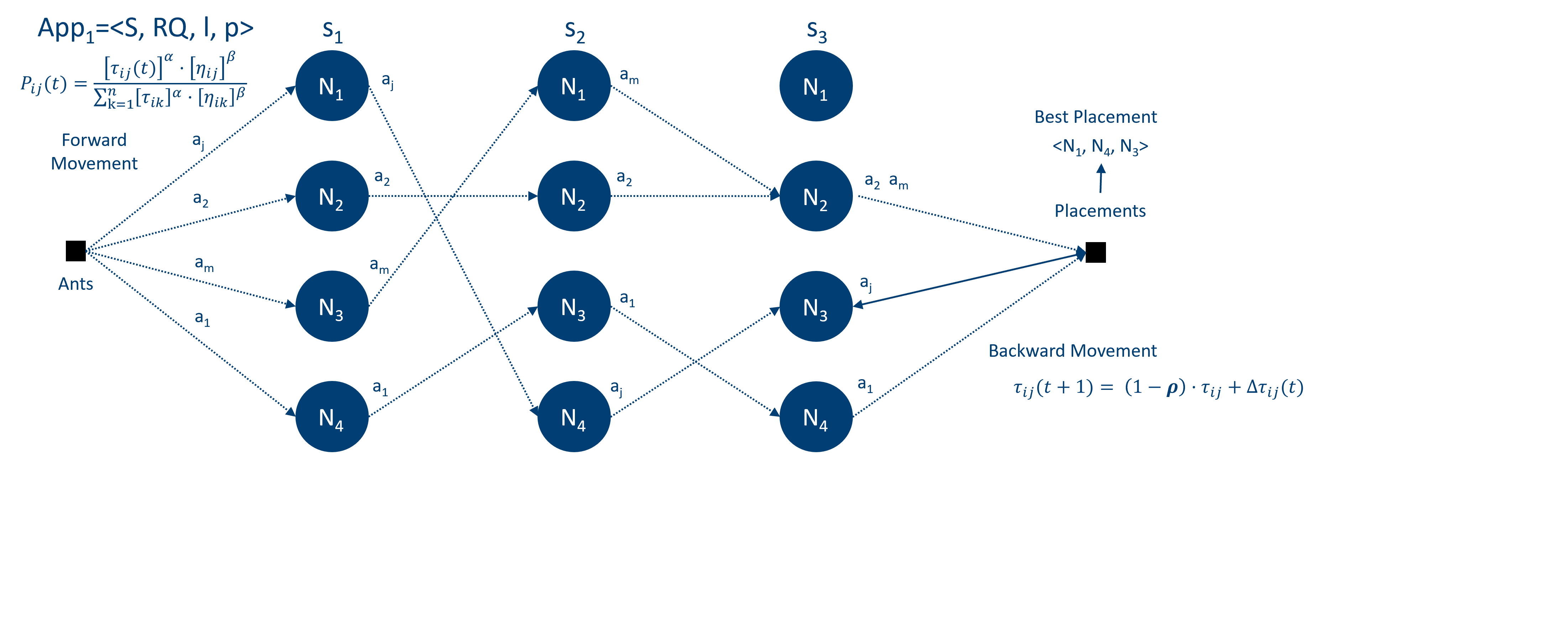

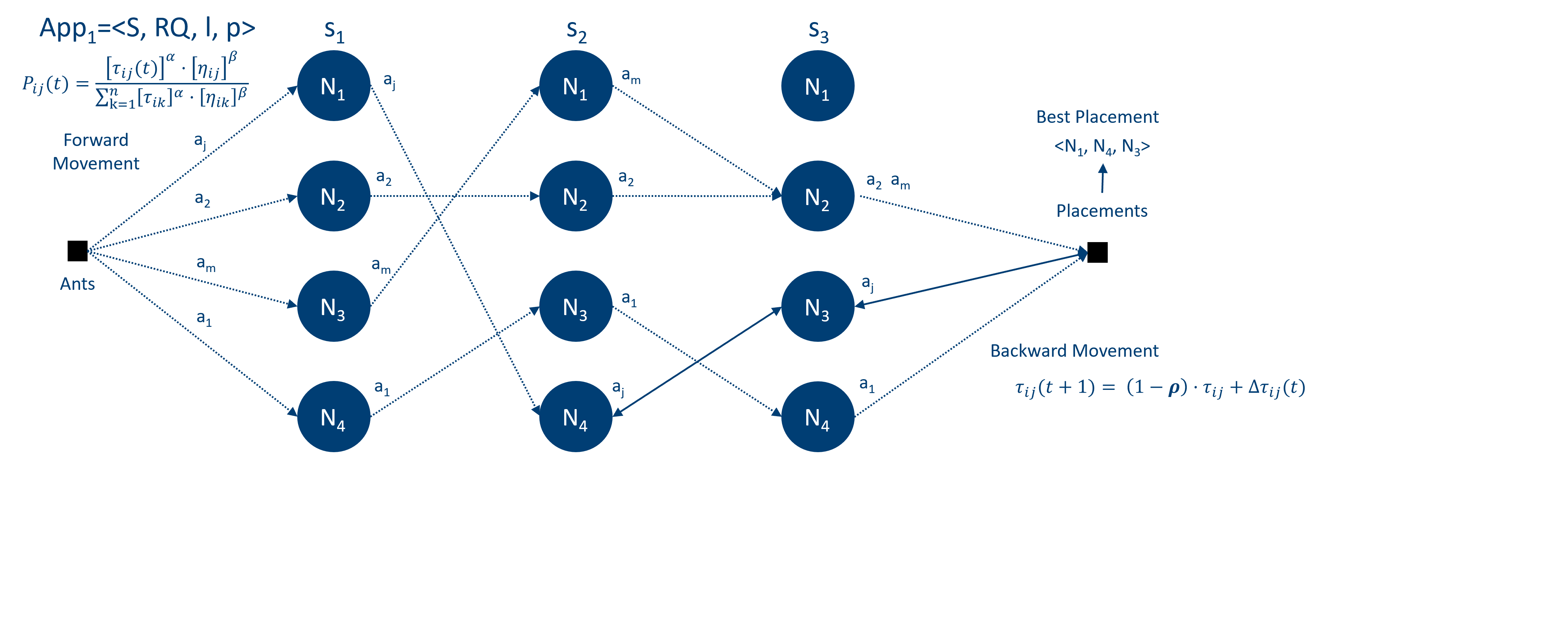

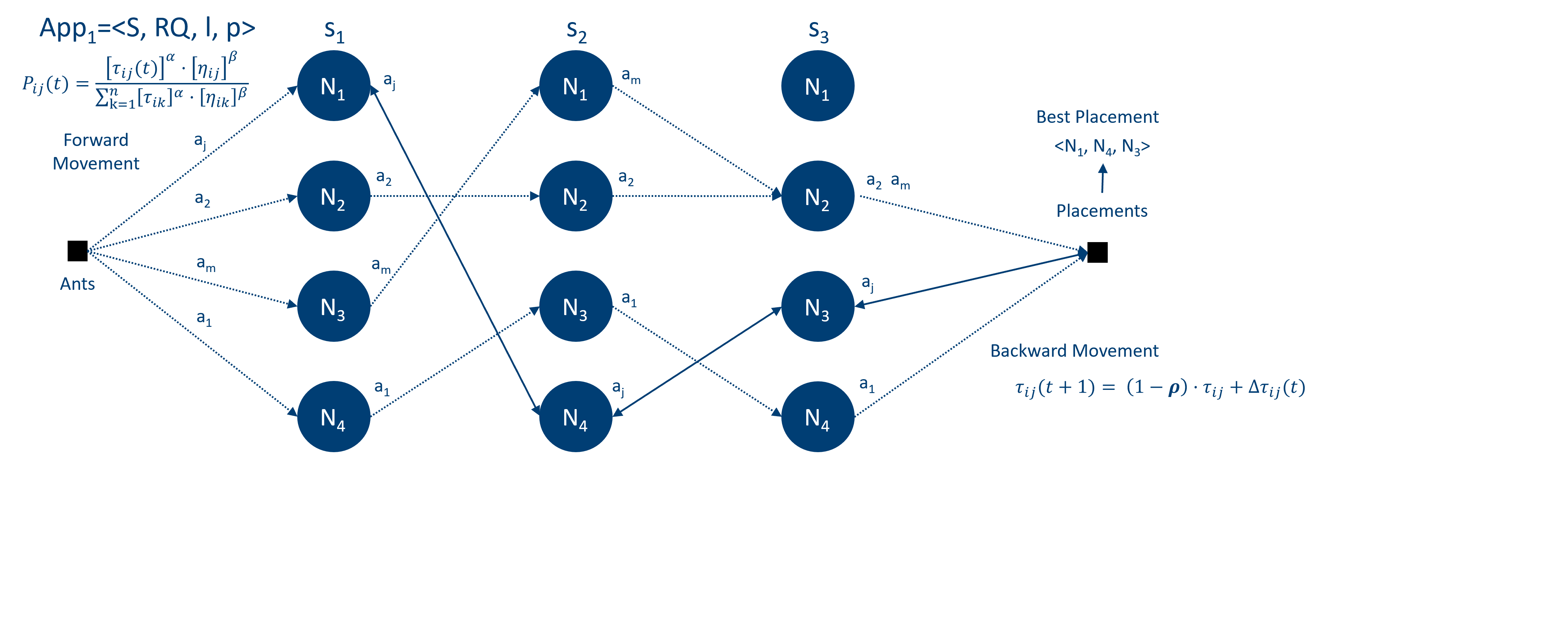

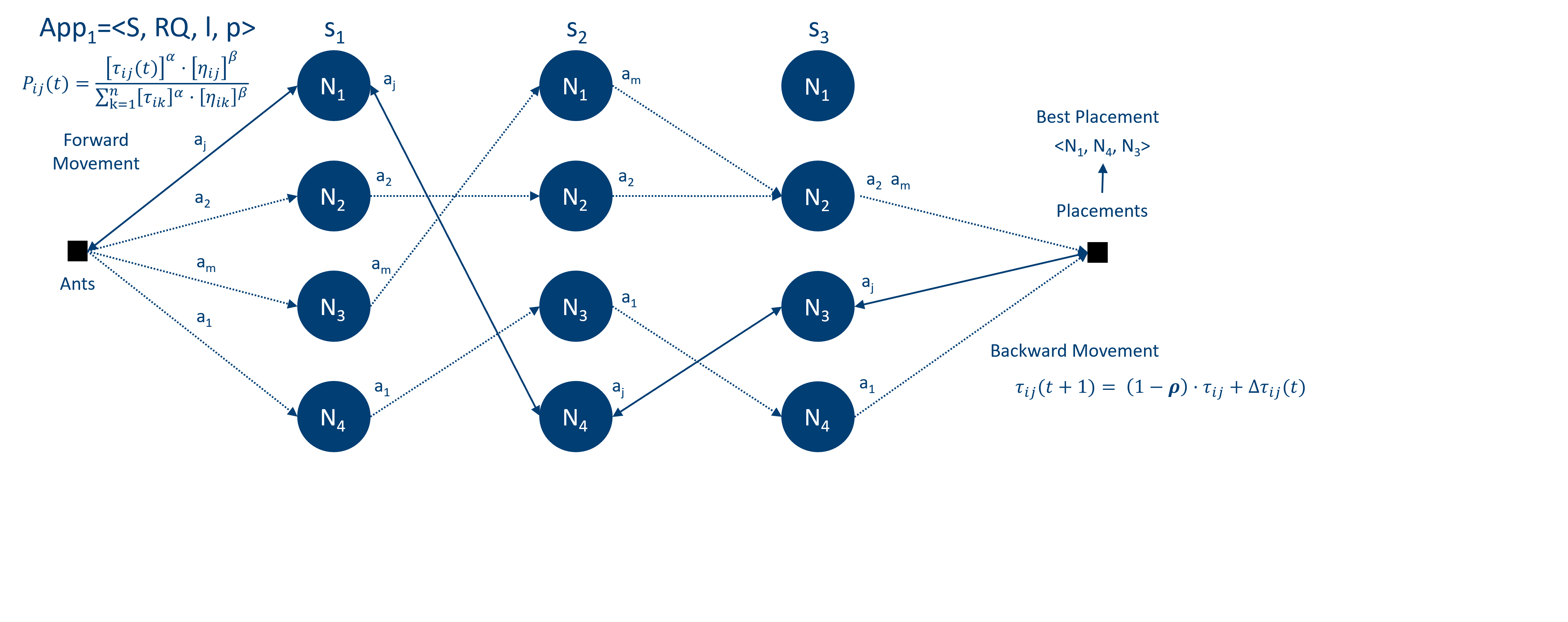

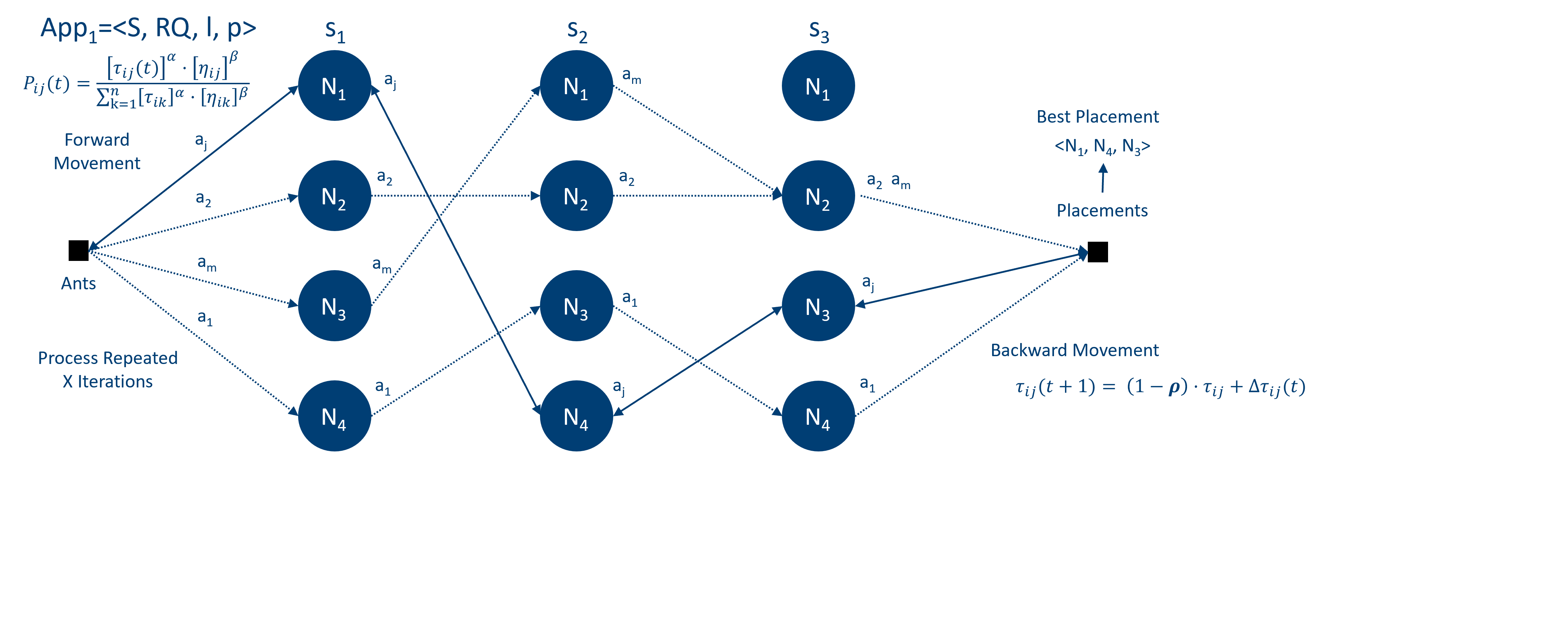

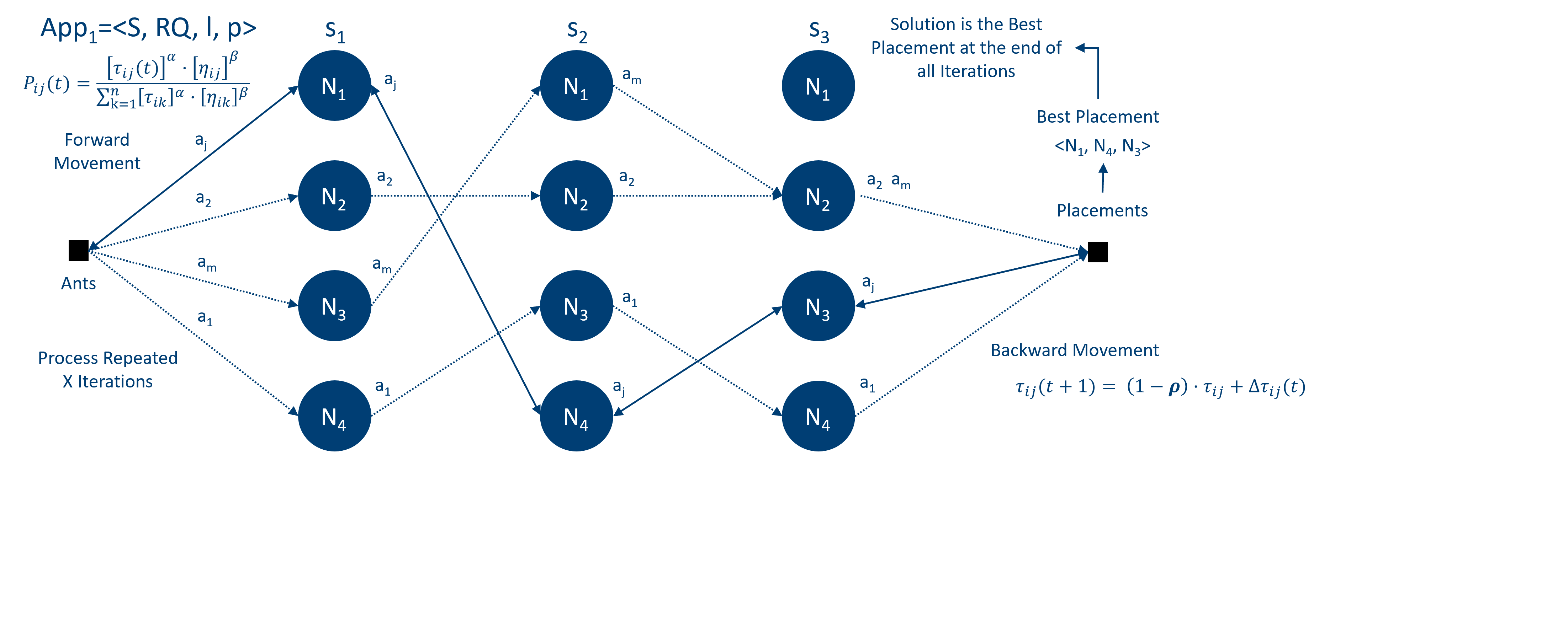

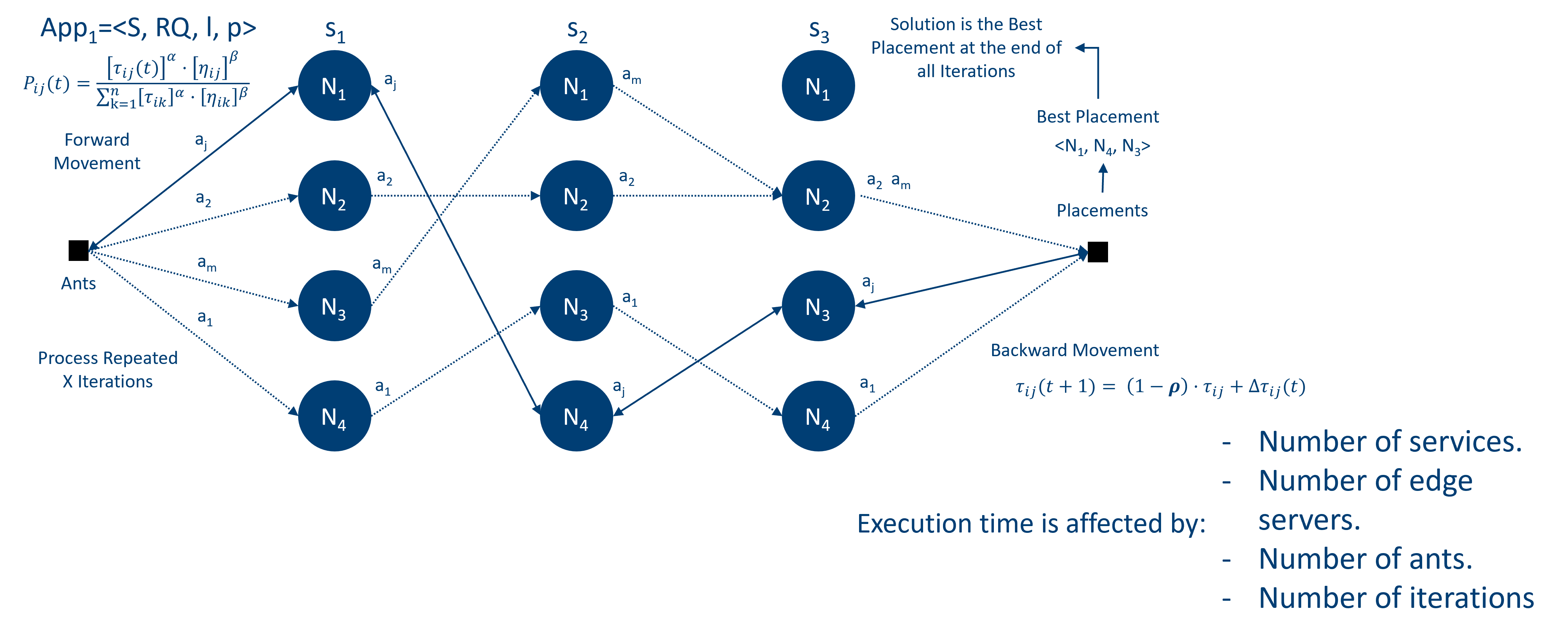

Ant Colony Optimization Algorithm

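In the standard ACO transition rule, the probability that an ant assigns service $i$ to edge server $j$ is:

$$p_{ij} = \frac{\tau_{ij}^{\alpha} \, \eta_{ij}^{\beta}}{\sum_{l \in \mathcal{N}_i} \tau_{il}^{\alpha} \, \eta_{il}^{\beta}}$$

Where: $\tau_{ij}$ is the pheromone level, $\eta_{ij}$ is a heuristic desirability (e.g., inverse latency), $\alpha$ and $\beta$ weight their relative influence, and $\mathcal{N}_i$ is the set of feasible servers for service $i$.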
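A minimal, generic ACO sketch for service placement, in pure Python. It is not the exact formulation from these slides: it assumes a latency-only objective, a capacity counted in number of services per server (with total capacity at least the number of services), inverse latency as the heuristic, and illustrative values for `alpha`, `beta`, the evaporation rate `rho`, and the number of ants and iterations. Note that the work per iteration grows with ants × services × servers.

```python
import random

def aco_placement(latency, capacity, n_ants=10, n_iterations=30,
                  alpha=1.0, beta=2.0, rho=0.5, seed=0):
    """Assign services to edge servers with ant colony optimisation.
    latency[i][j]: latency if service i runs on server j.
    capacity[j]: max services on server j (assumes sum(capacity) >= number of services).
    Returns (best_assignment, best_cost); best_assignment[i] is the server of service i."""
    rng = random.Random(seed)
    n_services, n_servers = len(latency), len(latency[0])
    tau = [[1.0] * n_servers for _ in range(n_services)]                                 # pheromone
    eta = [[1.0 / latency[i][j] for j in range(n_servers)] for i in range(n_services)]  # heuristic
    best_assignment, best_cost = None, float("inf")
    for _ in range(n_iterations):
        solutions = []
        for _ in range(n_ants):
            load = [0] * n_servers
            assignment = []
            for i in range(n_services):
                feasible = [j for j in range(n_servers) if load[j] < capacity[j]]
                weights = [tau[i][j] ** alpha * eta[i][j] ** beta for j in feasible]
                j = rng.choices(feasible, weights=weights)[0]   # transition rule p_ij
                load[j] += 1
                assignment.append(j)
            cost = sum(latency[i][j] for i, j in enumerate(assignment))
            solutions.append((assignment, cost))
            if cost < best_cost:
                best_assignment, best_cost = assignment, cost
        # Evaporate all trails, then each ant deposits pheromone inversely proportional to its cost
        for i in range(n_services):
            for j in range(n_servers):
                tau[i][j] *= (1.0 - rho)
        for assignment, cost in solutions:
            for i, j in enumerate(assignment):
                tau[i][j] += 1.0 / cost
    return best_assignment, best_cost

latency = [[1, 5, 9], [6, 1, 7], [8, 6, 1]]   # hypothetical latencies (ms)
assignment, cost = aco_placement(latency, capacity=[1, 1, 1])
print(assignment, cost)
```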

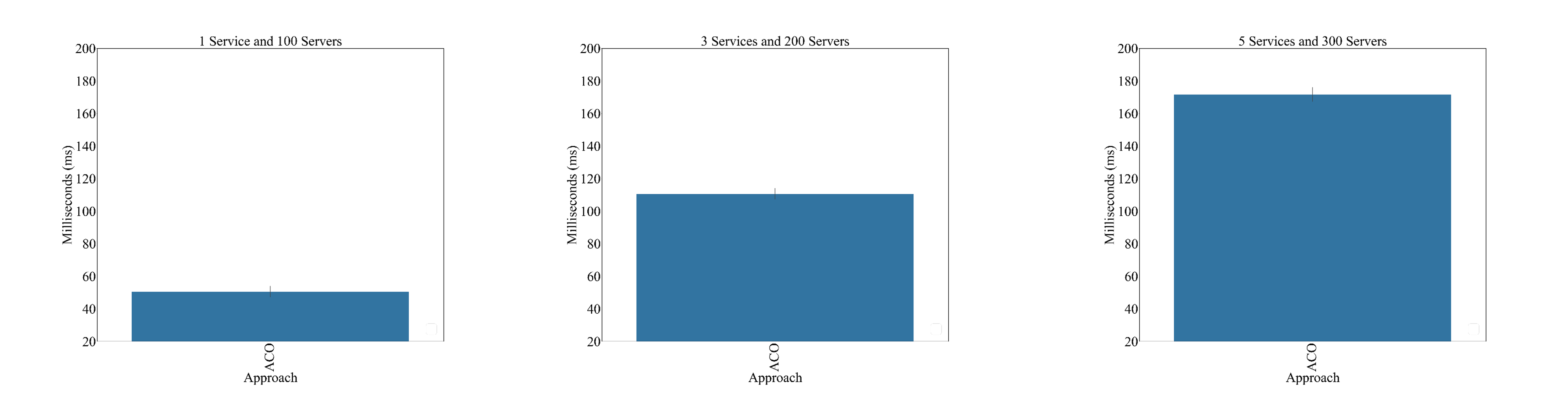

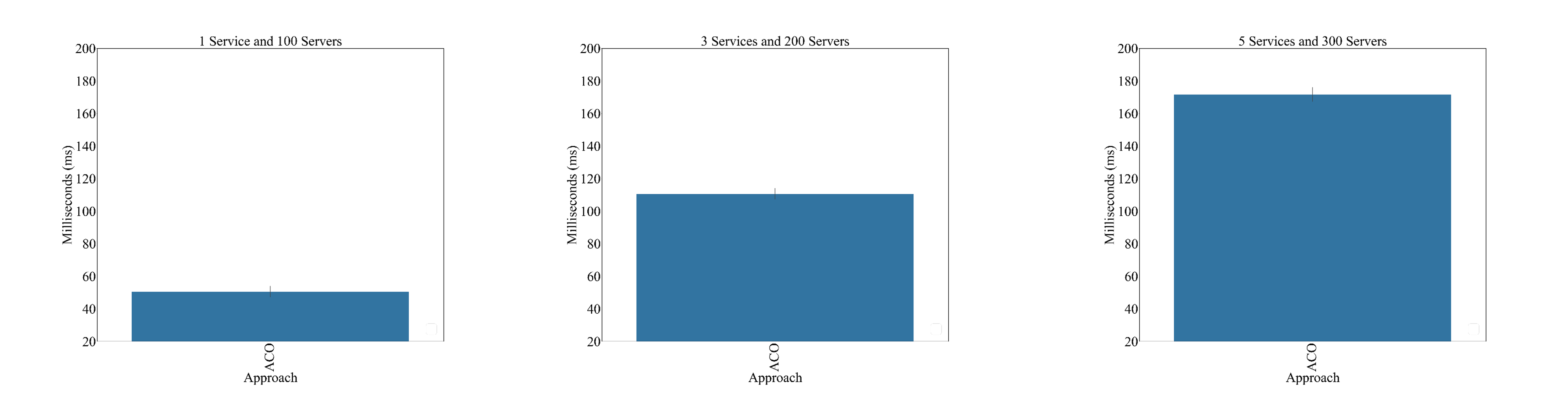

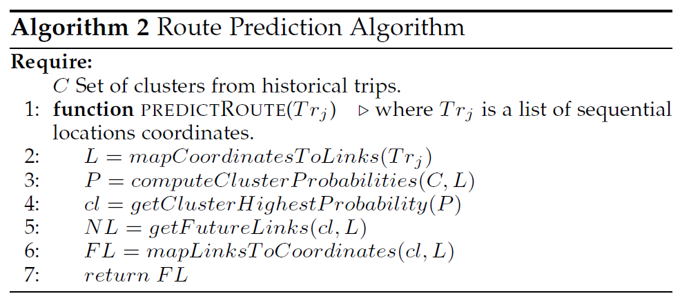

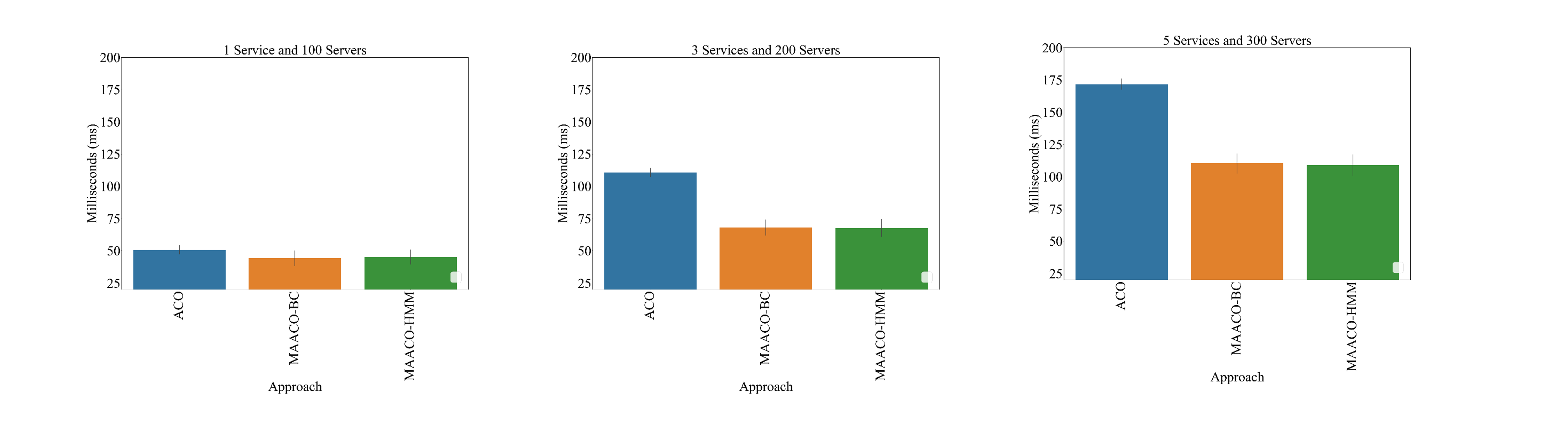

This execution time does not suit low-latency requirements, but it is inherent to ACO's design: every iteration builds and evaluates a complete candidate solution for each ant.

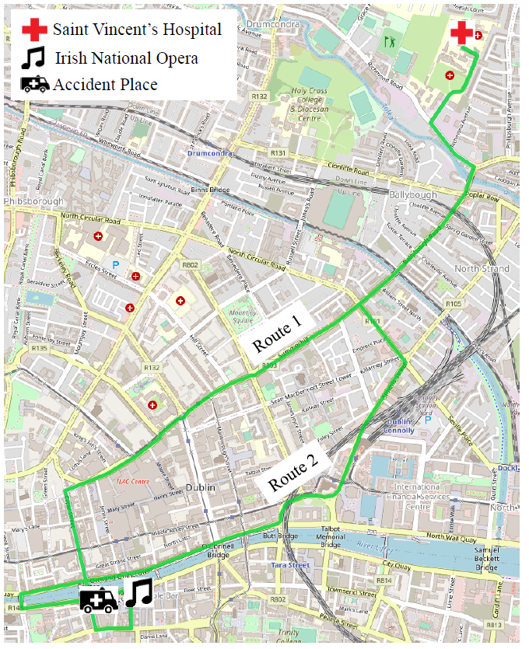

We should analyse the problem first:

Variables we cannot reduce:

- Number of services

- Number of iterations

- Number of ants

We can, however, reduce the number of candidate servers. How?

We can pre-select edge servers by predicting user locations.
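A minimal sketch of the pre-selection idea, assuming hypothetical planar server coordinates, a list of current and predicted user locations, and a distance radius in the same units. Only servers close to at least one of those locations are kept as candidates, which shrinks the search space the optimiser has to explore.

```python
import math

def preselect_servers(servers, predicted_locations, radius):
    """Keep only edge servers within `radius` of at least one current or predicted user location.
    servers: {server_id: (x, y)}; predicted_locations: list of (x, y) in the same units."""
    return [sid for sid, (sx, sy) in servers.items()
            if any(math.dist((sx, sy), loc) <= radius for loc in predicted_locations)]

# Hypothetical server coordinates and predicted user locations
servers = {"s1": (0.0, 0.0), "s2": (1.0, 1.0), "s3": (10.0, 10.0), "s4": (12.0, 9.0)}
predicted = [(0.5, 0.5), (1.5, 1.0)]
print(preselect_servers(servers, predicted, radius=2.0))   # ['s1', 's2']
```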

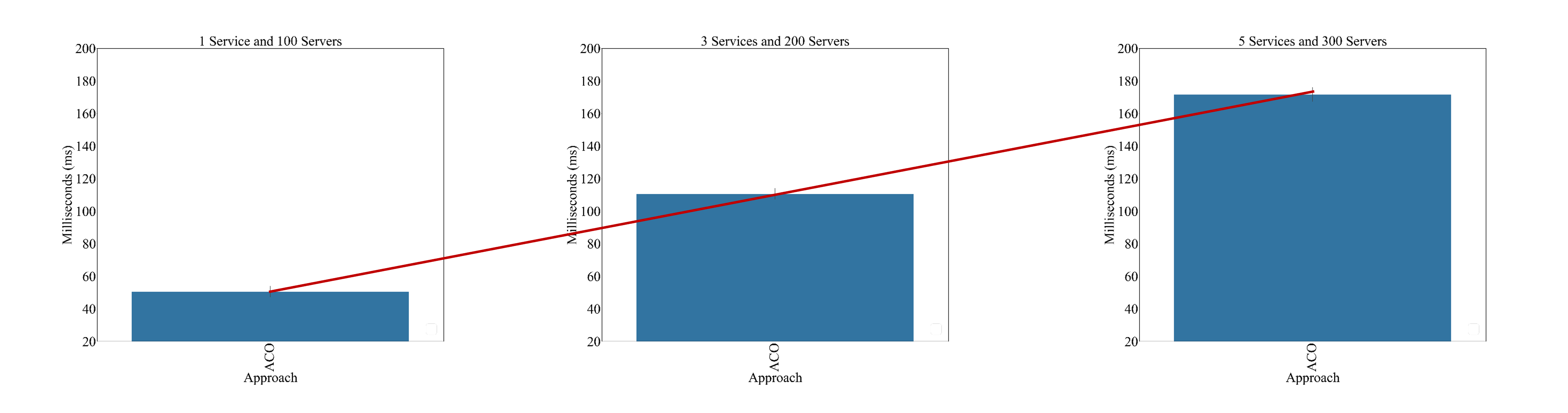

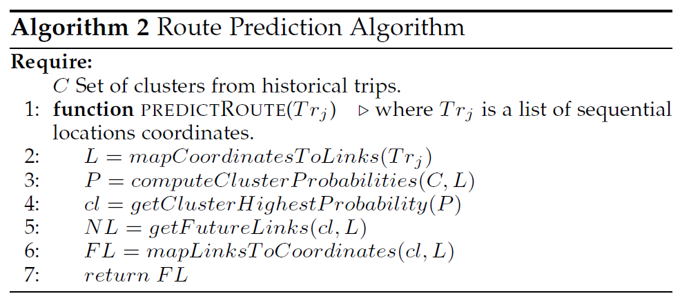

We select edge servers close to users' current and predicted future locations. We used two approaches that cluster historical trips and use these clusters to predict the next link (street segment) in the user's path:

- Bayesian Classifier

- Hidden Markov Model

Bayesian Classifier

- The size of the transition matrix grows with the number of streets in a city.

- A large amount of data (i.e., trips) is needed to train the model.

- Training time is now an issue!

- We assumed a limited number of streets in our work.

Hidden Markov Model

- The size of the frequency matrix grows with the number of streets in a city.

- A large amount of data (i.e., trips) is needed to train the model.

- Training time is now an issue!

- We assumed a limited number of streets in our work.

Again, new design decisions are needed to deploy these algorithms in the real world.
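The scalability issue is visible even in a simpler model. The sketch below is a first-order Markov-chain next-street predictor built from street-to-street counts; it is neither the Bayesian classifier nor the HMM used in this work, and the trips are hypothetical. The count matrix has one row and one column per street, so its size grows quadratically with the number of streets, and more trips are needed to estimate it reliably.

```python
import numpy as np

def fit_transition_counts(trips, n_streets):
    """Count street-to-street transitions in historical trips (n_streets x n_streets matrix)."""
    counts = np.zeros((n_streets, n_streets))
    for trip in trips:
        for current, nxt in zip(trip, trip[1:]):
            counts[current, nxt] += 1
    return counts

def predict_next_street(counts, current):
    """Most frequent next street after `current`, or None if it was never observed."""
    row = counts[current]
    return int(np.argmax(row)) if row.sum() > 0 else None

# Hypothetical trips over 5 streets (each trip is a sequence of street ids)
trips = [[0, 1, 2, 3], [0, 1, 2, 4], [1, 2, 3], [0, 1, 3]]
counts = fit_transition_counts(trips, n_streets=5)
print(predict_next_street(counts, 2), predict_next_street(counts, 4))   # 3 None
```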

Hardware considerations:

- Data Collection: Ensure sufficient storage capacity for large datasets and high-speed data transfer capabilities.

- Model Training: Invest in powerful GPUs or TPUs to handle intensive computations and reduce training time.

- Model Deployment: Consider edge devices for real-time processing and scalability of the deployment infrastructure.

- Maintenance and Updates: Plan for hardware upgrades and maintenance to accommodate evolving model requirements.

Software considerations:

- Data Management: Implement efficient data preprocessing and cleaning pipelines to ensure high-quality input for models.

- Model Development: Utilize frameworks like TensorFlow or PyTorch for building and experimenting with different model architectures.

- Version Control: Use tools like Git to manage code versions and collaborate effectively with team members.

- Continuous Integration/Continuous Deployment (CI/CD): Set up automated testing and deployment pipelines to streamline updates and ensure reliability.

- Scalability: Design software architecture to support scaling, such as using microservices or serverless computing for flexible resource management.

- Security: Implement robust security measures to protect data privacy and model integrity.

AI as a Service

SOA (Service-Oriented Architecture) is a design pattern in which components provide services to other components through a communication protocol over a network.

Microservices are an architectural style that structures an application as a collection of small, autonomous services. Each microservice is self-contained and implements a business capability.

The concept of "Everything as a Service" (XaaS) extends the principles of SOA and microservices by offering comprehensive services over the internet. XaaS encompasses a wide range of services, including infrastructure, platforms, and software.

AI as a Service (AIaaS) enables us to access and expose AI capabilities over the internet. We can integrate AI tools such as machine learning models, natural language processing, and computer vision into our applications leveraging SOA and microservices features.

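```python
from flask import Flask, request, jsonify

app = Flask(__name__)

class SentimentAnalysisService:
    """Wraps a sentiment model whose predict() is assumed to return a score in [-1, 1]."""

    def __init__(self, model):
        self.model = model

    def analyze_sentiment(self, text):
        sentiment_score = self.model.predict(text)
        if sentiment_score > 0.5:
            return "Positive"
        elif sentiment_score < -0.5:
            return "Negative"
        else:
            return "Neutral"

...  # model loading and creation of `service` (a SentimentAnalysisService instance)

@app.route('/analyze', methods=['POST'])
def analyze():
    # silent=True returns None instead of raising on a missing or malformed JSON body
    data = request.get_json(silent=True) or {}
    text_to_analyze = data.get('text', '')
    sentiment = service.analyze_sentiment(text_to_analyze)
    return jsonify({'sentiment': sentiment})

...
```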

MLOps

MLOps is a set of practices and tools that support deploying and maintaining ML models in production reliably and efficiently. The goal is to automate and streamline the ML pipeline. These practices and tools cover every stage of the pipeline, from data collection, model training, and deployment to monitoring and governance. We aim to ensure that ML models are robust, scalable, and continuously delivering value.

- Automated data collection

- Automated model training and validation

- Continuous integration and continuous deployment

- Monitoring and logging

- Governance and compliance

- Scalability and reliability

```python
import mlflow
import logging
from datetime import datetime

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

class ModelMonitor:
    def __init__(self, model_name):
        self.model_name = model_name
        mlflow.set_tracking_uri("http://localhost:5000")

    def log_prediction(self, input_data, prediction,
                       actual=None, model_version="1.0"):
        """Log model predictions for monitoring"""
        with mlflow.start_run():
            mlflow.log_params({
                "input_size": len(input_data),
                "model_version": model_version,
                "timestamp": datetime.now().isoformat()
            })
            mlflow.log_metric("prediction", prediction)
            if actual is not None:
                mlflow.log_metric("actual", actual)
                mlflow.log_metric("error", abs(prediction - actual))
        logger.info(f"Prediction logged: {prediction}")

    def calculate_drift(self, current_stats, baseline_stats):
        """Placeholder drift measure (assumption: not defined in the original).
        Shift of the mean in units of the baseline standard deviation;
        both arguments are assumed to be dicts with "mean" and "std" keys."""
        return abs(current_stats["mean"] - baseline_stats["mean"]) / baseline_stats["std"]

    def monitor_drift(self, current_stats, baseline_stats):
        """Monitor for data drift"""
        drift_score = self.calculate_drift(current_stats, baseline_stats)
        mlflow.log_metric("drift_score", drift_score)
        if drift_score > 0.1:  # Threshold (tune per application)
            logger.warning(f"Data drift detected: {drift_score}")
        return drift_score
```

Conclusions

Overview

- AI Systems

- AI as a Service

- MLOps

Course Summary

- Machine Learning Context

- Machine Learning Definition

- The Problem First

- Data Orientation

- Data Quality

- Supervised and Reinforcement Learning

- Large Language Models

- AI Systems

AI History - Machine Learning Age (2001 - present)

ML Definition

Our ML projects must have a purpose...

The ML Adoption Process