ML Deployment

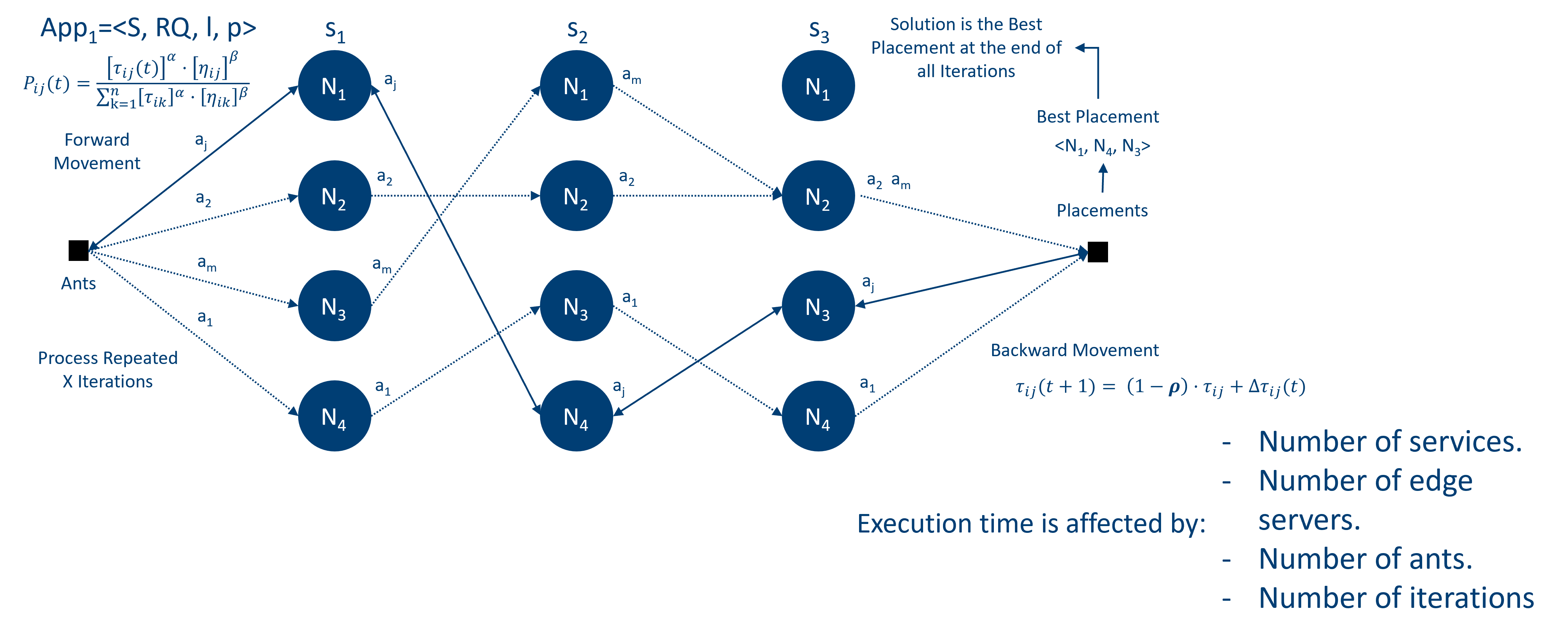

Ant Colony Optimization Algorithm

AI Systems

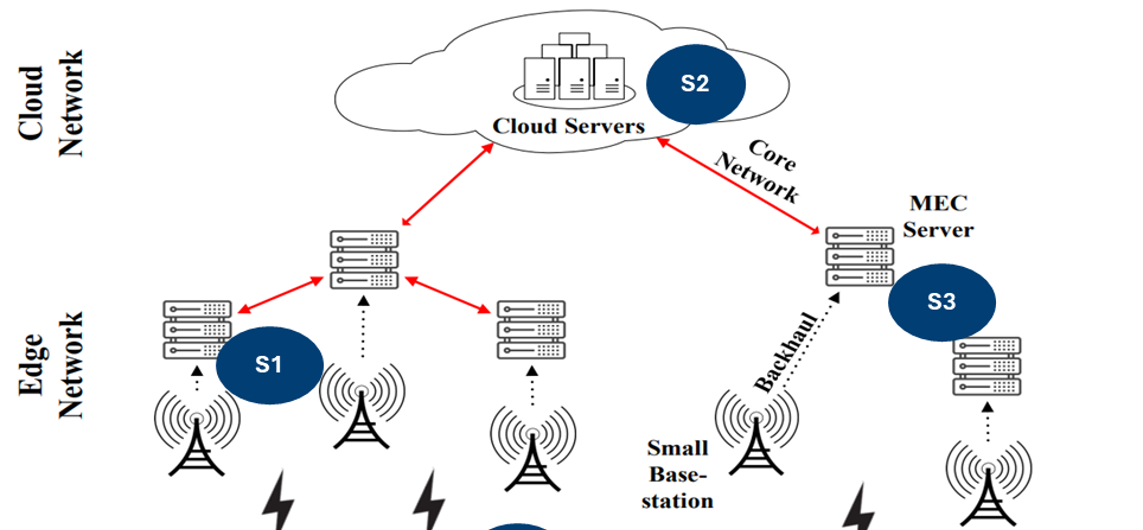

Hardware considerations:

- Data Collection: Ensure sufficient storage capacity for large datasets and high-speed data transfer capabilities.

- Model Training: Invest in powerful GPUs or TPUs to handle intensive computations and reduce training time.

- Model Deployment: Consider edge devices for real-time processing and scalability of the deployment infrastructure.

- Maintenance and Updates: Plan for hardware upgrades and maintenance to accommodate evolving model requirements.
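
As a rough illustration of the sizing questions behind the list above, the following back-of-the-envelope sketch estimates how long a dataset takes to move over a link and how much memory a model's weights occupy. The function names and the example figures (1 TB, 10 Gbit/s, 7 billion parameters) are illustrative assumptions, not values from the slides; real requirements also depend on activations, optimizer state, and protocol overhead.

```python
def transfer_time_seconds(dataset_bytes: float, link_bits_per_second: float) -> float:
    """Idealised time to move a dataset over a link (ignores protocol overhead)."""
    return dataset_bytes * 8 / link_bits_per_second


def parameter_memory_bytes(num_parameters: int, bytes_per_parameter: int = 4) -> int:
    """Memory needed just to hold the weights (4 bytes each for 32-bit floats)."""
    return num_parameters * bytes_per_parameter


if __name__ == "__main__":
    # Hypothetical figures, chosen only to make the arithmetic easy to follow.
    seconds = transfer_time_seconds(1e12, 10e9)      # 1 TB over a 10 Gbit/s link
    weights = parameter_memory_bytes(7_000_000_000)  # 7 billion parameters
    print(f"transfer: {seconds:.0f} s, weights: {weights / 1e9:.0f} GB")
```

Estimates like these are only a starting point for choosing storage, network, and accelerator capacity.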

Software considerations:

- Data Management: Implement efficient data preprocessing and cleaning pipelines to ensure high-quality input for models.

- Model Development: Utilize frameworks like TensorFlow or PyTorch for building and experimenting with different model architectures.

- Version Control: Use tools like Git to manage code versions and collaborate effectively with team members.

- Continuous Integration/Continuous Deployment (CI/CD): Set up automated testing and deployment pipelines to streamline updates and ensure reliability.

- Scalability: Design software architecture to support scaling, such as using microservices or serverless computing for flexible resource management.

- Security: Implement robust security measures to protect data privacy and model integrity.
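
To make the data management point above more concrete, here is a minimal sketch of a cleaning step for text records. The function names and the cleaning rules (whitespace normalisation, lowercasing, dropping missing values and exact duplicates) are assumptions chosen for illustration; a real pipeline would apply rules specific to its data.

```python
from typing import Iterable, List, Optional, Set


def clean_text(raw: Optional[str]) -> Optional[str]:
    """Normalise whitespace and case; return None for missing or empty input."""
    if raw is None:
        return None
    text = " ".join(raw.split()).lower()
    return text or None


def preprocess(records: Iterable[Optional[str]]) -> List[str]:
    """Clean each record, then drop missing values and exact duplicates (order preserved)."""
    seen: Set[str] = set()
    cleaned: List[str] = []
    for raw in records:
        text = clean_text(raw)
        if text is None or text in seen:
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned


if __name__ == "__main__":
    print(preprocess(["  Great  product ", None, "great product", "", "Bad"]))
```

Small, pure functions like these are also easy to cover with automated tests in a CI/CD pipeline.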

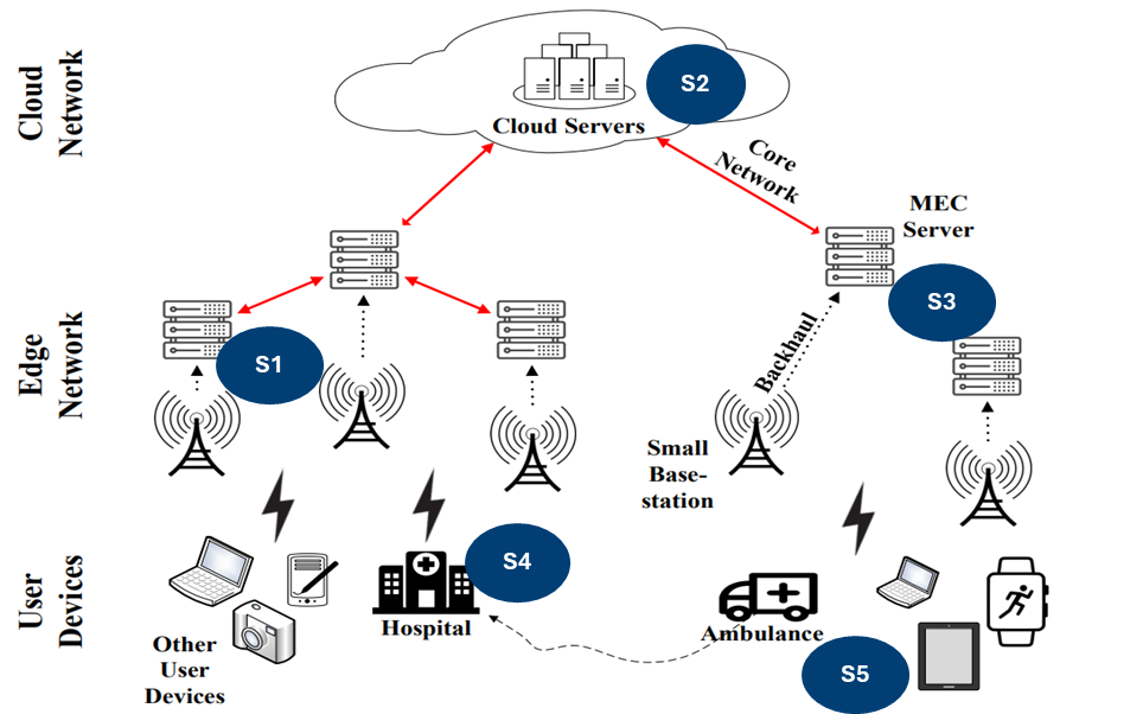

AI as a Service

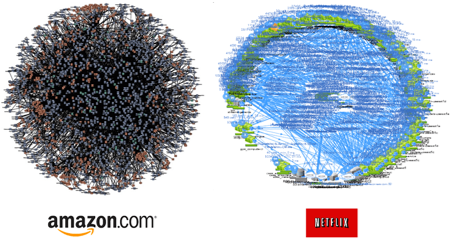

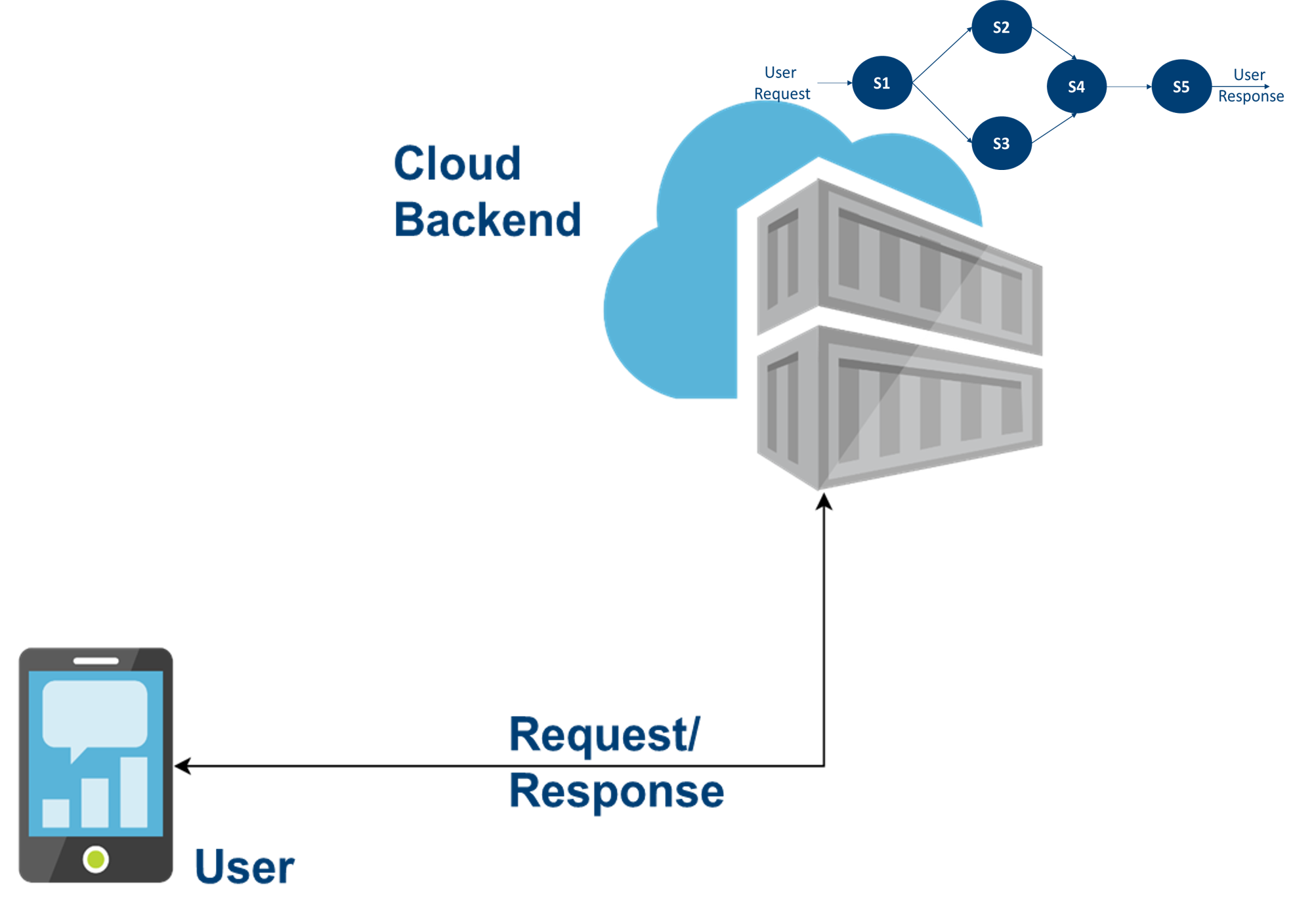

Service-Oriented Architecture (SOA) is a design pattern in which components provide services to one another through a communication protocol over a network.

Microservices are an architectural style that structures an application as a collection of small, autonomous services. Each microservice is self-contained and implements a business capability.
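
The definitions above can be sketched with nothing but the Python standard library: one small service that implements a single capability, and a client that reaches it only through a network protocol (JSON over HTTP here). The word-counting capability, `WordCountHandler`, `start_service`, and `count_words` are hypothetical names chosen for illustration, not part of the course material.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer


class WordCountHandler(BaseHTTPRequestHandler):
    """One small, self-contained capability: counting the words in a text."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"words": len(payload.get("text", "").split())}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # Silence the default per-request logging.


def start_service() -> HTTPServer:
    """Start the service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), WordCountHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def count_words(port: int, text: str) -> int:
    """Client side: the caller knows only the network contract, not the implementation."""
    connection = HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        connection.request(
            "POST", "/",
            body=json.dumps({"text": text}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        return json.loads(connection.getresponse().read())["words"]
    finally:
        connection.close()


if __name__ == "__main__":
    server = start_service()
    print(count_words(server.server_address[1], "services over a network"))
    server.shutdown()
```

Because the client depends only on the request and response format, the service could be rewritten, scaled, or redeployed without changing the caller.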

The concept of "Everything as a Service" (XaaS) extends the principles of SOA and microservices by offering comprehensive services over the internet. XaaS encompasses a wide range of services, including infrastructure, platforms, and software.

AI as a Service (AIaaS) enables us to access and expose AI capabilities over the internet. By building on SOA and microservices principles, we can integrate AI tools such as machine learning models, natural language processing, and computer vision into our applications.

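```python
from flask import Flask, request, jsonify

app = Flask(__name__)


class SentimentAnalysisService:
    """Wraps a sentiment model and maps its score to a label."""

    def __init__(self, model):
        self.model = model

    def analyze_sentiment(self, text):
        # The thresholds below suggest the model returns a score in [-1, 1].
        sentiment_score = self.model.predict(text)
        if sentiment_score > 0.5:
            return "Positive"
        elif sentiment_score < -0.5:
            return "Negative"
        else:
            return "Neutral"

...

@app.route('/analyze', methods=['POST'])
def analyze():
    # Fall back to an empty payload if the body is missing or is not valid JSON.
    data = request.get_json(silent=True) or {}
    text_to_analyze = data.get('text', '')
    # `service` is presumably a SentimentAnalysisService created in the elided part above.
    sentiment = service.analyze_sentiment(text_to_analyze)
    return jsonify({'sentiment': sentiment})

...
```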
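
The service above leaves the `model` object unspecified. As a stand-in for experimenting with the thresholds, the sketch below defines a toy lexicon-based model whose `predict` returns a score in [-1, 1]. `KeywordSentimentModel`, its word lists, its scoring rule, and the `label` helper are hypothetical and only illustrate the interface the service appears to expect; a real deployment would load a trained model instead.

```python
class KeywordSentimentModel:
    """Toy model: score = (positive hits - negative hits) / total hits, in [-1, 1]."""

    POSITIVE = {"good", "great", "love", "excellent"}
    NEGATIVE = {"bad", "poor", "hate", "terrible"}

    def predict(self, text: str) -> float:
        words = [word.strip(".,!?").lower() for word in text.split()]
        positive = sum(word in self.POSITIVE for word in words)
        negative = sum(word in self.NEGATIVE for word in words)
        hits = positive + negative
        return 0.0 if hits == 0 else (positive - negative) / hits


def label(score: float) -> str:
    """Apply the same thresholds as analyze_sentiment in the service above."""
    if score > 0.5:
        return "Positive"
    if score < -0.5:
        return "Negative"
    return "Neutral"


if __name__ == "__main__":
    model = KeywordSentimentModel()
    for text in ["I love this, great work!", "Terrible and bad.", "Good but bad."]:
        print(text, "->", label(model.predict(text)))
```

Any object with a compatible `predict` method could be passed to `SentimentAnalysisService` in the same way, which is what lets the model be swapped without changing the endpoint.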

MLOps

MLOps is a set of practices and tools that support deploying and maintaining ML models in production reliably and efficiently. The goal is to automate and streamline the ML pipeline. These practices and tools cover every pipeline stage, from data collection, model training, and deployment to monitoring and governance. We aim to ensure that ML models are robust and scalable and that they continuously deliver value.

- Automated data collection

- Automated model training and validation

- Continuous integration and continuous deployment

- Monitoring and logging

- Governance and compliance

- Scalability and reliability
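
One way to picture the automated validation and continuous deployment items above is a promotion gate: a newly trained model replaces the production model only if it passes explicit checks. The sketch below is a simplified assumption of how such a gate might look; `EvaluationResult`, `should_promote`, the single accuracy metric, and both thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvaluationResult:
    model_version: str
    accuracy: float  # Measured on a held-out validation set.


def should_promote(candidate: EvaluationResult, production: EvaluationResult,
                   min_accuracy: float = 0.80, min_gain: float = 0.01) -> bool:
    """Promote only if the candidate clears an absolute floor and beats production by a margin."""
    meets_floor = candidate.accuracy >= min_accuracy
    beats_production = candidate.accuracy - production.accuracy >= min_gain
    return meets_floor and beats_production


if __name__ == "__main__":
    production = EvaluationResult("1.0", accuracy=0.84)
    candidate = EvaluationResult("1.1", accuracy=0.86)
    print("promote" if should_promote(candidate, production) else "keep current model")
```

In a CI/CD pipeline, a check like this would typically run after training and block deployment when it returns `False`.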

```python
import logging
from datetime import datetime

import mlflow

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class ModelMonitor:
    def __init__(self, model_name):
        self.model_name = model_name
        # Assumes an MLflow tracking server is reachable at this address.
        mlflow.set_tracking_uri("http://localhost:5000")

    def log_prediction(self, input_data, prediction,
                       actual=None, model_version="1.0"):
        """Log model predictions for monitoring"""
        with mlflow.start_run():
            mlflow.log_params({
                "input_size": len(input_data),
                "model_version": model_version,
                "timestamp": datetime.now().isoformat()
            })
            mlflow.log_metric("prediction", prediction)
            if actual is not None:
                mlflow.log_metric("actual", actual)
                mlflow.log_metric("error", abs(prediction - actual))
        logger.info(f"Prediction logged: {prediction}")

    def monitor_drift(self, current_stats, baseline_stats):
        """Monitor for data drift"""
        # calculate_drift is not defined in this excerpt; it must be provided elsewhere.
        drift_score = self.calculate_drift(current_stats, baseline_stats)
        mlflow.log_metric("drift_score", drift_score)
        if drift_score > 0.1:  # Threshold
            logger.warning(f"Data drift detected: {drift_score}")
```
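
The monitor above calls `calculate_drift` without showing it. The following is one possible sketch, not the original implementation: it assumes the statistics are dictionaries mapping each feature name to its `mean` and `std`, and it scores drift as the largest shift in a feature mean measured in baseline standard deviations. The data layout, the scoring rule, and the `eps` guard are all assumptions.

```python
from typing import Mapping

FeatureStats = Mapping[str, Mapping[str, float]]


def calculate_drift(current_stats: FeatureStats, baseline_stats: FeatureStats,
                    eps: float = 1e-12) -> float:
    """Largest shift in a feature mean, in units of the baseline standard deviation."""
    shifts = []
    for feature, baseline in baseline_stats.items():
        current = current_stats.get(feature)
        if current is None:
            continue  # Feature missing from the current window; skipped in this sketch.
        scale = max(baseline["std"], eps)  # Guard against division by zero.
        shifts.append(abs(current["mean"] - baseline["mean"]) / scale)
    return max(shifts, default=0.0)


if __name__ == "__main__":
    baseline = {"age": {"mean": 40.0, "std": 10.0}, "income": {"mean": 50.0, "std": 5.0}}
    current = {"age": {"mean": 42.0, "std": 10.0}, "income": {"mean": 50.0, "std": 5.0}}
    print(calculate_drift(current, baseline))
```

To use it from `ModelMonitor`, the function could be attached to the class as a static method. Established alternatives, such as the population stability index or a two-sample statistical test, may be more appropriate depending on the data.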

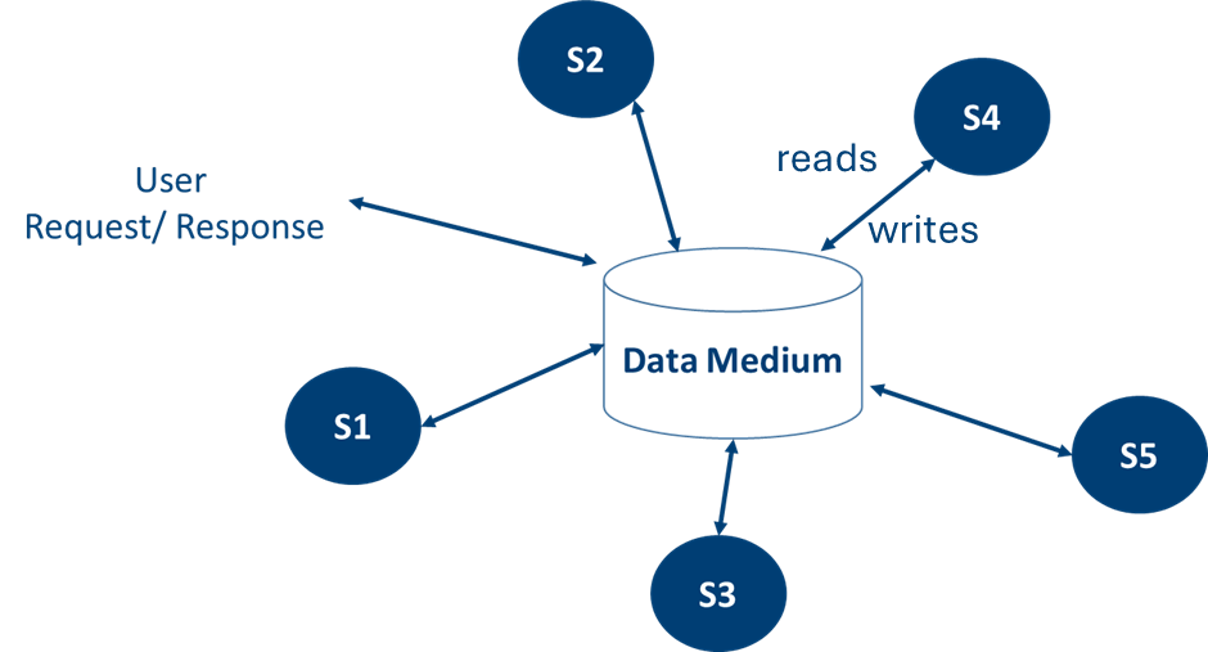

Data-Orientation

Focus on Operations

- Separation of concerns

- High availability

- Scalability

- Low latency

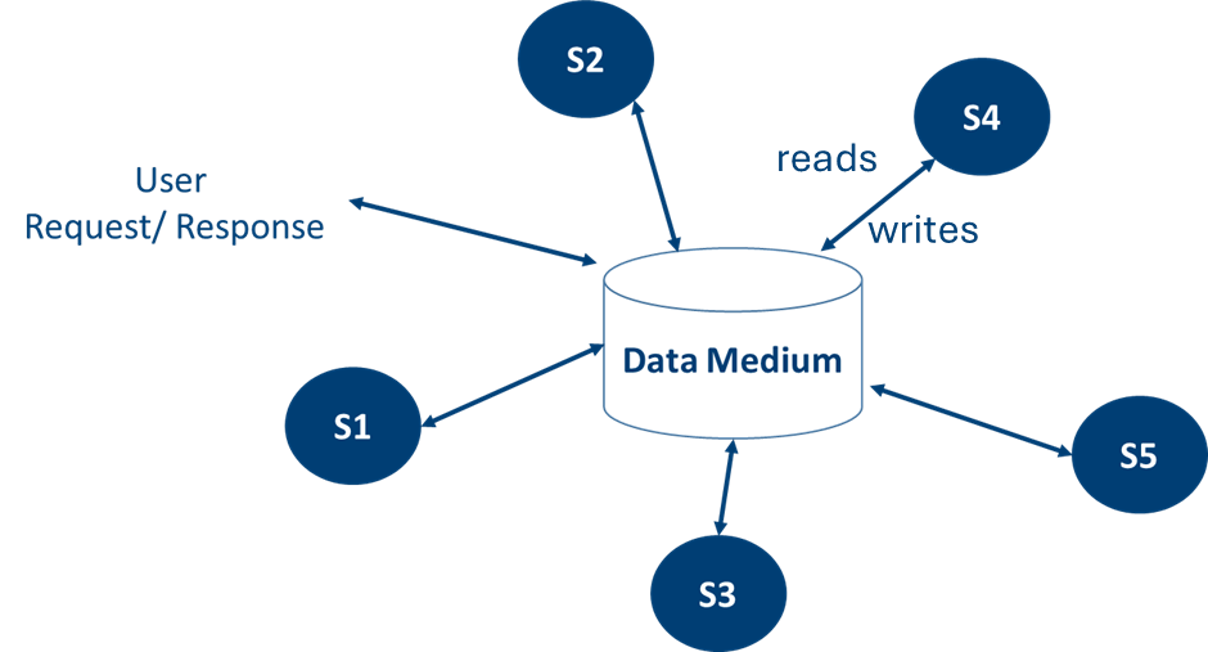

The Data Dichotomy: “While data-driven systems are about exposing data, service-oriented architectures are about hiding data.” (Stopford, 2016)

We need to design systems prioritising data!

Data-Oriented Architectures

Data-First Systems

- Data is available by design

- Traceability and monitoring

- Interpretability
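
The properties listed above can be illustrated with a shared, append-only log: components publish their data to it instead of hiding it behind service calls, so every record stays available and traceable. This is a minimal in-memory sketch under that assumption; `Record`, `SharedLog`, and their methods are hypothetical names, and a production system would rely on a durable, distributed log rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass(frozen=True)
class Record:
    offset: int              # Position in the log, assigned on append.
    producer: str            # Which component wrote the record.
    payload: Dict[str, Any]
    recorded_at: str         # UTC timestamp in ISO 8601 format.


@dataclass
class SharedLog:
    """Append-only log: data is exposed to every component by design."""

    _records: List[Record] = field(default_factory=list)

    def append(self, producer: str, payload: Dict[str, Any]) -> Record:
        record = Record(len(self._records), producer, dict(payload),
                        datetime.now(timezone.utc).isoformat())
        self._records.append(record)
        return record

    def read(self, from_offset: int = 0) -> List[Record]:
        """Any component can replay the data from a given offset."""
        return self._records[from_offset:]

    def trace(self, producer: str) -> List[Record]:
        """Traceability: everything a given component has written."""
        return [record for record in self._records if record.producer == producer]


if __name__ == "__main__":
    log = SharedLog()
    log.append("sensor", {"temperature": 21.5})
    log.append("model", {"prediction": 0.9})
    for record in log.read():
        print(record.offset, record.producer, record.payload)
```

Because inputs and predictions sit in the same log, monitoring and later interpretation can work from the recorded data rather than from each service's internal state.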

Prioritise Decentralisation

- Super-low latency requirements

- Privacy by design

Openness

- Sustainable solutions

- Data ownership

Conclusions

Overview

- Service-Oriented Architectures

- AI as a Service

- MLOps

- Data-Oriented Architectures

Course Overview

- AI History and ML Context

- Scientific Approach

- ML Adoption Process

- The Systems Engineering Approach

- Data Science Process and ML Pipeline

- AI as a Service

"It seems to me what is called for is an exquisite balance between two conflicting needs: the most skeptical scrutiny of all hypotheses that are served up to us and at the same time a great openness to new ideas. Obviously those two modes of thought are in some tension. But if you are able to exercise only one of these modes, whichever one it is, you’re in deep trouble." (The Burden of Skepticism, Sagan, 1987)

The systems engineering approach is better equipped than the ML community to facilitate the adoption of this technology by prioritising the problems and their context before any other aspects.
