Last Time

"It seems to me what is called for is an exquisite balance between two conflicting needs: the most skeptical scrutiny of all hypotheses that are served up to us and at the same time a great openness to new ideas. Obviously those two modes of thought are in some tension. But if you are able to exercise only one of these modes, whichever one it is, you’re in deep trouble." (The Burden of Skepticism, Sagan, 1987)

The ML Adoption Process

The Problem First

Important questions:

- What are the people's needs?

- Why is the problem important?

- What are the problem constraints?

- What are the important variables to consider?

- What are the relevant metrics?

- What is the data we need?

- Do we need ML?

- ...

The systems engineering approach is better equipped than the ML community to facilitate the adoption of this technology, because it prioritises the problem and its context before any other aspect.

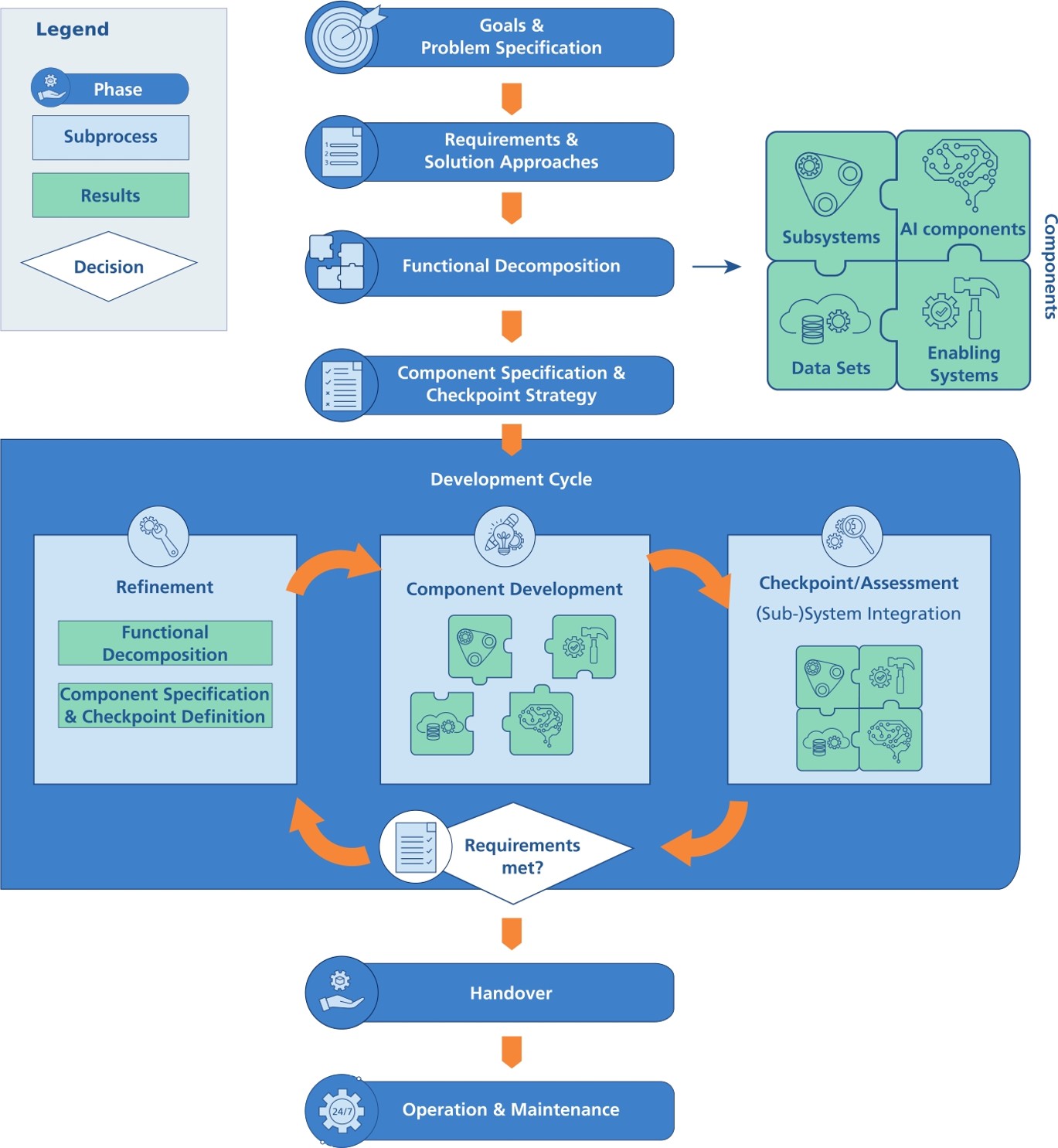

The Systems Engineering Approach

The Systems Engineering Approach in Times of LLMs

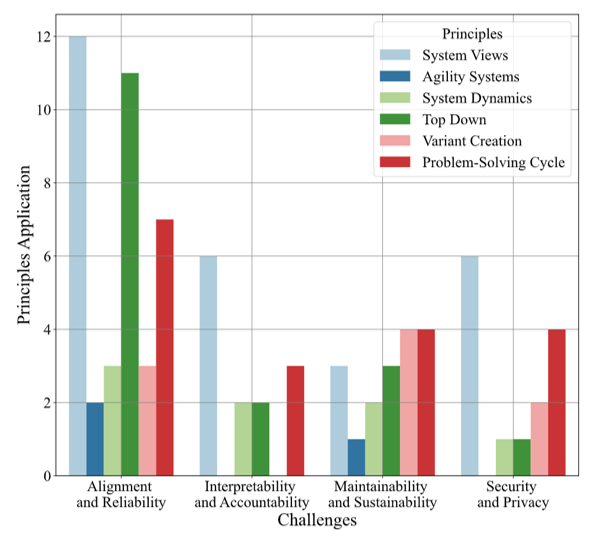

How does current research use the systems engineering approach to address challenges similar to the ones LLMs impose on socio-technical systems?

LLM Application Challenges

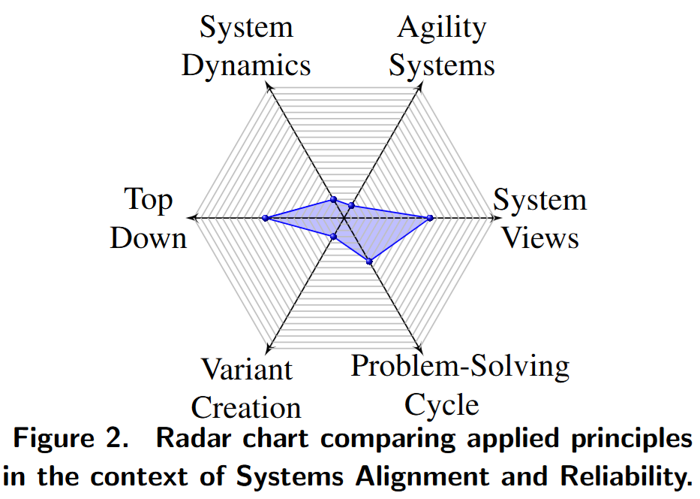

- Alignment and reliability

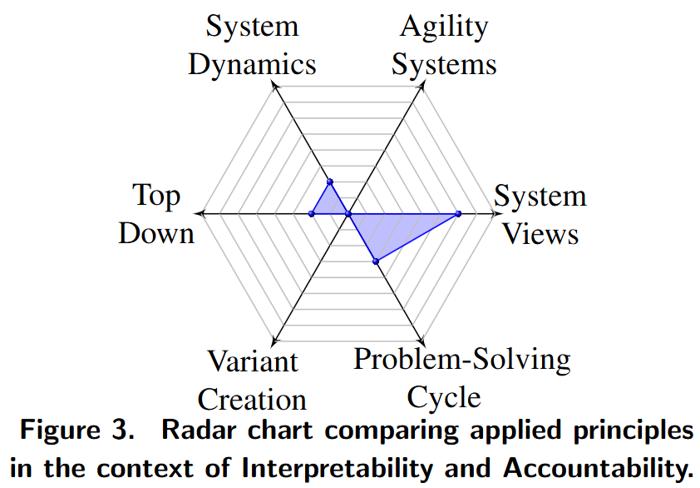

- Interpretability and accountability

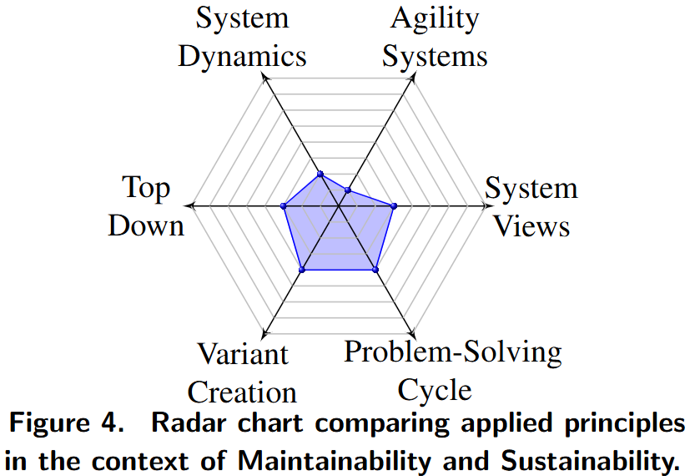

- Maintainability and sustainability

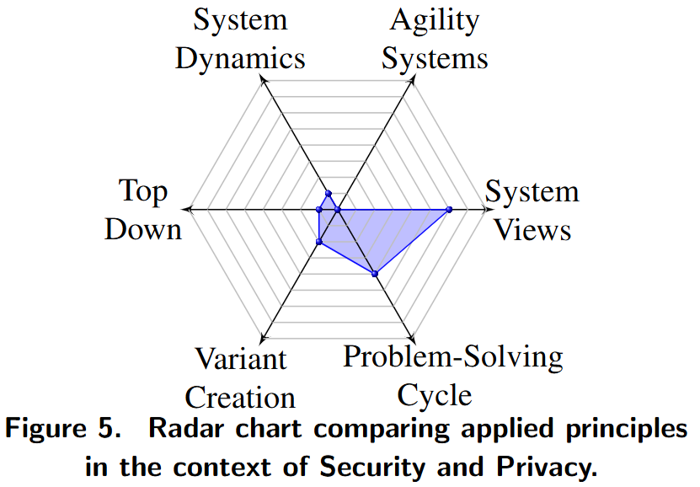

- Security and privacy

Research Question: How can we address these challenges to deploy LLMs into socio-technical systems effectively and safely?

Hypothesis: The Systems Engineering approach can help by prioritising the problem and its context before any solution.

A survey of research works that apply systems engineering principles to address these challenges when deploying AI-based systems.

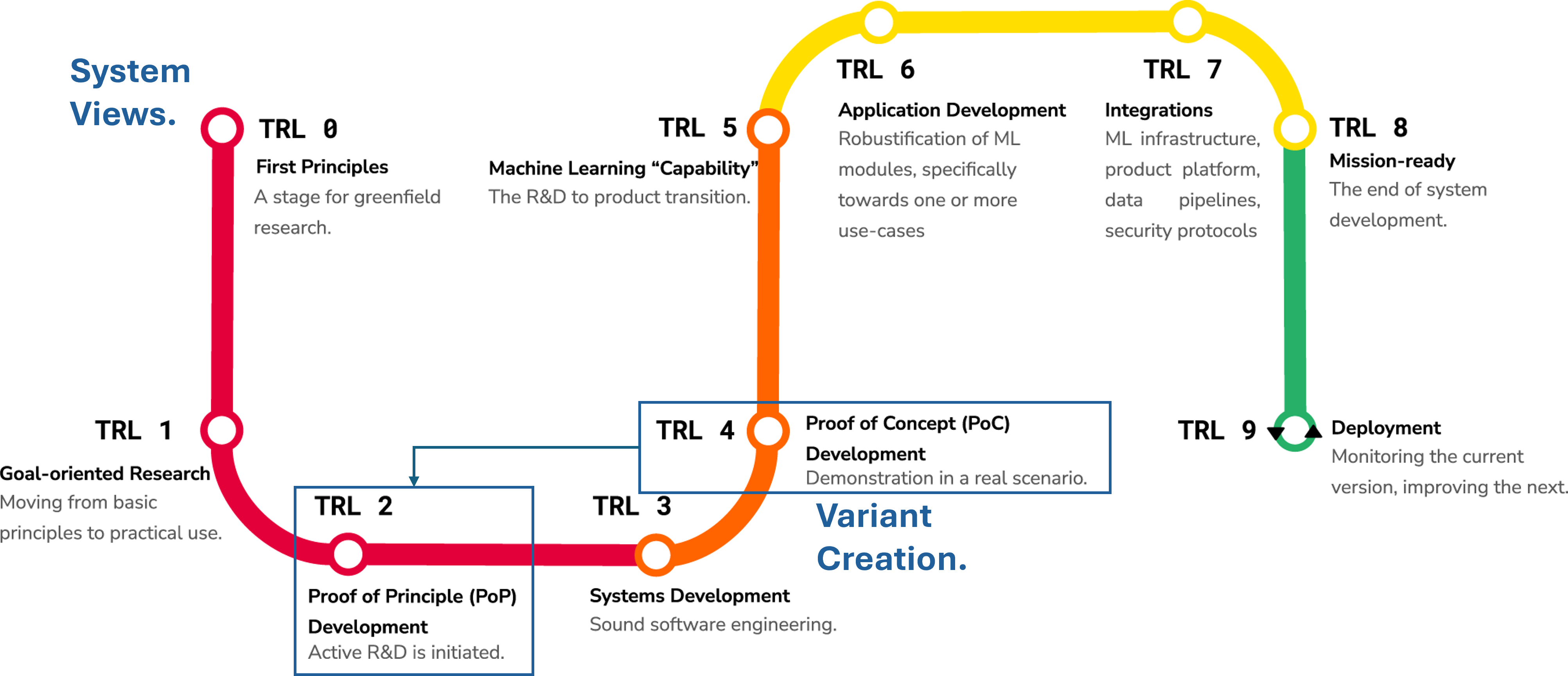

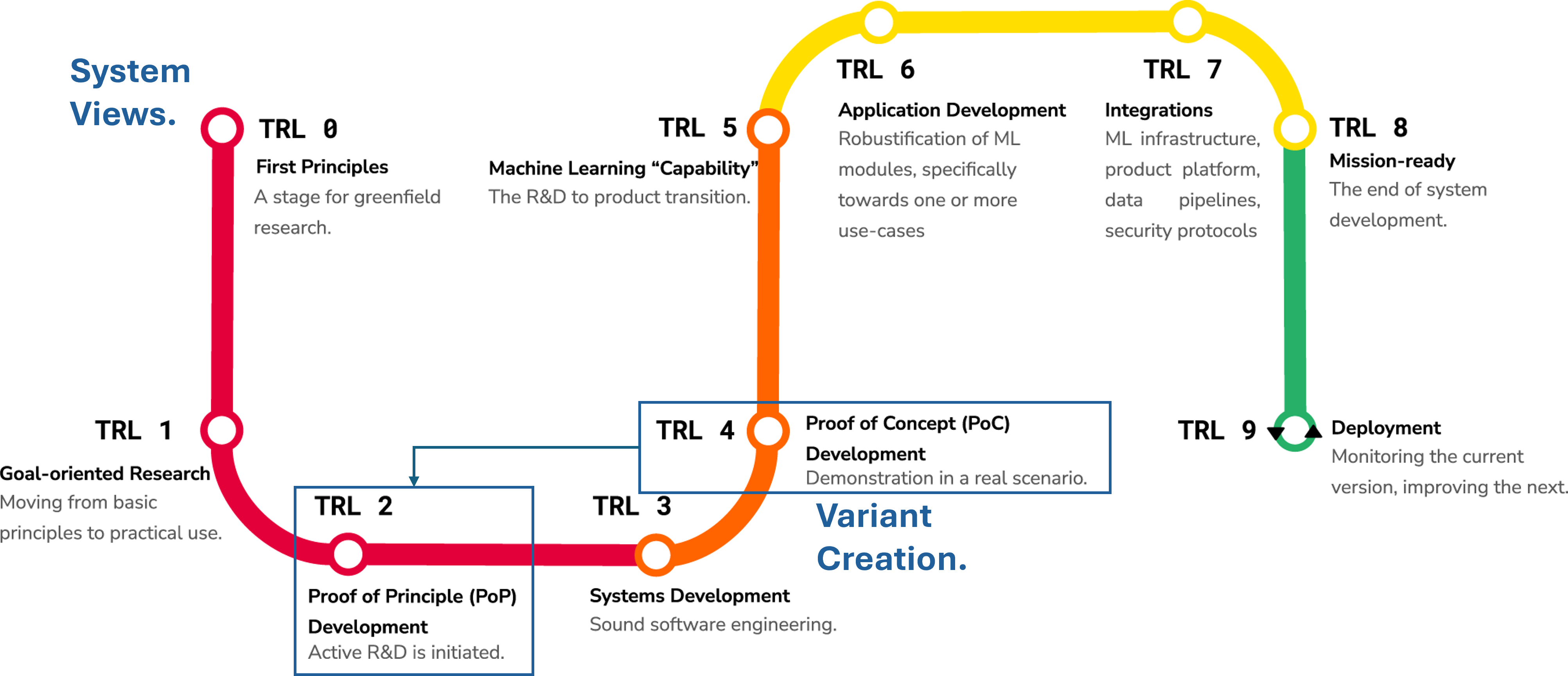

MLTRL - Technology Readiness Levels for Machine Learning Systems

Learn more at (Lavin et al., 2022)

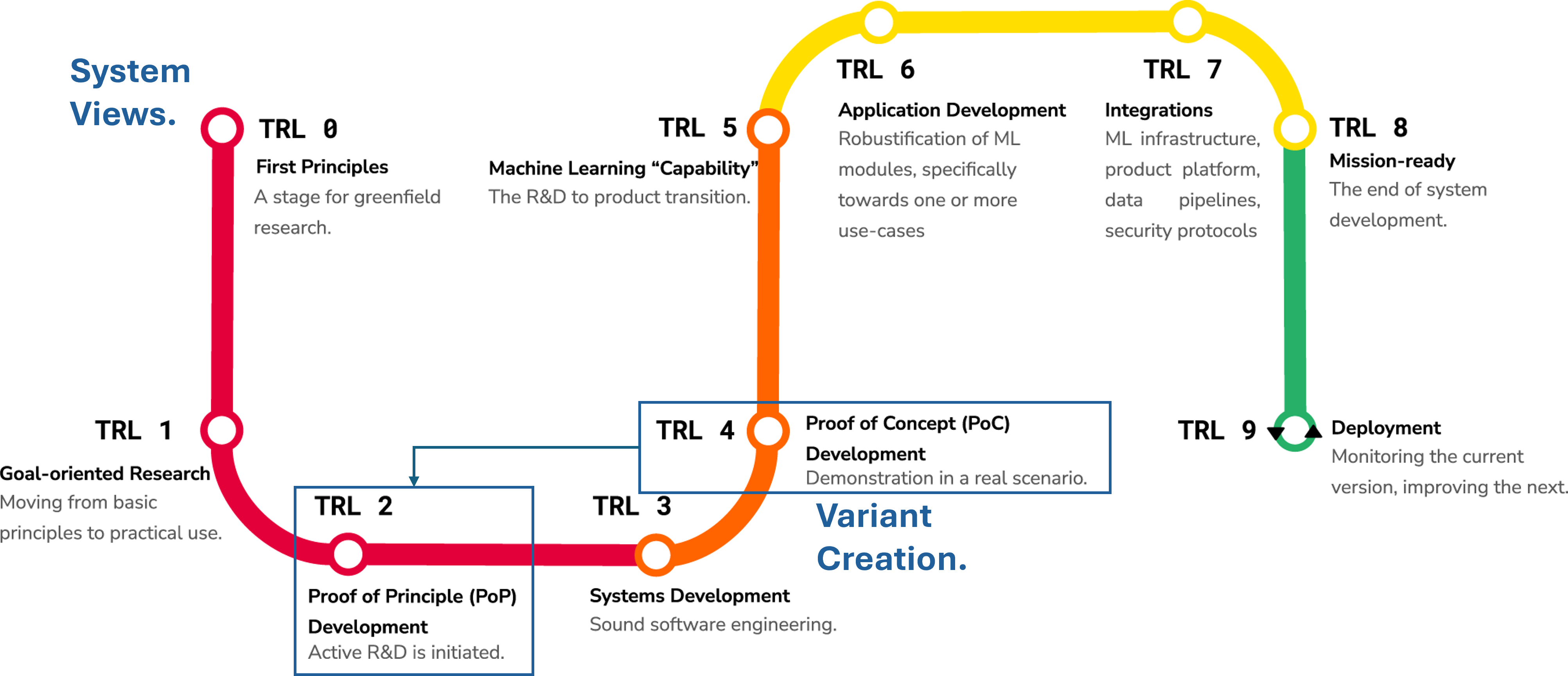

PAISE® – Process Model for AI Systems Engineering

Learn more at (Hasterok & Stompe, 2022)

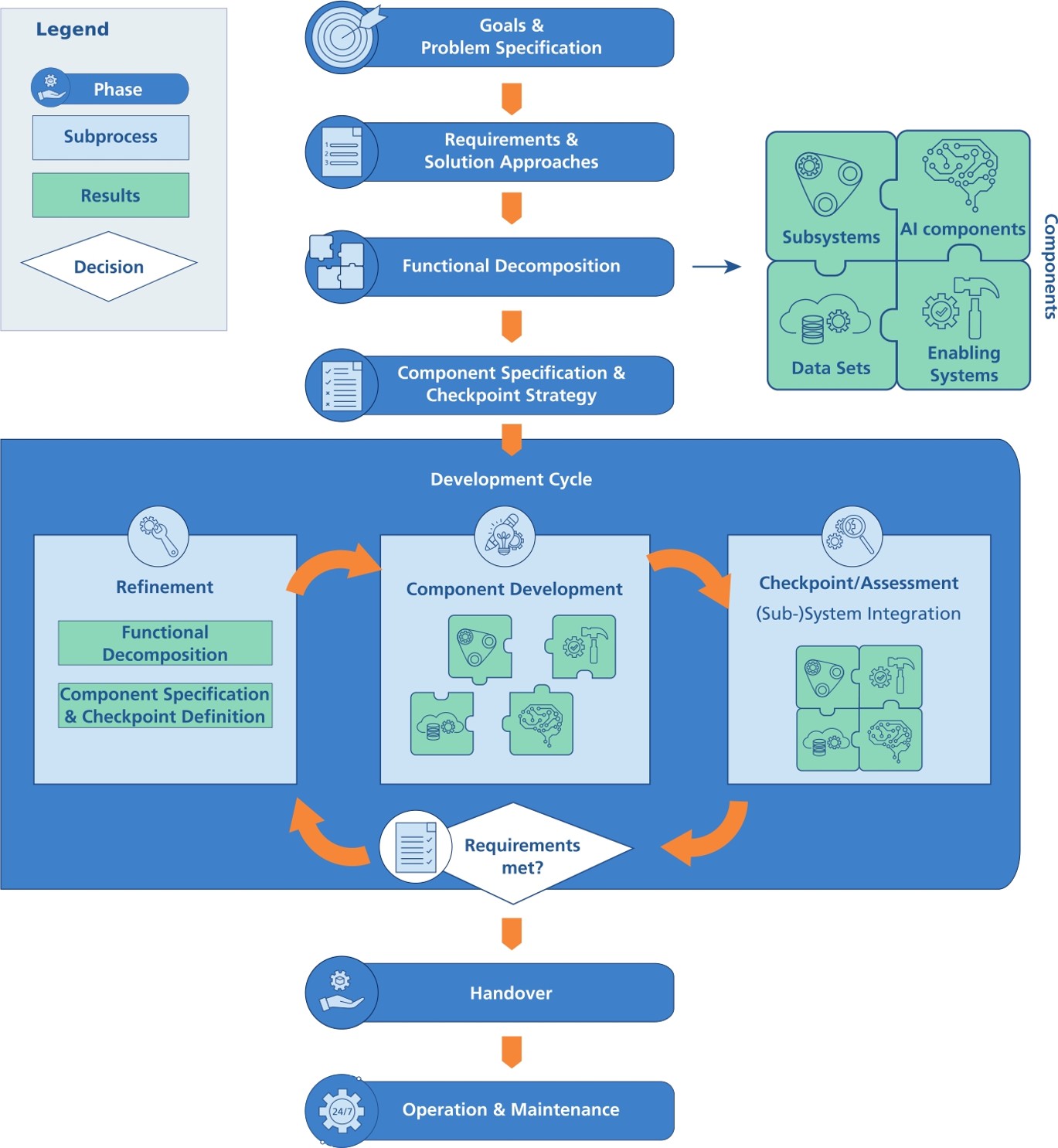

ACDANS – System of Systems Engineering Approach for Complex Deterministic and Nondeterministic Systems

Learn more at (Hershey, 2021)

These survey results contrast with the way we work today:

"Move Fast and Break Things" (Zuckerberg, 2014)

- Move fast and deliver working software

- Embrace failure as a learning opportunity

- Prioritise speed and agility

- ...

Inserting ML components into our software systems lowers the bar for these systems to qualify as critical systems. Learn more at (Cabrera et al., 2025).

We need to be careful when designing, developing, deploying, and decommissioning ML-based systems.

The Data Science Process

Machine Learning Pipeline

ML Pipeline vs ML-based System

Data Access

Does the data even exist?

No, it does not exist!

Primary Data Collection

- Questionnaires

- Interviews

- Focus group interviews

- Surveys

- Case studies

- Process analysis

- Experimental method

- Statistical method

- ...

Challenges:

- Expensive data collection processes

- Each method follows a particular methodology

- No single method fits all needs

Yes, it does exist!

Secondary Data Collection

- Published printed sources

  - Books, journals, magazines, newspapers

- Government records

  - Census data

  - Public sector records

- Electronic sources (e.g., public datasets, websites)

- ...

Challenges:

- Validity and reliability concerns

- Outdated data

- Relevance issues

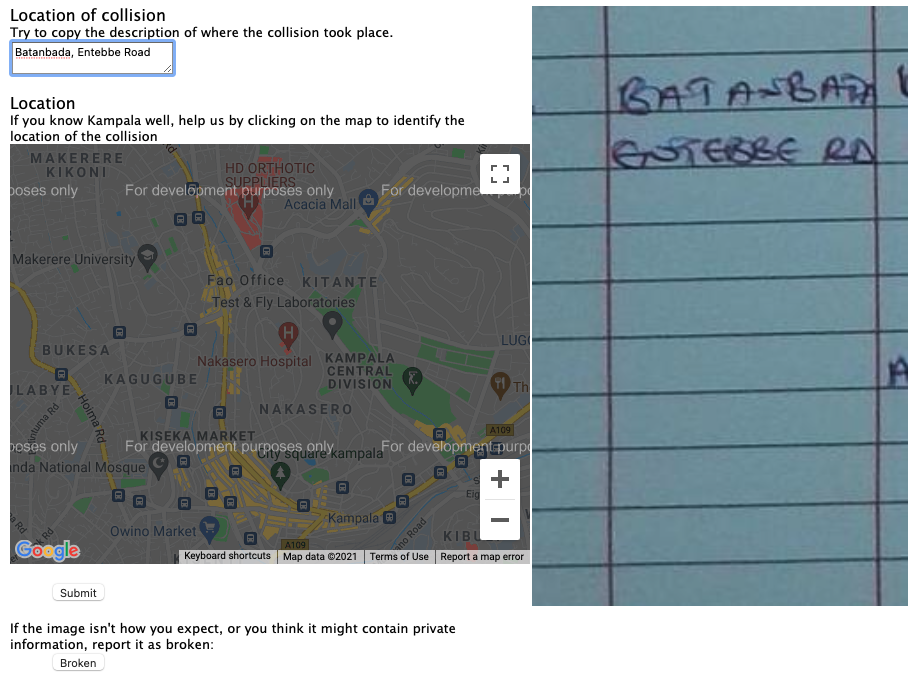

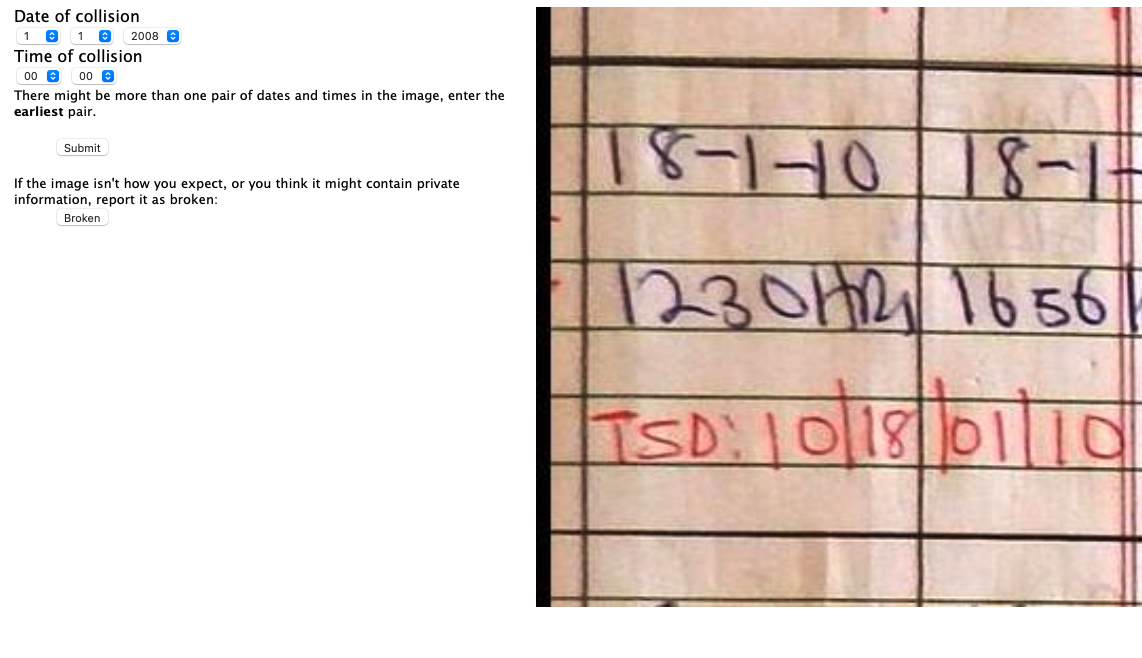

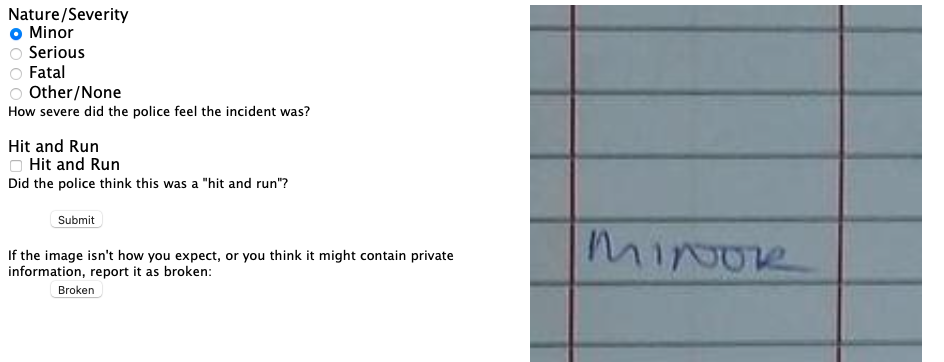

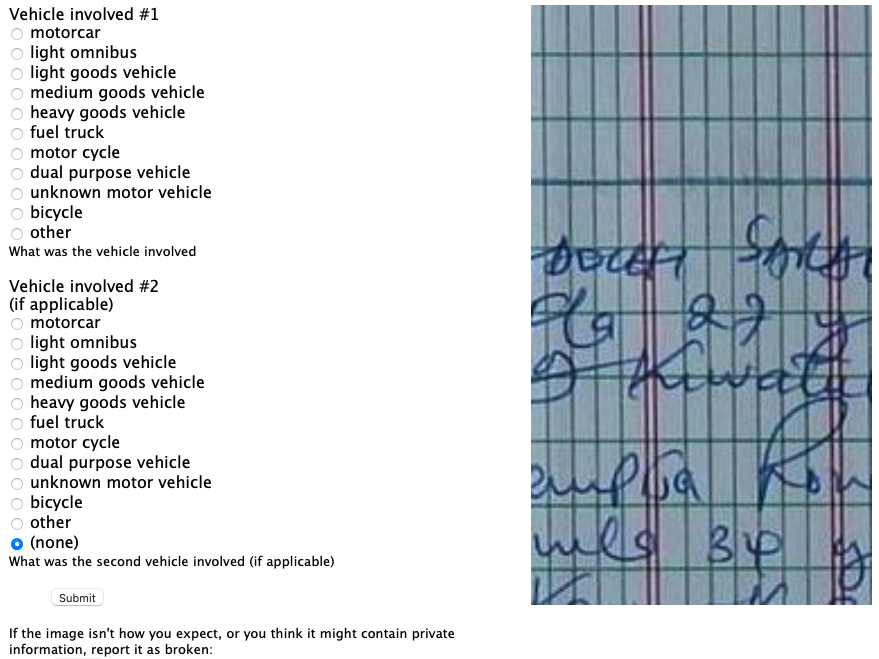

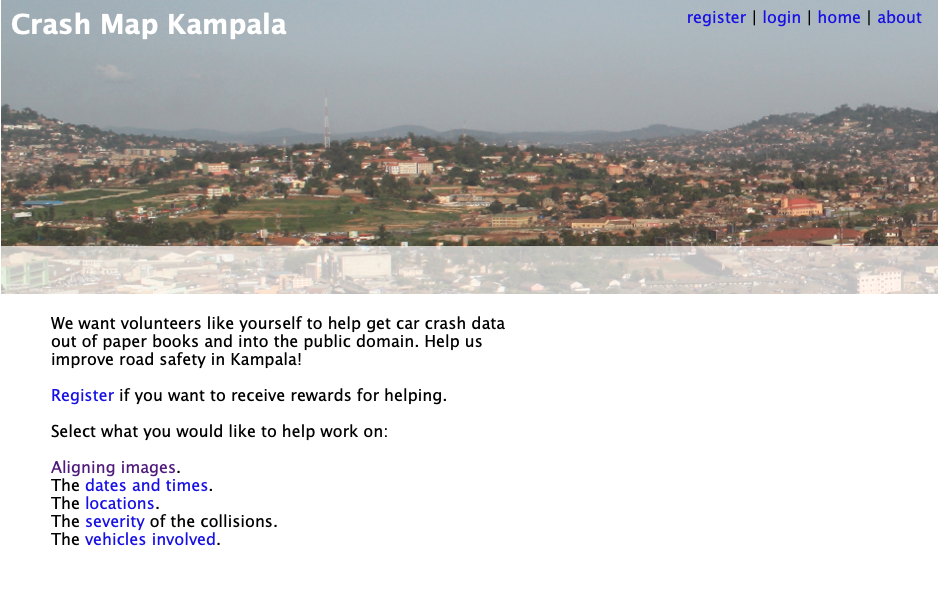

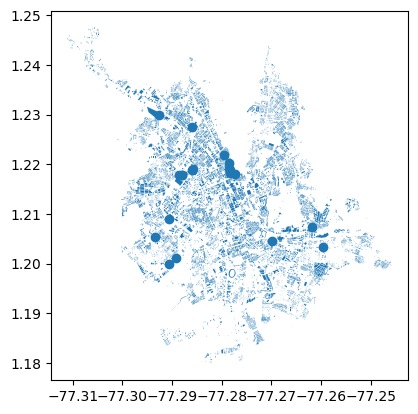

Crash Map Kampala

- Road traffic accidents are a leading cause of death among young people in many contexts

- Data was difficult to access, which made any analysis of the problem impossible

Dataset creation is a critical process that cannot be skipped if we want to implement Data Science or ML projects

We can assume that datasets already exist, but they will likely only enable toy projects that are not relevant to our society

Using your own files

The most common format is comma-separated values (CSV) files.

import pandas as pd

dataset = pd.read_csv('path/to/your/dataset.csv')

print("Dataset shape:", dataset.shape)

print("\nFirst few rows:")

print(dataset.head())
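To try `read_csv` without a file on disk, the minimal sketch below parses a small CSV held in memory. The column names and values are made up for illustration; `parse_dates` converts a text column into datetimes, and `isna().sum()` gives a quick count of missing values per column.

```python
import io

import pandas as pd

# Made-up CSV content; in practice this would come from a file on disk
csv_text = """date,city,price
2020-01-15,Leeds,185000
2020-02-03,York,
2020-02-20,Leeds,210500
"""

# parse_dates turns the 'date' column into datetime64 values
dataset = pd.read_csv(io.StringIO(csv_text), parse_dates=['date'])

print("Dataset shape:", dataset.shape)
print(dataset.dtypes)
# Count missing values per column (the second row has no price)
print(dataset.isna().sum())
```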

Using built-in datasets

Different dataset repositories are available online, for example OpenML, an open source platform for sharing datasets and experiments, or TensorFlow Datasets.

- The Iris dataset is a built-in dataset in scikit-learn

- It contains 150 samples of iris flowers, each with 4 features

- The target variable is the species of the iris flower

- It is commonly used for classification tasks

- Look at the dataset documentation, such as the Iris dataset documentation

from sklearn.datasets import fetch_openml

iris = fetch_openml(name='iris', version=1, as_frame=True)

print("Iris dataset shape:", iris.data.shape)

print("\nFirst few rows:")

print(iris.data.head())
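`fetch_openml` downloads the data over the network. As an offline alternative, a copy of Iris ships with scikit-learn and can be loaded with `load_iris`; a minimal sketch:

```python
from sklearn.datasets import load_iris

# as_frame=True returns pandas objects instead of NumPy arrays
iris = load_iris(as_frame=True)

print("Iris dataset shape:", iris.data.shape)  # 150 rows, 4 features
print("Classes:", list(iris.target_names))
# Number of samples per species (the dataset is balanced)
print(iris.target.value_counts().sort_index())
```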

- Another popular dataset: CIFAR-10, available through Keras (tf.keras.datasets)

- Contains 60,000 32x32 colour images (50,000 for training and 10,000 for testing)

- 10 different classes

- Commonly used for image classification

import tensorflow as tf

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

print("Training data shape:", x_train.shape)

print("Test data shape:", x_test.shape)

Accessing data via APIs

- APIs are interfaces that allow different systems to communicate with each other

- Servers expose these interfaces

- Clients consume these interfaces (i.e., client-server architecture)

- They typically communicate over the internet (e.g., using HTTP)

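import requests

url = '<url_of_the_dataset>'
file_name = '<local_file_name>'  # placeholder: local path for the downloaded file

response = requests.get(url, timeout=60)

# Status code 200 means the server returned the requested resource
if response.status_code == 200:
    with open(file_name, "wb") as file:  # "wb": write the raw bytes
        file.write(response.content)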

- The UK Price Paid data for housing dates back to 1995 and contains millions of transactions

- This database is available at gov.uk

- The full dataset is over 4 gigabytes in size and is available as a single file or as multiple files split by year and half-year

- The example downloads the data for the first half of 2020

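import requests
import pandas as pd

url = 'http://prod.publicdata.landregistry.gov.uk.s3-website-eu-west-1.amazonaws.com/pp-2020-part1.csv'
file_name = 'pp-2020-part1.csv'

response = requests.get(url, timeout=300)
if response.status_code == 200:
    with open(file_name, "wb") as file:
        file.write(response.content)

# The Price Paid CSV files have no header row, so we name the 16 fields ourselves
# (illustrative snake_case names, in the field order documented by HM Land Registry)
pp_cols = ['transaction_id', 'price', 'date_of_transfer', 'postcode', 'property_type',
           'new_build', 'tenure', 'paon', 'saon', 'street', 'locality', 'town_city',
           'district', 'county', 'ppd_category', 'record_status']
dataset = pd.read_csv(file_name, header=None, names=pp_cols)

print("Dataset shape:", dataset.shape)
print("\nFirst few rows:")
print(dataset.head())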

- The Open Postcode Geo dataset provides additional information about the houses' locations

- It is a dataset of British postcodes with easting, northing, latitude, and longitude, plus additional fields for geospatial applications, including postcode area, postcode district, postcode sector, incode, and outcode

- The download is a ZIP archive that must be unzipped

import io
import zipfile

import requests
import pandas as pd

url = 'https://www.getthedata.com/downloads/open_postcode_geo.csv.zip'

response = requests.get(url, timeout=300)
if response.status_code == 200:
    # Read the ZIP archive from memory and extract its contents into a folder
    with zipfile.ZipFile(io.BytesIO(response.content)) as zip_ref:
        zip_ref.extractall('open_postcode_geo')

# Check the dataset documentation for the column layout: if the CSV has no
# header row, pass header=None and names=[...] to read_csv
dataset = pd.read_csv('open_postcode_geo/open_postcode_geo.csv')

print("Dataset shape:", dataset.shape)
print("\nFirst few rows:")
print(dataset.head())

- OpenStreetMap (OSM) is a collaborative project to create a free, editable map of the world

- OSM enables the creation of custom maps, geospatial analysis, and location-based services

- Its data is open and anyone can access it

- The data lacks the tabular structure we are used to: map features are described by free-form key-value tags (e.g., amenity=school)

- We need to install the osmnx Python package first

%pip install osmnx

- This example shows how to download the points of interest (POIs) of Pasto, Nariño, Colombia, using Python

import osmnx as ox

import pandas as pd

place = "Pasto, Nariño, Colombia"

pois = ox.features_from_place(place, tags={'amenity': True})

pois_df = pd.DataFrame(pois)

print(f"Number of POIs found: {len(pois_df)}")

print("\nSample of POIs:")

print(pois_df[['amenity', 'name']].head())

pois_df.to_csv('pasto_pois.csv', index=False)

- We can also plot the city buildings

import osmnx as ox

import pandas as pd

place = "Pasto, Nariño, Colombia"

buildings = ox.features_from_place(place, tags={'building': True})

buildings.plot()

Joining datasets

We can join multiple datasets to enrich the data available to us.

For example, the postcode dataset can enrich the UK Price Paid data with coordinate information (latitude and longitude). We join these datasets on the attribute they have in common (i.e., the postcode).

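import pandas as pd

# Price Paid files have no header row; illustrative names in HM Land Registry field order
pp_cols = ['transaction_id', 'price', 'date_of_transfer', 'postcode', 'property_type',
           'new_build', 'tenure', 'paon', 'saon', 'street', 'locality', 'town_city',
           'district', 'county', 'ppd_category', 'record_status']
price_paid = pd.read_csv('pp-2020-part1.csv', header=None, names=pp_cols)

# Assumes this file provides 'postcode', 'latitude' and 'longitude' columns
postcodes = pd.read_csv('open_postcode_geo/open_postcode_geo.csv')

# Inner join: keep only transactions whose postcode appears in both datasets
merged_data = pd.merge(
    price_paid,
    postcodes,
    on='postcode',
    how='inner'
)

print("Original Price Paid dataset shape:", price_paid.shape)
print("Original Postcodes dataset shape:", postcodes.shape)
print("Merged dataset shape:", merged_data.shape)
print("\nSample of merged data:")
print(merged_data[['postcode', 'price', 'latitude', 'longitude']].head())

merged_data.to_csv('price_paid_with_coordinates.csv', index=False)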
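Join keys must match exactly, so formatting differences (case, extra spaces) silently drop rows from an inner join. The minimal sketch below uses made-up rows standing in for the two datasets: it normalises the postcode strings before merging, and uses a left join with `indicator=True` to reveal which transactions found no coordinates. The helper `normalise_postcode` is hypothetical.

```python
import pandas as pd

# Made-up rows standing in for the two real datasets
price_paid = pd.DataFrame({
    'postcode': ['cb2 1tn', 'CB3  0FD', 'ZZ9 9ZZ'],
    'price': [450000, 320000, 150000],
})
postcodes = pd.DataFrame({
    'postcode': ['CB2 1TN', 'CB3 0FD'],
    'latitude': [52.2016, 52.2100],
    'longitude': [0.1184, 0.0940],
})

def normalise_postcode(series):
    """Upper-case and collapse repeated whitespace so equal postcodes compare equal."""
    return series.str.upper().str.split().str.join(' ')

price_paid['postcode'] = normalise_postcode(price_paid['postcode'])
postcodes['postcode'] = normalise_postcode(postcodes['postcode'])

# A left join keeps every transaction; '_merge' records whether a match was found
merged = pd.merge(price_paid, postcodes, on='postcode', how='left', indicator=True)
print(merged)
print(merged['_merge'].value_counts())
```

Rows marked `left_only` are transactions the inner join would have discarded; checking their count before switching to `how='inner'` shows how much data the join loses.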

Web scraping datasets

- Get the text from web pages

- Operate a web browser programmatically

- Parse the content, which can be unstructured

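import requests
from bs4 import BeautifulSoup

def scrape_paragraphs(url):
    """Return the text of every <p> element on the page, or None on error."""
    # Some websites reject requests that do not look like they come from a browser
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
    }
    try:
        response = requests.get(url, headers=headers, timeout=30)
        response.raise_for_status()  # raise an error for 4xx/5xx responses
        soup = BeautifulSoup(response.text, 'html.parser')
        data = [p.text for p in soup.find_all('p')]
        return data
    except Exception as e:
        print(f"Error scraping data: {str(e)}")
        return None

url = '<url_of_the_website>'
data = scrape_paragraphs(url)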
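The parsing step can be tried offline on a small HTML string. The minimal sketch below swaps BeautifulSoup for the standard library's `html.parser.HTMLParser`, so it needs no extra packages, and collects the text of each `<p>` element. The class name `ParagraphExtractor` and the HTML snippet are made up for illustration.

```python
from html.parser import HTMLParser

class ParagraphExtractor(HTMLParser):
    """Collect the text inside <p> elements (a standard-library stand-in for BeautifulSoup)."""

    def __init__(self):
        super().__init__()
        self.in_p = False
        self.paragraphs = []
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == 'p':
            self.in_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == 'p' and self.in_p:
            self.paragraphs.append(''.join(self._buffer).strip())
            self.in_p = False

    def handle_data(self, data):
        # Text from nested tags such as <b> is kept while we are inside a <p>
        if self.in_p:
            self._buffer.append(data)

html = """
<html><body>
  <h1>Road safety report</h1>
  <p>Crashes rose in <b>2020</b>.</p>
  <div>Navigation menu</div>
  <p>Data collection is ongoing.</p>
</body></html>
"""

parser = ParagraphExtractor()
parser.feed(html)
print(parser.paragraphs)
```

Text outside `<p>` elements (the heading and the menu) is ignored, which is how unstructured pages are narrowed down to the content we want.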

Creating synthetic datasets

- Mimics real-world variables and their relations

- Randomised generation of features

- Generation of target variables, representing feature relationships

- Relationships are defined using experts' domain knowledge

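import numpy as np

np.random.seed(42)  # reproducible random features
n_samples, n_features = 1000, 4  # illustrative sizes; the rules below use 4 features

# Randomised generation of features from a standard normal distribution
X = np.random.randn(n_samples, n_features)

# Target generated from hand-written rules that stand in for domain knowledge
y = np.zeros(n_samples)
for i in range(n_samples):
    if X[i, 0] + X[i, 1] > 0:
        y[i] = 0
    elif X[i, 2] * X[i, 3] > 0:
        y[i] = 1
    else:
        y[i] = 2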
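scikit-learn also provides generators for synthetic data. As a sketch, `make_classification` creates informative features and labels from Gaussian clusters; unlike hand-written rules, its relationships are random rather than derived from domain knowledge. The parameter values below are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification

X, y = make_classification(
    n_samples=500,
    n_features=4,
    n_informative=3,   # features that actually carry class information
    n_redundant=0,     # no linear combinations of informative features
    n_classes=3,
    random_state=0,    # fixed seed for reproducibility
)

print("Features shape:", X.shape)
print("Class counts:", np.bincount(y))
```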

Data Assess

After collecting the data (i.e., data access), we need to perform a data assessment process to understand the data, identify and mitigate data quality issues, uncover patterns, and gain insights.

Data Cleaning

Process of detecting and correcting (or removing) corrupt or inaccurate records.

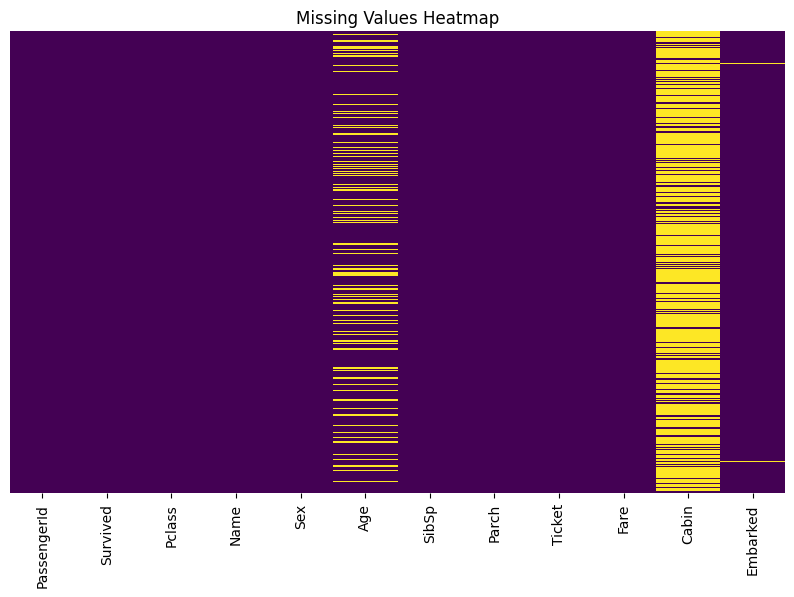

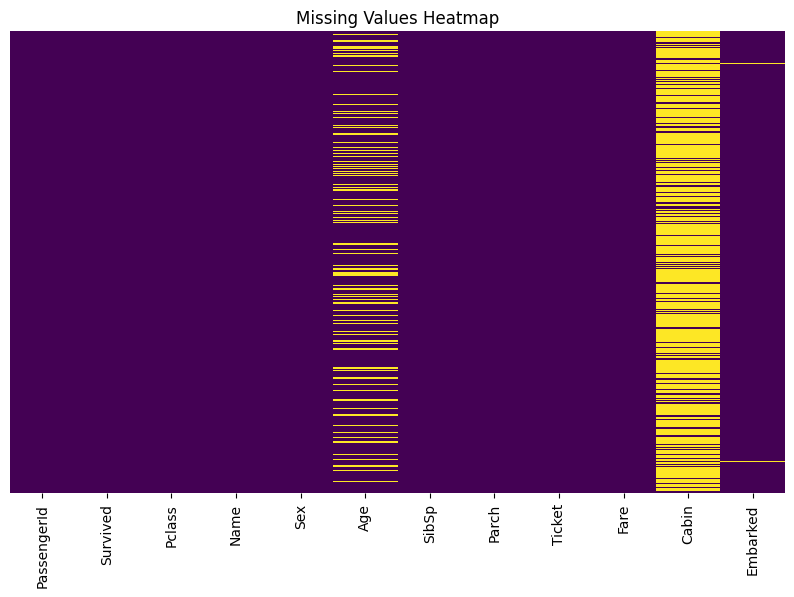

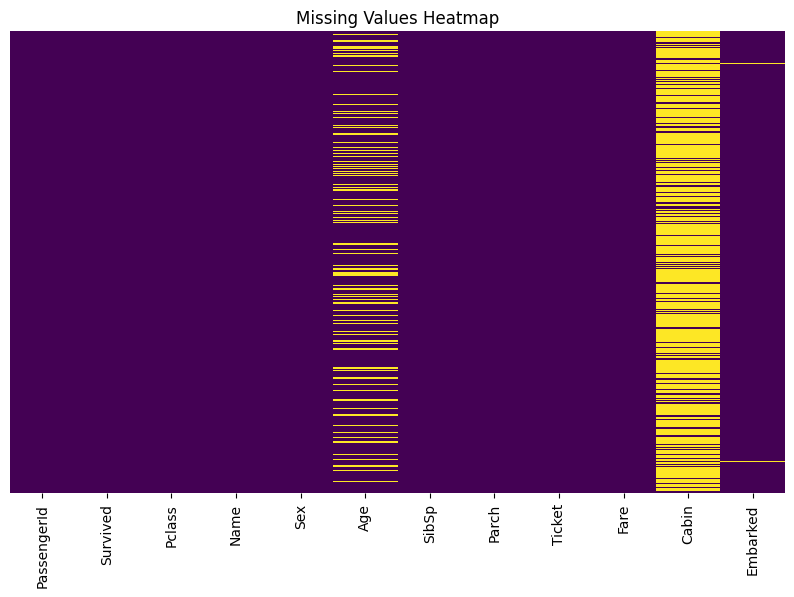

Missing Values

Missing data points in the dataset, which can result from:

- Data collection errors

- System failures

- Information not available

- Data entry mistakes

Strategies for handling missing values:

- Deletion: Remove rows or columns with missing values

- Imputation: Fill missing values with estimated values

- Advanced techniques: Use machine learning models to predict missing values
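The first two strategies take a line each in pandas and scikit-learn. In this minimal sketch on a made-up table, `dropna` deletes incomplete rows, while `SimpleImputer` replaces each missing value with its column's median.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Made-up data with missing values (NaN)
df = pd.DataFrame({
    'age': [22.0, np.nan, 35.0, 58.0],
    'fare': [7.25, 71.28, np.nan, 26.55],
})

# Deletion: keep only the rows with no missing values
df_deleted = df.dropna()

# Imputation: fill each column's gaps with that column's median
imputer = SimpleImputer(strategy='median')
df_imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns)

print(df_deleted)
print(df_imputed)
```

Deletion halves this small table, whereas imputation keeps every row at the cost of inventing values; the median is often preferred to the mean because it is less affected by outliers.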

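from sklearn.linear_model import LinearRegression

# Assumes titanic_data is a pandas DataFrame with the Titanic columns, loaded earlier
# The predictor columns are assumed to have no missing values
features_for_age = ['Pclass', 'SibSp', 'Parch', 'Fare']

# Train the imputer on the rows where 'Age' is known
X_train = titanic_data.dropna(subset=['Age'])[features_for_age]
y_train = titanic_data.dropna(subset=['Age'])['Age']
reg_imputer = LinearRegression()
reg_imputer.fit(X_train, y_train)

# Predict 'Age' for the rows where it is missing
X_missing = titanic_data[titanic_data['Age'].isnull()][features_for_age]
predicted_ages = reg_imputer.predict(X_missing)

# Fill the gaps in a copy and store the result as a new column
titanic_data_reg = titanic_data.copy()
titanic_data_reg.loc[titanic_data_reg['Age'].isnull(), 'Age'] = predicted_ages
titanic_data['Age_Regression'] = titanic_data_reg['Age']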

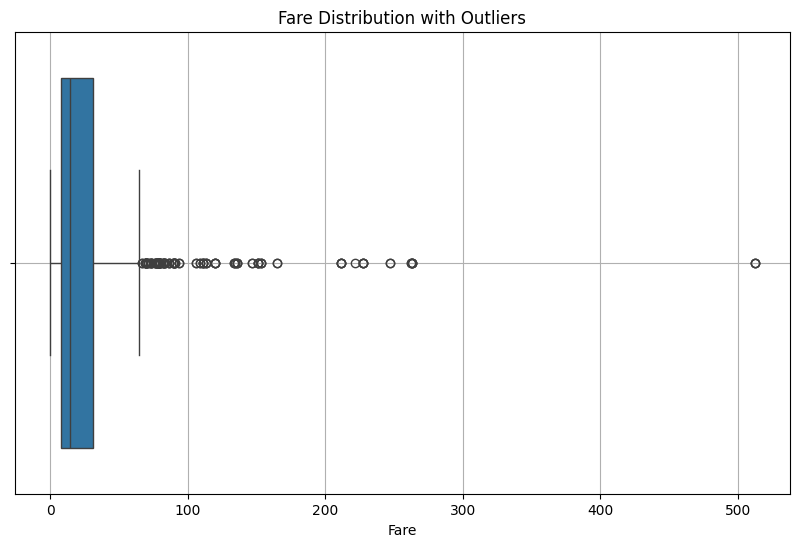

Outliers

Data points that significantly deviate from the rest of the data, which can result from:

- Measurement errors

- Data entry mistakes

- Rare but valid observations

- System malfunctions

Strategies for handling outliers:

- Capping: Limit values to a range

- Log Transformation: Reduce the impact of extreme values

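def cap_outliers(df, column):
    # Quartiles and interquartile range (IQR) of the column
    Q1 = df[column].quantile(0.25)
    Q3 = df[column].quantile(0.75)
    IQR = Q3 - Q1
    # Values more than 1.5 * IQR beyond the quartiles are treated as outliers
    lower_bound = Q1 - 1.5 * IQR
    upper_bound = Q3 + 1.5 * IQR
    # Clip (cap) values to the bounds and store them in a new column
    df[column + '_capped'] = df[column].clip(lower=lower_bound, upper=upper_bound)
    return df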
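The minimal sketch below applies both strategies to a made-up price column with one extreme value. The capping logic is repeated from `cap_outliers` so the block runs on its own and clips to the 1.5 × IQR fences; `np.log1p` (the log of 1 + x, safe for zeros) compresses large values instead of clipping them.

```python
import numpy as np
import pandas as pd

def cap_outliers(df, column):
    # IQR fences: values outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR] are capped
    q1 = df[column].quantile(0.25)
    q3 = df[column].quantile(0.75)
    iqr = q3 - q1
    df[column + '_capped'] = df[column].clip(lower=q1 - 1.5 * iqr, upper=q3 + 1.5 * iqr)
    return df

# Made-up prices with one extreme value
df = pd.DataFrame({'price': [100, 120, 130, 150, 160, 10000]})
df = cap_outliers(df, 'price')

# Log transformation keeps every value but shrinks the gap to the extreme one
df['price_log'] = np.log1p(df['price'])
print(df)
```

Here Q1 = 122.5 and Q3 = 157.5, so the upper fence is 157.5 + 1.5 × 35 = 210 and the value 10000 is capped to 210. Capping discards how extreme the value was; the log transform keeps its order relative to the other values.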

Data Preprocessing

Process of transforming raw data into a format suitable for machine learning while ensuring data quality and consistency.

Feature Scaling

Transforming numerical features to a common scale, so that:

- Distance-based algorithms (e.g., k-nearest neighbours) compare features on equal terms

- Gradient-based algorithms converge faster

- Features with larger scales do not dominate the model

Standardisation (Z-score): Centres data around 0 with unit variance

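from sklearn.preprocessing import StandardScaler

# Assumes `data` is a DataFrame and `num_feat` lists its numerical column names
scaler = StandardScaler()
cols = [col + '_st' for col in num_feat]  # names for the standardised copies
data[cols] = scaler.fit_transform(data[num_feat])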
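Standardisation computes z = (x - μ) / σ for each column, where μ is the column mean and σ its standard deviation. The minimal sketch below, on made-up values, checks that `StandardScaler` matches this formula; note that it uses the population standard deviation (`ddof=0`).

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up numerical features on very different scales (e.g., age and income)
X = np.array([[25.0, 30000.0],
              [35.0, 52000.0],
              [45.0, 61000.0],
              [55.0, 90000.0]])

# z = (x - mean) / std, column by column (NumPy's std uses ddof=0 by default)
z_manual = (X - X.mean(axis=0)) / X.std(axis=0)

z_sklearn = StandardScaler().fit_transform(X)

print(np.allclose(z_manual, z_sklearn))  # True
print(z_sklearn.mean(axis=0).round(6), z_sklearn.std(axis=0).round(6))
```

In a real pipeline the scaler is fitted on the training split only and then applied to the test split, so that statistics from the test data do not leak into training.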

Min-Max scaling: Scales data to a fixed range [0,1]

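from sklearn.preprocessing import MinMaxScaler

# Assumes `data` is a DataFrame and `num_feat` lists its numerical column names
scaler = MinMaxScaler()
cols = [col + '_minmax' for col in num_feat]  # names for the scaled copies
data[cols] = scaler.fit_transform(data[num_feat])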
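Min-max scaling computes x' = (x - min) / (max - min) per column, mapping the smallest value to 0 and the largest to 1. A minimal sketch on made-up values, compared with `MinMaxScaler`:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up numerical features (e.g., age and income)
X = np.array([[25.0, 30000.0],
              [35.0, 52000.0],
              [45.0, 61000.0],
              [55.0, 90000.0]])

# x' = (x - min) / (max - min), column by column
x_min, x_max = X.min(axis=0), X.max(axis=0)
mm_manual = (X - x_min) / (x_max - x_min)

mm_sklearn = MinMaxScaler().fit_transform(X)

print(np.allclose(mm_manual, mm_sklearn))  # True
print(mm_sklearn[:, 0])  # first column: 0, 1/3, 2/3, 1
```

Because min and max come from single observations, one extreme value squeezes all the others into a narrow band; handling outliers first (e.g., by capping) avoids this.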

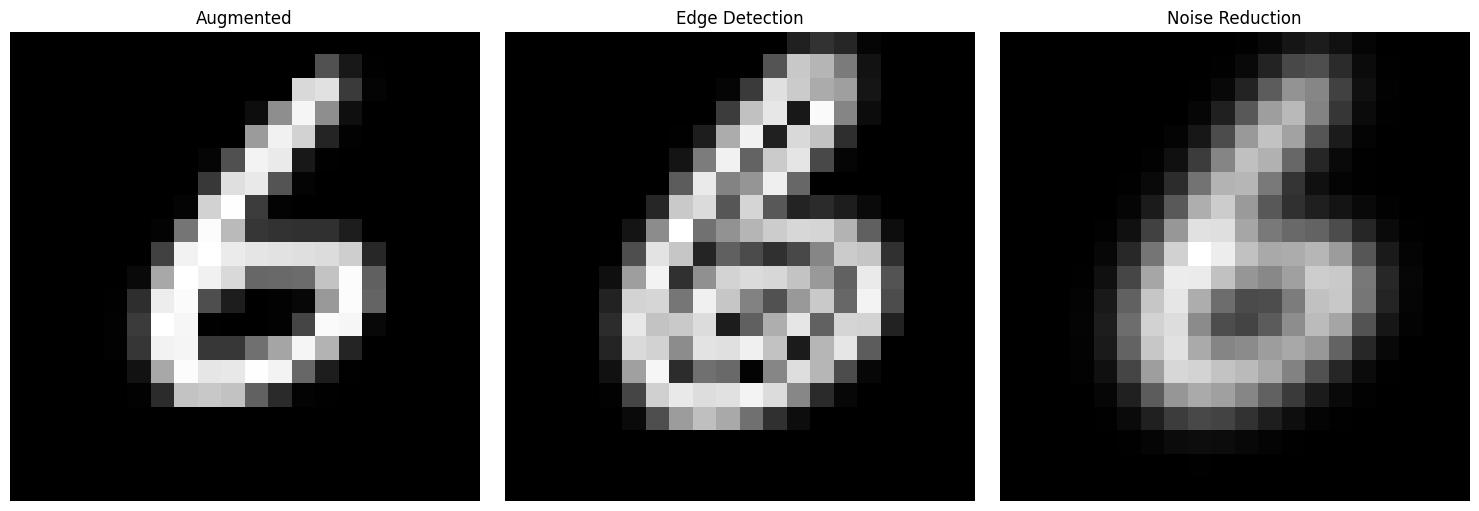

Data Augmentation

Process of increasing the size and diversity of our datasets.

Increasing diversity to make our models more robust

- Adding controlled noise

- Random rotations

- ...

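import numpy as np
from skimage.transform import rotate  # assumed source of rotate(); it supports mode='edge'

def augment_image(img, angle_range=(-15, 15)):
    # Assumes img is a flattened 20x20 greyscale image with values in [0, 1]
    angle = np.random.uniform(angle_range[0], angle_range[1])
    # Rotate by a random angle in degrees; 'edge' fills the corners with edge pixels
    rot = rotate(img.reshape(20, 20), angle, mode='edge')
    # Add Gaussian noise only to pixels brighter than 0.1 (the drawn strokes)
    noise = np.random.normal(0, 0.05, rot.shape)
    aug = rot + (noise * (rot > 0.1))
    return aug.flatten()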

Increasing the size of our dataset to improve model generalisation

- Interpolating between existing data points

- Applying domain-specific transformations

- Generating synthetic data using GANs

- ...

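import numpy as np

def numerical_smote(data, k=5):
    # SMOTE-style interpolation for a 1-D NumPy array of numerical values
    aug_data = []
    for i in range(len(data)):
        # Candidate neighbours: the distinct values different from data[i]
        uniq_values = np.unique(data[data != data[i]])
        # The k values closest to data[i]
        dists = np.abs(uniq_values - data[i])
        k_neigs = uniq_values[np.argsort(dists)[:k]]
        for neig in k_neigs:
            # New sample at a random point between data[i] and the neighbour
            sample = data[i] + np.random.random() * (neig - data[i])
            aug_data.append(sample)
    return np.array(aug_data)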

SMOTE (Synthetic Minority Over-sampling Technique)

- Finds the k nearest neighbours of each data point

- Creates new samples by interpolating between the original data point and each neighbour
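The `numerical_smote` function works on a single numerical column and interpolates across all values. SMOTE as usually described operates in the full feature space and only on the minority class; the minimal sketch below follows that description using scikit-learn's `NearestNeighbors`. The function name `smote_minority` and the toy points are hypothetical.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

def smote_minority(X_min, n_new, k=5, seed=0):
    """Create n_new synthetic rows from the minority-class matrix X_min (SMOTE-style sketch)."""
    rng = np.random.default_rng(seed)
    # Ask for k + 1 neighbours because each point's nearest neighbour is itself
    nn = NearestNeighbors(n_neighbors=k + 1).fit(X_min)
    _, idx = nn.kneighbors(X_min)
    synthetic = np.empty((n_new, X_min.shape[1]))
    for j in range(n_new):
        i = rng.integers(len(X_min))                # pick a random minority sample
        neighbour = X_min[rng.choice(idx[i, 1:])]   # one of its k nearest neighbours
        gap = rng.random()                          # position along the segment, in [0, 1)
        synthetic[j] = X_min[i] + gap * (neighbour - X_min[i])
    return synthetic

# Made-up minority-class samples with two features
X_min = np.array([[1.0, 2.0], [1.5, 1.8], [2.0, 2.2],
                  [1.2, 2.5], [1.8, 1.9], [1.4, 2.1]])
X_new = smote_minority(X_min, n_new=10, k=3)
print(X_new.shape)  # (10, 2)
```

Every synthetic point lies on a segment between two real minority samples. For production use, the imbalanced-learn package provides a `SMOTE` class in `imblearn.over_sampling`.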

Feature Engineering

Process of creating, transforming, and selecting features in our data, combining domain knowledge and creativity.

Creating new features can help capture important patterns or relationships in the data:

- Extracting information from existing features

- Combining existing features

- Adding domain knowledge rules

- ...

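import numpy as np
import pandas as pd

# Assumes `data` is a DataFrame with a numeric 'Age' column (e.g., Titanic)
# Bins are right-inclusive: (0, 12], (12, 18], (18, 35], (35, 60], (60, inf)
# 'Senior' is an added bin so that ages above 60 do not become NaN
data['AgeGroup'] = pd.cut(data['Age'],
                          bins=[0, 12, 18, 35, 60, np.inf],
                          labels=['Child',
                                  'Teenager',
                                  'Young Adult',
                                  'Adult',
                                  'Senior'])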
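Combining existing features works in a similar way. A common illustration on the Titanic data adds the sibling/spouse (`SibSp`) and parent/child (`Parch`) counts, plus the passenger, into a family-size feature, then turns a simple rule into a flag. This is a minimal sketch; the rows and the names `FamilySize` and `IsAlone` are made up for illustration.

```python
import pandas as pd

# Made-up rows using the Titanic column names
data = pd.DataFrame({
    'SibSp': [1, 0, 3, 0],
    'Parch': [0, 0, 2, 1],
})

# Combine two counts into one feature: relatives on board plus the passenger
data['FamilySize'] = data['SibSp'] + data['Parch'] + 1
# A domain rule turned into a binary feature
data['IsAlone'] = (data['FamilySize'] == 1).astype(int)

print(data)
```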

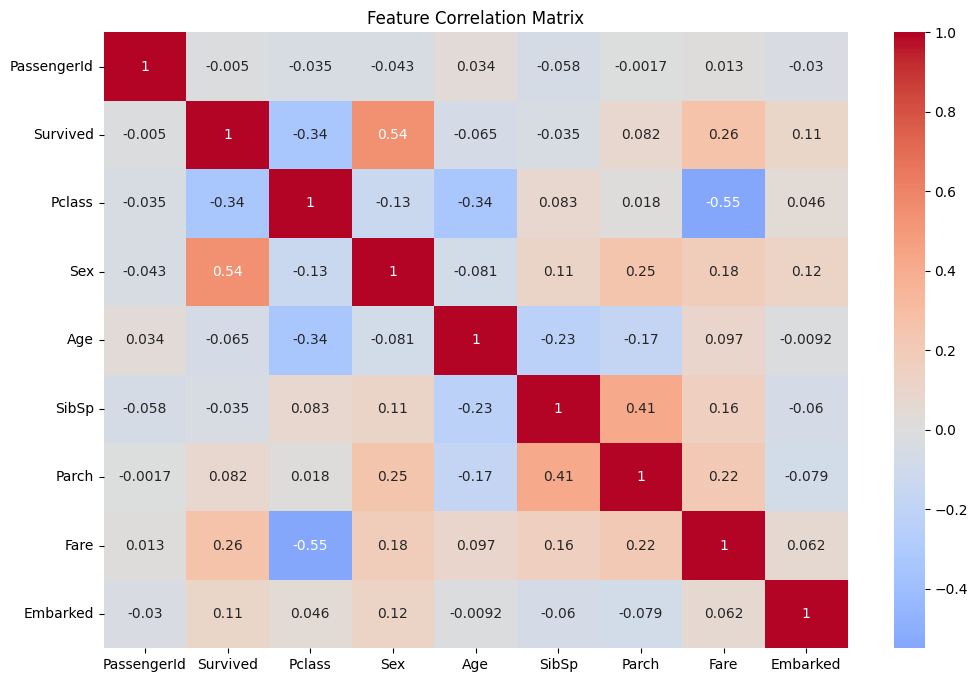

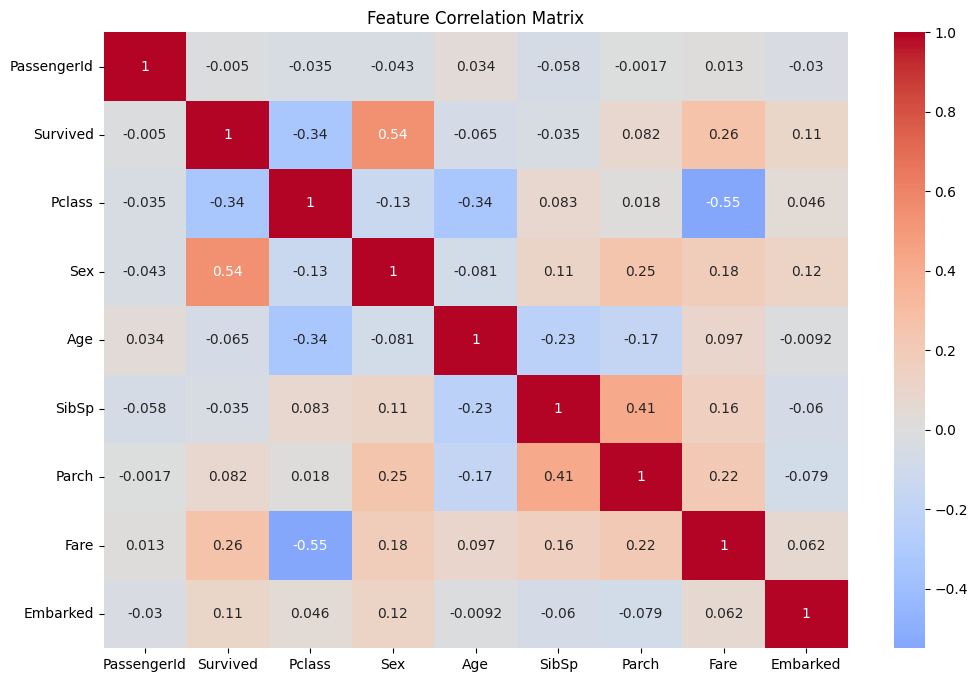

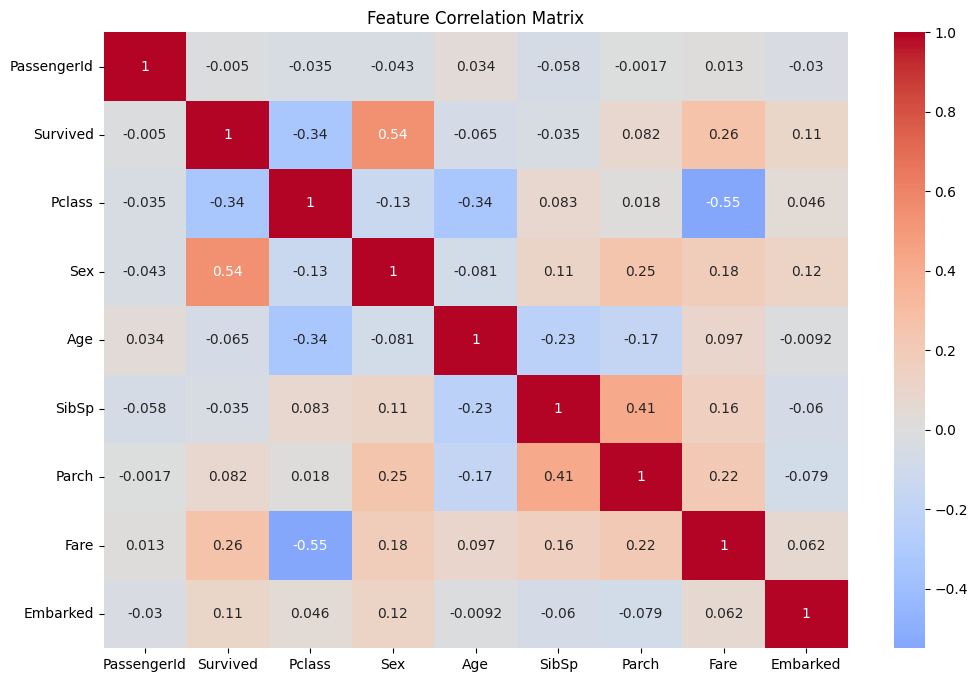

Selecting (or extracting) the most important features in our data reduces dimensionality, helps prevent overfitting, can improve interpretability, and reduces training time:

- Correlation Matrix with Heatmap

- Decision Trees

- Principal Component Analysis (PCA)

- ...

A correlation matrix, usually shown as a heatmap, visualises the pairwise correlations between features; highly correlated features carry redundant information.

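import matplotlib.pyplot as plt
import seaborn as sns

# Pairwise Pearson correlations; numeric_only skips non-numeric columns
corr_matrix = data.corr(numeric_only=True)

plt.figure(figsize=(12, 8))
# annot writes each coefficient in its cell; center=0 centres the colour map at zero
sns.heatmap(corr_matrix, annot=True, center=0)
plt.title('Feature Correlation Matrix')
plt.show()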
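A heatmap is read by eye, but the same matrix can also drive a simple selection rule. The minimal sketch below, on made-up columns, drops one feature from every pair whose absolute correlation exceeds a threshold (0.9 here, an arbitrary choice).

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 200
area = rng.normal(100, 20, n)
# Made-up data: 'rooms' is built from 'area', so the two are strongly correlated
data = pd.DataFrame({
    'area': area,
    'rooms': area / 25 + rng.normal(0, 0.1, n),
    'age': rng.uniform(0, 80, n),
})

corr = data.corr(numeric_only=True).abs()
# Keep only the upper triangle (above the diagonal) so each pair is examined once
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.9).any()]

reduced = data.drop(columns=to_drop)
print("Dropped:", to_drop)
```

The rule keeps the first feature of each correlated pair, so which one survives depends on column order; domain knowledge should decide when the choice matters.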

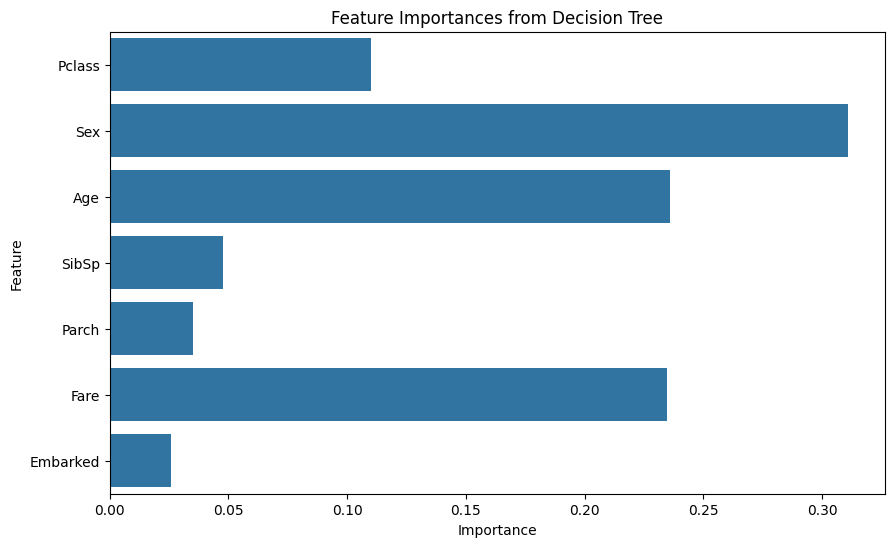

A decision tree helps identify the features that contribute most to the prediction. It recursively splits the dataset on the feature that yields the largest impurity reduction (e.g., information gain). Once trained, the tree assigns each feature an importance score based on the total impurity reduction it achieves across all splits.

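import pandas as pd
from sklearn.tree import DecisionTreeClassifier

# Assumes X is a DataFrame of features and y the target
tree = DecisionTreeClassifier(random_state=42)
tree.fit(X, y)

# Impurity-based importances (they sum to 1), highest first
importances = pd.Series(tree.feature_importances_, index=X.columns)
print(importances.sort_values(ascending=False))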

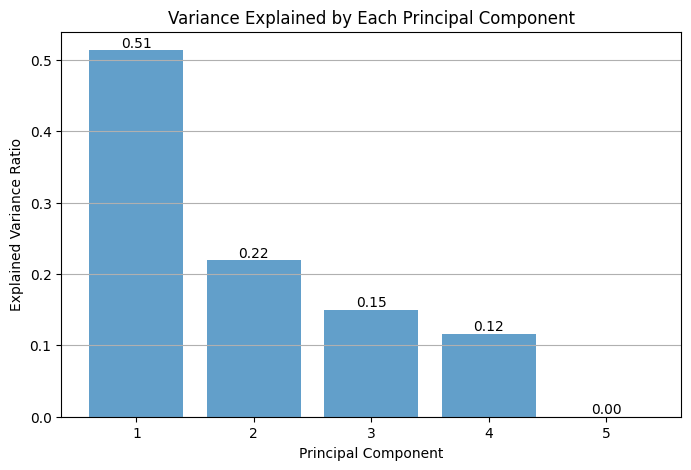

Principal Component Analysis (PCA) is a dimensionality reduction technique that transforms a set of correlated features into a set of linearly uncorrelated features called principal components. The components are ordered by the variance they capture, so keeping only the first few reduces the number of features while retaining most of the information.

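import numpy as np
from sklearn.decomposition import PCA

# Assumes X_scaled holds standardised features (PCA is sensitive to feature scale)
pca = PCA()  # keep all components for now
X_pca_transformed = pca.fit_transform(X_scaled)

# Share of the total variance captured by each component, and its running total
explained_variance = pca.explained_variance_ratio_
cumulative_variance = np.cumsum(explained_variance)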
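To see PCA end to end, the minimal sketch below standardises the Iris features, fits PCA, and keeps the smallest number of components whose cumulative explained variance reaches 95%. The 95% threshold is a common but arbitrary choice.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X = load_iris().data
X_scaled = StandardScaler().fit_transform(X)

pca = PCA()
X_pca_transformed = pca.fit_transform(X_scaled)

explained_variance = pca.explained_variance_ratio_
cumulative_variance = np.cumsum(explained_variance)
# First index where the cumulative variance reaches 95%, converted to a count
n_components = int(np.argmax(cumulative_variance >= 0.95)) + 1

print("Explained variance ratio:", explained_variance.round(3))
print("Components for 95% of the variance:", n_components)

# Refit, keeping only those components
X_reduced = PCA(n_components=n_components).fit_transform(X_scaled)
print("Reduced shape:", X_reduced.shape)
```

scikit-learn can also make this choice internally when given a fraction, e.g. `PCA(n_components=0.95)`.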

Data Address

After assessing the data (i.e., data assess), we use it to address the problem in question. This process includes applying a Machine Learning algorithm to train a Machine Learning model.

A Machine Learning algorithm is a set of instructions that are used to train a machine learning model. It defines how the model learns from data and makes predictions or decisions. Linear regression, decision trees, and neural networks are examples of machine learning algorithms.

A Machine Learning model is a program that is trained on a dataset and used to make predictions or decisions. The goal is to create a trained model that can generalise well to new, unseen data. For example, a trained model could predict house prices based on new input features.

A Machine Learning algorithm trains a model by going from a specific set of observations to a general rule (i.e., induction). Training adjusts the model's internal parameters to minimise prediction errors. For example, in a linear regression model, the algorithm adjusts the slope and intercept to minimise the difference between predicted and actual values.
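The slope-and-intercept example can be made concrete with gradient descent on the mean squared error. The minimal sketch below generates made-up data from y = 2x + 1 plus noise, then repeatedly moves both parameters against the gradient of the loss; the learning rate and number of steps are arbitrary choices that work for this data.

```python
import numpy as np

rng = np.random.default_rng(42)
x = rng.uniform(0, 10, 100)
y = 2.0 * x + 1.0 + rng.normal(0, 0.5, 100)  # true slope 2, intercept 1, plus noise

slope, intercept = 0.0, 0.0  # initial parameters
learning_rate = 0.01

for step in range(5000):
    y_pred = slope * x + intercept
    error = y_pred - y
    # Gradients of the mean squared error with respect to each parameter
    grad_slope = 2 * np.mean(error * x)
    grad_intercept = 2 * np.mean(error)
    slope -= learning_rate * grad_slope
    intercept -= learning_rate * grad_intercept

print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
```

The learned values approach the true ones but not exactly, because the model only sees the noisy observations; this is the induction step from specific data to a general rule.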

The parameters of Machine Learning models are adjusted according to the training data (i.e., seen data). For example, when training a model to predict house prices, the training data would include features like square footage, number of bedrooms, and location, along with their actual sale prices. The training data consists of vectors of attribute values.

In classification problems, the prediction (i.e., the model's output) is one of a finite set of values (e.g., sunny/cloudy/rainy or true/false). In regression problems, the model's output is a continuous number (e.g., a house price).

Predictions can deviate from the expected values. Prediction errors are quantified by a loss function that indicates to the algorithm how far the prediction is from the target value. This difference is used to update the machine learning model's internal parameters to minimise the prediction errors. Common examples include Mean Squared Error for regression problems and Cross-Entropy for classification problems.
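Both losses are short formulas. For n predictions, MSE = (1/n) * sum((y_i - y_hat_i)^2); for classification, cross-entropy is the negative log of the probability the model assigns to the true class, averaged over the examples. The minimal sketch below computes both with NumPy on made-up values.

```python
import numpy as np

# Regression: made-up true and predicted house prices (in thousands)
y_true = np.array([200.0, 300.0, 250.0])
y_pred = np.array([210.0, 290.0, 250.0])
mse = np.mean((y_true - y_pred) ** 2)
print("MSE:", mse)  # (100 + 100 + 0) / 3

# Classification: predicted probabilities for 3 classes (each row sums to 1)
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
labels = np.array([0, 1])  # index of the true class for each example
# Cross-entropy: average negative log-probability assigned to the true class
cross_entropy = -np.mean(np.log(probs[np.arange(len(labels)), labels]))
print("Cross-entropy:", round(cross_entropy, 4))
```

Squaring makes MSE penalise large errors more than small ones, while cross-entropy grows without bound as the probability of the true class approaches zero.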

Three types of feedback can be part of the inputs in our training process:

- Supervised Learning: The model is trained on labelled data to learn a mapping between inputs and the corresponding labels, so the model can make predictions on unseen data.

- Unsupervised Learning: The model is trained on unlabelled data, and it must find patterns in the data. The goal is to identify hidden structures or groupings in the data.

- Reinforcement Learning: The model learns by interacting with an environment and receiving rewards or penalties. The goal is to learn a policy that maximises the cumulative reward.
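The difference between the first two settings shows in what the algorithm receives. In this minimal sketch on the Iris data, a supervised classifier (logistic regression) gets the features and the species labels, while an unsupervised clustering algorithm (k-means, chosen here for illustration) gets only the features and forms its own groups.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

# Supervised: learn a mapping from features to the known labels
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
print("Supervised test accuracy:", round(clf.score(X_test, y_test), 3))

# Unsupervised: no labels are given; k-means looks for 3 groups in the features
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
print("Cluster sizes:", [int((kmeans.labels_ == c).sum()) for c in range(3)])
```

The supervised model can be scored against the true labels; the clusters have no names and must be interpreted afterwards.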

Conclusions

Overview

- The ML Adoption Process

- The Systems Engineering Approach

- The Data Science Process

- The ML Pipeline

- Data Access

- Data Assess

- Data Address

Next Time

- ML Deployment

- AI as a Service

- Systems Architectures

Many Thanks!
